by Chintan Trivedi

How to create realistic Grand Theft Auto 5 graphics with Deep Learning

This project is a continuation of my previous article, in which I explained how CycleGANs can be used for image style transfer and applied them to make Fortnite graphics look like PUBG.

CycleGAN is a type of Generative Adversarial Network that can mimic the visual style of one set of images and transfer it onto another. We can use it to make a game’s graphics look like those of another game or like the real world.
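To make the idea a bit more concrete, here is a minimal sketch of the cycle-consistency term that gives CycleGAN its name: an image translated to the other domain and back again should match the original. The "generators" below are toy stand-ins (simple brightness shifts), not trained networks, and all function names are hypothetical ones chosen for illustration.

```python
from typing import Callable, List

Image = List[float]  # toy "image": a flat list of pixel intensities


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    x: Image,
    y: Image,
    g_xy: Callable[[Image], Image],  # generator: domain X (game) -> domain Y (real)
    g_yx: Callable[[Image], Image],  # generator: domain Y (real) -> domain X (game)
) -> float:
    """L1 cycle loss: x -> Y -> X should recover x, and y -> X -> Y should recover y."""
    forward = l1_distance(g_yx(g_xy(x)), x)
    backward = l1_distance(g_xy(g_yx(y)), y)
    return forward + backward


# Toy stand-ins for trained generators (an assumption for illustration only).
def brighten(img: Image) -> Image:
    return [p + 0.1 for p in img]


def darken(img: Image) -> Image:
    return [p - 0.1 for p in img]


def identity(img: Image) -> Image:
    return list(img)


game_image = [0.2, 0.5, 0.7]
real_image = [0.3, 0.6, 0.8]

# Generators that undo each other give a loss close to zero.
good_loss = cycle_consistency_loss(game_image, real_image, brighten, darken)
# Generators that do not undo each other are penalized.
bad_loss = cycle_consistency_loss(game_image, real_image, brighten, identity)
```

In the real model this term is added to the usual adversarial losses, so the generators have to produce convincing images in the other domain while still preserving the content of the input.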

In this article, I want to share more results using the same CycleGAN algorithm I covered in my previous work. First, I’ll try to improve GTA 5 graphics by adapting them to look like the real world. Next, I’ll cover how we can achieve the same photo-realistic results without having to render highly detailed GTA graphics in the first place.

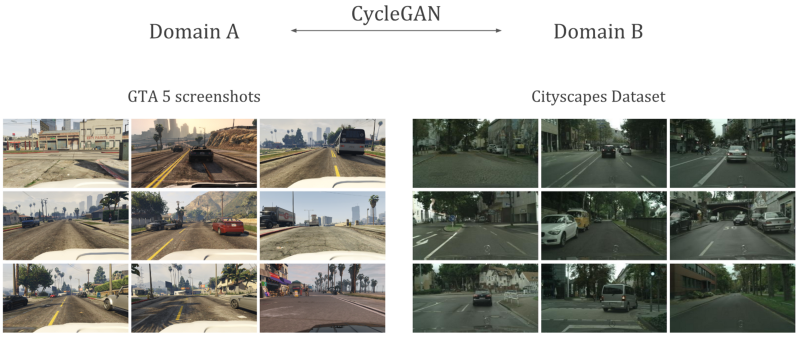

For the first task, I took screenshots of the game as the source domain, which we want to convert into something photo-realistic. The target domain comes from the Cityscapes dataset, which represents the real world that we want our game to resemble.
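One detail worth spelling out is that CycleGAN does not need matched pairs: a game screenshot and a real photo in the same training step do not have to show the same scene. A rough sketch of what sampling from the two domains could look like is below. The file names and the `unpaired_batches` helper are hypothetical, not taken from my training code.

```python
import random
from typing import Iterator, List, Tuple


def unpaired_batches(
    source: List[str], target: List[str], steps: int, seed: int = 0
) -> Iterator[Tuple[str, str]]:
    """Yield (source, target) samples drawn independently from each domain."""
    rng = random.Random(seed)
    for _ in range(steps):
        # The two picks are unrelated: no screenshot is tied to a specific photo.
        yield rng.choice(source), rng.choice(target)


# Hypothetical file names standing in for the two image folders.
gta_frames = ["gta_0001.png", "gta_0002.png", "gta_0003.png"]
city_photos = ["cityscapes_a.png", "cityscapes_b.png"]

pairs = list(unpaired_batches(gta_frames, city_photos, steps=4))
```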

CycleGAN results

After about three days of training, for roughly 100 epochs, the CycleGAN model seems to do a very nice job of adapting GTA to the real-world domain. I really like how the smaller details are not lost in translation and how the image retains its sharpness even at such a low resolution.

The main downside is that this neural network turned out to be quite materialistic: it hallucinates a Mercedes logo everywhere, ruining the almost perfect conversion from GTA to the real world. (That’s because the Cityscapes images were recorded from a Mercedes, and its hood ornament is visible in the frames.)

How to achieve the same photo-realistic graphics with less effort

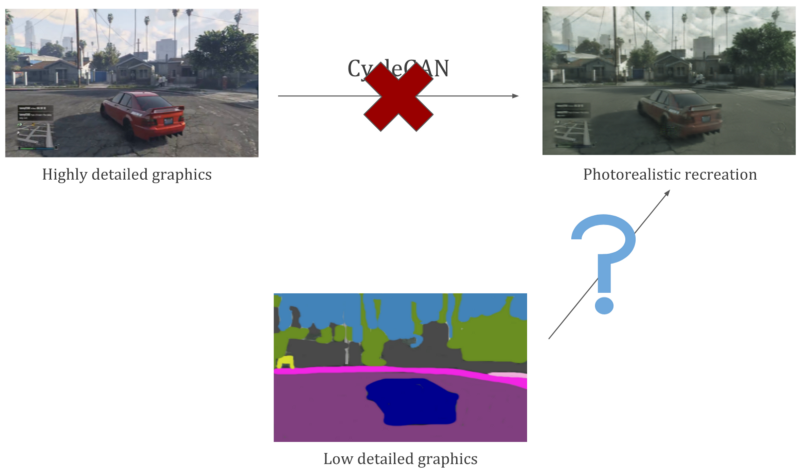

While this approach may seem very promising for improving game graphics, I do not think the real potential lies in this pipeline: it seems impractical to render such a highly detailed image only to convert it into something else.

Wouldn’t it be better to synthesize an image of similar quality while spending much less time and effort designing the game in the first place? I think the real potential lies in rendering objects with low detail and letting the neural net synthesize the final image from this rendering.

So, based on the semantic labels available in the Cityscapes dataset, I segmented the objects in a GTA screenshot, which gives us a low-detail representation of the graphics. Think of this as a game rendering of only a few objects, like the road, cars, houses, and sky, without designing them in detail. This acts as the input to our image style transfer model instead of the highly detailed screenshot from the game.

Let’s see what quality of final images can be generated from such low-detail semantic maps using CycleGANs.
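As a rough illustration of what such a low-detail input looks like, the sketch below turns a tiny grid of class names into flat colors, one color per object class. The colors follow the Cityscapes palette as far as I recall it, so treat them as an assumption, and the `render_semantic_map` helper is a hypothetical name used only for this example.

```python
from typing import Dict, List, Tuple

RGB = Tuple[int, int, int]

# Per-class colors in the style of the Cityscapes palette (assumed values).
PALETTE: Dict[str, RGB] = {
    "road": (128, 64, 128),
    "building": (70, 70, 70),
    "sky": (70, 130, 180),
    "car": (0, 0, 142),
}

UNKNOWN: RGB = (0, 0, 0)  # fallback color for classes missing from the palette


def render_semantic_map(labels: List[List[str]]) -> List[List[RGB]]:
    """Replace every class name in the grid with its flat color."""
    return [[PALETTE.get(name, UNKNOWN) for name in row] for row in labels]


# A tiny 3x4 "scene": sky on top, a building and a car in the middle, road below.
scene = [
    ["sky", "sky", "sky", "sky"],
    ["building", "building", "car", "sky"],
    ["road", "road", "road", "road"],
]

semantic_image = render_semantic_map(scene)
```

Every pixel carries only a class, with no texture or lighting, and that is all the generator gets to work from.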

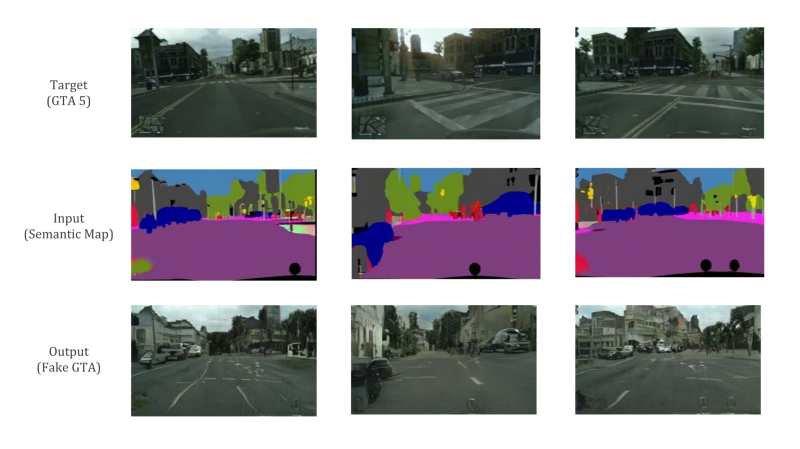

Results of image synthesis from semantic maps

Here are a few examples of how it looks when we recreate GTA graphics from semantic maps. Note that I did not create these maps by hand. That seemed really tedious, so I simply let another CycleGAN model do it (it is trained to perform image segmentation using the Cityscapes dataset).

It seems like a good conversion from far away, but looking closely, it’s quite obvious that the image is fake and lacks fine detail.

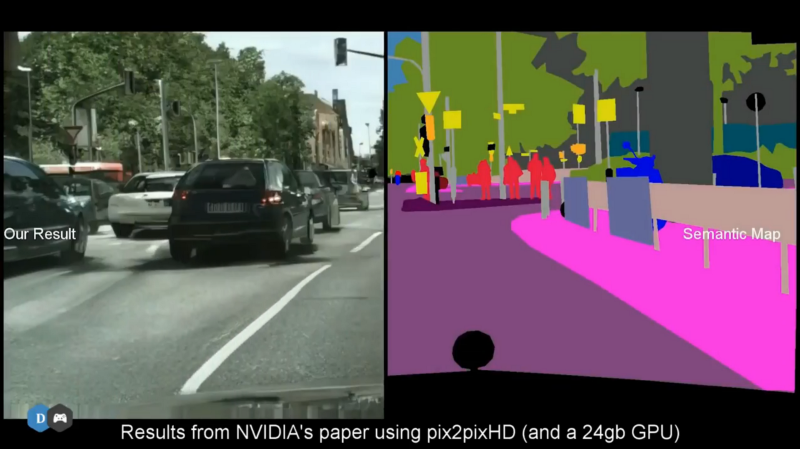

Now, these results are at 256p and were generated on a GPU with 8 GB of memory. However, the authors of the pix2pixHD paper have shown that it is possible to create a much more detailed 2048 x 1024 image using a GPU with over 24 GB of memory. pix2pixHD is a supervised counterpart to CycleGAN, trained on paired images to perform the same task. And boy does the fake image look pretty darn convincing!
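To put those resolutions in perspective, here is a quick back-of-the-envelope calculation of how many more pixels the high-resolution output contains. I am assuming that "256p" means either a 256 x 256 or a 512 x 256 image, since only the height is given above, and the helper names are just for illustration.

```python
from typing import Tuple

Size = Tuple[int, int]  # (width, height) in pixels


def pixel_count(size: Size) -> int:
    width, height = size
    return width * height


def pixel_ratio(high: Size, low: Size) -> float:
    """How many times more pixels `high` has than `low`."""
    return pixel_count(high) / pixel_count(low)


high_res = (2048, 1024)

print(pixel_ratio(high_res, (256, 256)))  # 32.0
print(pixel_ratio(high_res, (512, 256)))  # 16.0
```

Either way, the network has to produce 16 to 32 times as many pixels. The memory needed for a convolutional network's activations grows roughly with the pixel count, which is one reason the larger images call for a bigger GPU.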

Conclusion

GANs have great potential to change how the entertainment industry will produce content going forward. They are capable of producing much better results than humans and in much less time.

The same is applicable to the gaming industry as well. I’m sure that in a few years, this will revolutionize how game graphics are generated. It will be much easier to simply mimic the real world than to recreate everything from scratch.

Once we attain that, rolling out new games will also be much faster. Exciting times ahead with these advancements in Deep Learning!

More results in video format

All the above results and more can be found on my YouTube channel and in the video embedded below. If you liked it, feel free to subscribe to my channel to follow more of my work.
