by Richard Freeman, PhD

How to work in Data Science, AI, or Big Data based on my experience

In summer 2013, I interviewed for a lead role in the data science and analytics team at tech-for-good company JustGiving. During the interview, I said I planned to deliver batch machine learning, graph analytics and streaming analytics systems, both in-house and in the cloud.

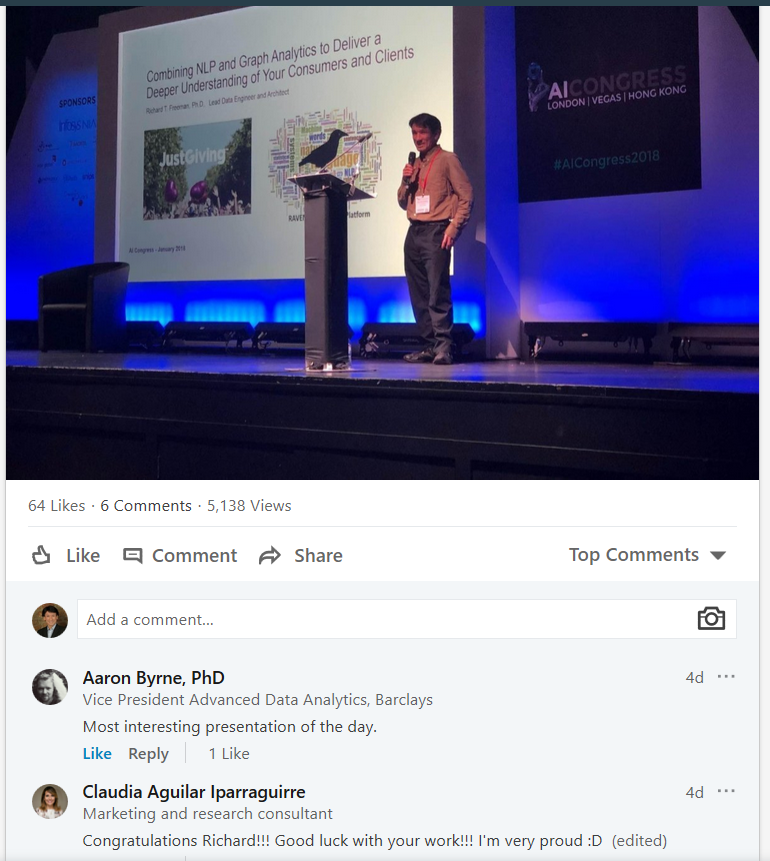

A few years later, my former boss Mike Bugembe and I were both presenting at international conferences, winning awards and becoming authors!

Here is my story, and what I learnt on the journey — plus my recommendations for you.

Why Big Data Engineering and Data Science?

I’ve always been interested in artificial intelligence (AI), machine learning (ML) and natural language processing (NLP). In particular, I’ve been interested in scalable systems, and making robots more intelligent and responsive.

My interest in data engineering comes from my background as a solutions architect. In that role, I enjoyed building cloud-based systems to store and process data to derive new insight and knowledge.

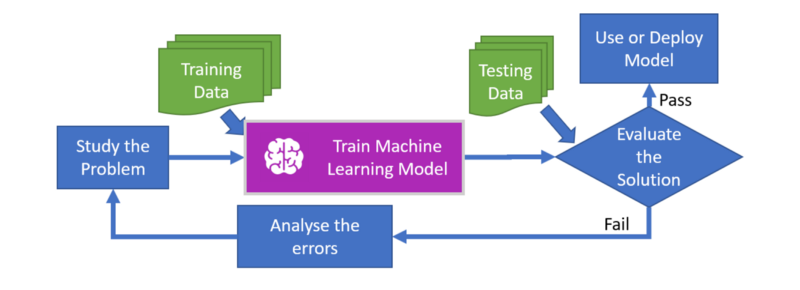

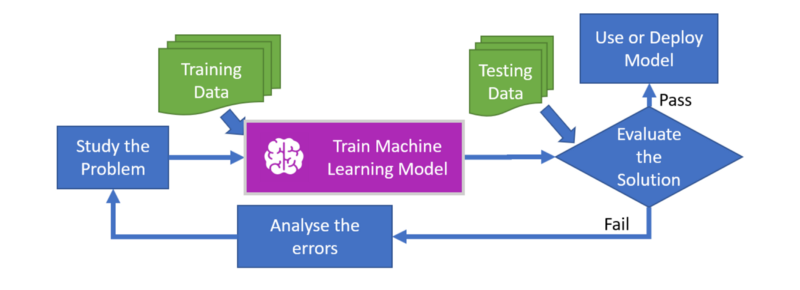

I also develop big data and ML pipelines to automate the whole ML process. This helps data scientists and analysts save time preparing data for training and testing their algorithms, running metrics and deriving key performance indicators at scale.

Data preparation is particularly important. Data scientists typically spend about 80% of their time on it. Having access to data shaped in the right way makes them more productive and happier.

My previous background

I previously earned a Master’s degree in computer systems engineering, and a PhD in ML and NLP. I completed both at the University of Manchester.

Rather than join a specialised vendor in my PhD area of expertise, I decided to broaden my skills and gain more client exposure by joining Capgemini. Capgemini are a large global consulting, technology and outsourcing services company.

I worked my way from being a developer to a solution architect. There, I helped deliver large-scale projects for Fortune Global 500 companies in sectors including insurance, retail banking, financial services, and central government.

I then joined PageGroup. There, I worked as a lead developer and architect on a global transformation programme across 34 countries. I led the technical delivery of search, multi-channel communication, business intelligence, text analytics, job board integration, and advertising solutions.

Current roles

Now I am a lead big data and machine learning engineer at JustGiving. JustGiving is a tech-for-good company that’s helped 26 million users in 164 countries raise $5 billion for good causes. It was acquired in 2017 by Blackbaud — the world’s leading software company powering social good.

I currently lead the delivery and architecture of our in-house data science platform RAVEN and production ML systems. These were initially deployed with Azure, but later hosted in AWS. I also dive in as a data scientist specialising in scalable streaming analytics, ML and NLP algorithms.

I share my technical experience and knowledge of AWS, stream processing, serverless stacks, ML and NLP, both internally and externally. I also present regularly at industry conferences, open source my code, and write technical blog posts on Medium and for AWS, such as Analyze a Time Series in Real Time.

I’m also an independent freelance advisor and consultant helping organisations with cloud architecture, serverless computing and ML at Starwolf.

A typical day in the office

JustGiving is still a start-up at heart, so there is no typical day. I get involved in various tasks, such as capturing data and report requirements, engineering new data pipelines, investigating operational issues, running data experiments, analysing unstructured data for useful patterns, exploring new ways to use the data to answer questions, presenting a data story, and sharing my knowledge and experience. This means that I work closely with marketing, product managers and product analysts to understand their data needs and which metrics and predictions are important to them.

Speaking to others outside your specialist area helps to broaden your views, gives you a new perspective, and reveals new areas where you can apply your skills.

On the technical side, I work with engineers, data analysts, developers, business intelligence analysts, operations, and data scientists to support their data and platform requirements.

Things I enjoy about work

I am passionate about working with huge data sets. You face different kinds of performance, cost and operational issues that require you to think differently in order to scale your data warehouse, your ETL processes, your algorithms, and how you present your results. A lot of what you know about data warehousing with millions of records goes out the window when you hit hundreds of billions of rows and need to iterate or do complex joins to run ML data preparation queries.

Building and running large-scale data infrastructure and distributed model training are active areas in academia and industry. They are evolving at a fast pace, with new tooling being introduced every few months. I like to use cloud solutions in an innovative way to improve our in-house data science platform, enhance our business processes, and make data insights available to internal and external users.

I’ve found that a lot of companies give their power away by using third parties for their web analytics solutions, rather than building their own. That data is then siloed in marketing or sales departments, is difficult if not impossible to get back in its raw form, and cannot be streamed back. This prevents you, for example, from making real-time ML recommendations or predictions directly in your product.

At JustGiving we built an in-house web analytics product called KOALA and have this data available in real time as an AWS serverless stack. This gives us a full suite of data pipelines for ML training and analytics in-house, along with tools such as MAGPIE, which allows us to create real-time metrics and insights that we can serve back to the users.

For example, here is an early version shown in this Tweet during a crowdfunding campaign for the families of the victims of the Manchester attack in May 2017.

In addition, KOALA allows us to make predictions from streaming data. It is an extremely cost-effective solution compared to paying for a vendor product. Compared to a vendor solution handling the same web traffic, KOALA is 10x cheaper and more developer friendly, and we get the raw streamed data back in real time, rather than in batches or through a proprietary, locked-down querying or reporting system.
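To give a flavour of what a real-time metric over streamed events can look like, here is a minimal sketch of a sliding-window counter. It is not how KOALA or MAGPIE are implemented; the class name, the 60-second window and the timestamps are all assumptions made up for illustration, and events are assumed to arrive in time order.

```python
from collections import deque


class SlidingWindowCounter:
    """Counts the events seen in the last `window_seconds` seconds (hypothetical helper)."""

    def __init__(self, window_seconds):
        self.window_seconds = window_seconds
        self.timestamps = deque()

    def add(self, timestamp):
        """Record one event and return the number of events in the current window."""
        self.timestamps.append(timestamp)
        # Drop events that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self.timestamps and self.timestamps[0] <= cutoff:
            self.timestamps.popleft()
        return len(self.timestamps)


# Invented page-view timestamps in seconds, arriving in order.
counter = SlidingWindowCounter(window_seconds=60)
counts = [counter.add(t) for t in [0, 10, 30, 65, 70, 200]]
print(counts)
```

Because each event updates the metric as it arrives, the result can be served back straight away instead of waiting for a batch job.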

I am also a big fan of Python and have successfully encouraged its uptake in the company and wider community for data pipelines, ML and serverless computing. Why Python? It has extensive ML libraries, scales with the likes of PySpark, and is easy to read and write.

I also enjoy working with different organisations, charities and universities, and giving back to the wider technical community with my experience and time, such as at the recent AWS and British Heart Foundation Hackathon.

The Future of Big Data, Data Science and AI

I see more people using ML, real-time analytics, graph analytics and NLP in their products and applications, not just offline on their laptops. This is accelerating as the cloud providers offer ML and NLP application program interfaces (APIs).

For real-time analytics, there is a growing demand from consumers who are much more data aware and impatient. For example, they want to know what is happening right now, see the results of their actions, and use more intelligent applications and websites that adapt as they interact with them.

On the infrastructure side, I see serverless computing and Platform as a Service (PaaS) infrastructure in the public cloud, such as AWS and Azure, becoming more prominent. Functions in serverless computing are particularly interesting for me, as they can auto-scale in less than 100 milliseconds, are highly available and are low cost. They are low cost because you only pay for the time your code is executing, rather than for an always-on machine or container as in more traditional cloud infrastructure. I’ve even shown that you can implement most of the existing container-based microservices patterns using a serverless stack.
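The pay-per-execution argument comes down to simple arithmetic, sketched below. The function names and every number here are made up for illustration and are not real cloud prices. The model is also simplified, since real providers typically bill on further dimensions such as request count and memory size.

```python
def pay_per_use_cost(invocations, seconds_per_invocation, price_per_second):
    """Cost when you are billed only while your code is running."""
    return invocations * seconds_per_invocation * price_per_second


def always_on_cost(hours, price_per_hour):
    """Cost of a machine that is billed whether or not it is busy."""
    return hours * price_per_hour


# Illustrative, invented figures for one month of a lightly used service.
serverless = pay_per_use_cost(
    invocations=100_000, seconds_per_invocation=0.5, price_per_second=0.00002
)
server = always_on_cost(hours=720, price_per_hour=0.05)
print(f"pay-per-use: {serverless:.2f}, always-on: {server:.2f}")
```

The gap narrows as traffic grows, so it is worth redoing the sums with your own workload and your provider's actual pricing.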

The open source frameworks and programming languages will also continue to grow compared to closed vendor specific products and languages, e.g. Apache Spark framework, Python, R, SQL. The same goes for data storage and access: cloud storage, data warehouses and data lakes will store data in more open rather than proprietary formats, and this will be more accessible over standard APIs or open protocols.

There will also be growing requirements to analyse unstructured and multimedia data sources, and again the cloud providers will have a growing role to play.

We will also see more companies making the transition from strategies decided on gut instinct by a few people at the top, to becoming more experiment-based, evidence-based, and data-driven, as described by my former CAO Mike Bugembe in his book. For example, the testing of new products or features, the identification of new opportunities, and strategic decisions will come more and more from data analysis, insight and predictions.

This will require more staff to get involved in data capture, data preparation, running experiments using algorithms, data visualisation and presenting results.

As such, new data orientated jobs based on creating and training data models will emerge, disrupting some of the existing specialist fields such as health care, accountancy and law. AI, Internet of things (IoT) and robotics will also replace some existing blue and white collar jobs so we will need to think about training and upskilling people to the changing landscape, and possibly introduce some kind of universal basic income.

You can draw parallels with the shift seen during the industrial revolution from the agrarian or pre-industrial times. For AI to take off, we need two things to happen: the cost of human workers has to become higher than the AI alternative, and AI has to be deployable in a scalable way.

In the much longer term, quantum computing will also disrupt the field again in terms of how we process, analyse and store data, and will transform areas like cyber security, banking and existing AI.

How to inspire people to pursue careers in data science

I think it’s a lot easier to get people interested in big data and data science than it used to be, thanks to the likes of Google and Facebook that make it fashionable to be smart and work within technology.

In addition, the growing number of young and flexible startup companies with infrastructures in the public cloud are successfully competing and winning market share from large established companies. Employers need to be willing to educate and upskill existing staff or graduates rather than solely recruit people with existing data engineering or data science skills.

For inspiring existing staff, we need to show the benefits, use cases and data sources most relevant to them, which makes them more productive and their jobs easier. With more data exploration tools available, staff in other departments outside IT or finance, such as customer support, marketing and product managers will be self-serving on the data and insights.

For people who have not worked in industry, I think we need to start early in schools and then universities. Teachers and lecturers in non-computer science subjects could make data more visual and interactive in their respective fields.

I think that almost any subject can benefit. For example, even in English literature you can draw a relationship graph of the characters and their connections, linked to the main themes, events and locations. In history classes, you could have interactive visual maps and time-evolving graph representations of key events and their dependencies.
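As a small illustration of the literature idea, the sketch below builds a character relationship graph for Romeo and Juliet using only the Python standard library. The choice of relationships, the labels and the helper name `connections` are my own simplifications for illustration.

```python
from collections import defaultdict

# A few well-known relationships from the play: (character, character, label).
relationships = [
    ("Romeo", "Juliet", "lovers"),
    ("Romeo", "Mercutio", "friends"),
    ("Romeo", "Benvolio", "cousins"),
    ("Juliet", "Tybalt", "cousins"),
    ("Tybalt", "Mercutio", "duel"),
]

# Store the graph as a dictionary of neighbours, treating links as undirected.
graph = defaultdict(dict)
for a, b, label in relationships:
    graph[a][b] = label
    graph[b][a] = label


def connections(character):
    """Return the characters directly linked to `character`, in alphabetical order."""
    return sorted(graph[character])


# The character with the most direct links is a simple measure of centrality.
most_connected = max(graph, key=lambda name: len(graph[name]))
print(most_connected, connections(most_connected))
```

The same structure could be extended with themes, events or locations as extra nodes, and then drawn with a graph visualisation tool.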

Advice I would give to someone considering a career in Big Data and Data Science

Whether you are a graduate, already working in an organisation or not from a technical background, you can benefit from analysing and understanding data. For example, data journalists are typically not from a technical or scientific background, yet are able to do simple analysis and create an interesting data story for the general public.

It’s about self-motivation: when things move at such a fast pace, you can look broadly across the sector to gain a general understanding. But you also need to focus your energy on one specific course or project and complete it. The industry also tends to repackage old technologies with some improvements as new trending ones, like cyber security, cognitive computing, chatbots, virtual reality and deep learning at the moment. So I would follow your heart for the areas you are truly interested in and want to focus on rather than the latest trend.

Behind each viral trend there have usually been early explorers that have worked and struggled on that area for years!

In terms of gaining the knowledge, it is a lot easier than it used to be. For example in the past you had to pay for specific vendor training and there was the cost of the product itself. You can now access the learning materials, data sources, and tools all for free, so there is no excuse not to get started today!

For the learning materials, a lot of the content is available for free in massive open online courses, forums, blogs, and source code repositories. Equally, there are numerous free data sources like ML datasets, open data, news feeds and social media you can use.

There are many tools out there. Some are graphical, but in my view you should learn to program in SQL, Python, or R. All three have the ability to do data science at scale thanks to frameworks like Apache Spark. I particularly like Python as it benefits from being an efficient development language with a solid test framework and numerous data science packages.

As an ML engineer or data scientist, expect to spend a lot of time on data preparation. This is an important process to master, and it involves cleaning, parsing, enriching and shaping the data so that it can be used in ML algorithms and experiments. Overall, remember that the processes, tools and data sources are always evolving, so there is no one-off unicorn training course you can do. You will need to be self-motivated and open to constantly learning and adapting to the data ecosystem.
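The four data preparation steps named above can be sketched in a few lines of standard-library Python. The raw export, its column names and the `prepare` function are invented for illustration; a real pipeline would usually run on a framework such as Apache Spark and decide more carefully what to do with bad records.

```python
import csv
import io
from datetime import datetime

# Hypothetical raw export; column names and values are invented.
RAW = """donor,amount,date
 alice ,10.50,2017-05-01
BOB,,2017-05-02
carol,25,not-a-date
dave,40.00,2017-05-03
"""


def prepare(raw_text):
    """Clean, parse, enrich and shape raw CSV text into model-ready rows."""
    rows = []
    for record in csv.DictReader(io.StringIO(raw_text)):
        # Clean: normalise whitespace and case.
        donor = record["donor"].strip().lower()
        # Parse: skip records whose fields cannot be converted.
        try:
            amount = float(record["amount"])
            date = datetime.strptime(record["date"], "%Y-%m-%d")
        except ValueError:
            continue
        # Enrich: derive a new feature (Monday is 0) from an existing field.
        weekday = date.weekday()
        # Shape: emit a flat row ready for a model or a metric.
        rows.append({"donor": donor, "amount": amount, "weekday": weekday})
    return rows


prepared = prepare(RAW)
print(prepared)
```

Here the two unparseable records are simply dropped; in practice you would often log or repair them instead, since silently losing data can bias your results.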

I would recommend that you learn another language such as Mandarin (1.1 billion speakers) or Spanish (0.5 billion speakers), to remain mobile, get more career opportunities, and be competitive within this interconnected world. This will also open your mind and give you an insight into other cultures and values, and how they use their data.

Cloud computing also means that you no longer need a physical presence in a country to operate in it, so you need to be open to building systems across regions and analysing data from many countries. Start using collaborative tools and participate in tech for good communities.

Some jobs and professions will be replaced, and some human expertise will be lost, but we will still rely on the data and algorithms. For example, once driverless transportation is widely adopted and considered safer, cheaper and more convenient than human drivers, future generations may not wish to drive a car or even have a driving licence. However, humans will still be involved in the systems that automate the driving, the creative analysis of the telemetry and IoT data, the supervision and monitoring of the ecosystem, and the wider participation in the transport industry and sharing economy.

Summary

If you want to have a career in data science, ML, or data engineering, remember that business needs still drive the software development and analysis. Think about the metrics you want to calculate that will benefit your business decisions, or the hypothesis you want to validate with an experiment.

What actions will your audience take with your results? What growth or cost savings opportunities for a business are out there? Then work back to see what data, models and infrastructure you need for the task. I think that being curious, inquisitive, and having an experimental mind are important qualities.

Feel free to connect with me on LinkedIn, follow me on Twitter, or message me with comments and questions. If you want a more personalised chat based on your requests, I’m offering short 30-minute Skype calls on career advice or mentoring for a small fee. I also do short-term consultancy, and provide expert advice and audit services to organisations building and running big data and data science platforms in the cloud.