By Aman Agarwal

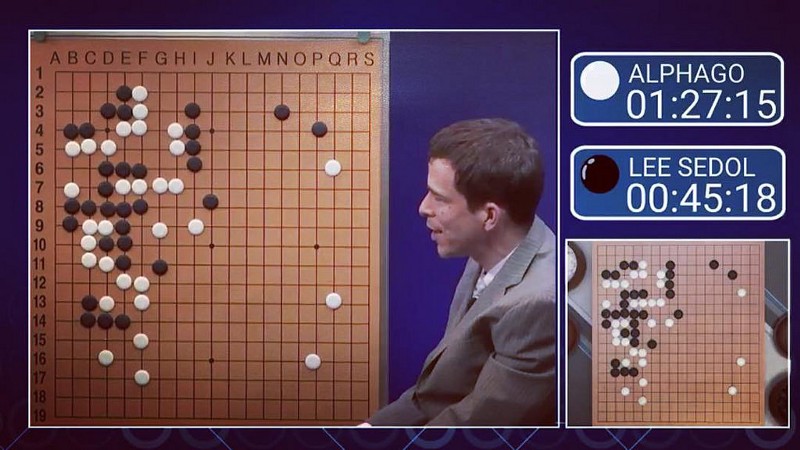

This is about AlphaGo, Google DeepMind’s Go playing AI that shook the technology world in 2016 by defeating one of the best players in the world, Lee Sedol.

Go is an ancient board game which has so many possible moves at each step that future positions are hard to predict — and therefore it requires strong intuition and abstract thinking to play. Because of this reason, it was believed that only humans could be good at playing Go. Most researchers thought that it would still take decades to build an AI which could think like that. In fact, I’m releasing this essay today because this week (March 8–15) marks the two-year anniversary of the AlphaGo vs Sedol match!

But AlphaGo didn’t stop there. 8 months later, it played 60 professional games on a Go website under disguise as a player named “Master”, and won every single game, against dozens of world champions, of course without resting between games.

Naturally this was a HUGE achievement in the field of AI and sparked worldwide discussions about whether we should be excited or worried about artificial intelligence.

Today we are going to take the original research paper published by DeepMind in the Nature journal, and break it down paragraph-by-paragraph using simple English.

After this essay, you’ll know very clearly what AlphaGo is, and how it works. I also hope that after reading this you will not believe all the news headlines made by journalists to scare you about AI, and instead feel excited about it.

Worrying about the growing achievements of AI is like worrying about the growing abilities of Microsoft Powerpoint. Yes, it will get better with time with new features being added to it, but it can’t just uncontrollably grow into some kind of Hollywood monster.

You DON’T need to know how to play Go to understand this paper. In fact, I myself have only read the first 3–4 lines in Wikipedia’s opening paragraph about it. Instead, surprisingly, I use some examples from basic Chess to explain the algorithms. You just have to know what a 2-player board game is, in which each player takes turns and there is one winner at the end. Beyond that you don’t need to know any physics or advanced math or anything.

This will make it more approachable for people who only just now started learning about machine learning or neural networks. And especially for those who don’t use English as their first language (which can make it very difficult to read such papers).

If you have NO prior knowledge of AI and neural networks, you can read the “Deep Learning” section of one of my previous essays here. After reading that, you’ll be able to get through this essay.

If you want to get a shallow understanding of Reinforcement Learning too (optional reading), you can find it here.

Here’s the original paper if you want to try reading it:

As for me: Hi I’m Aman, an AI and autonomous robots engineer. I hope that my work will save you a lot of time and effort if you were to study this on your own.

Do you speak Japanese? Ryohji Ikebe has kindly written a brief memo about this essay in Japanese, in a series of Tweets.

Let’s get started!

Abstract

As you know, the goal of this research was to train an AI program to play Go at the level of world-class professional human players.

To understand this challenge, let me first talk about something similar done for Chess. In the early 1990s, IBM came out with the Deep Blue computer which defeated the great champion Garry Kasparov in Chess. (He’s also a very cool guy, make sure to read more about him later!) How did Deep Blue play?

Well, it used a very brute force method. At each step of the game, it took a look at all the possible legal moves that could be played, and went ahead to explore each and every move to see what would happen. And it would keep exploring move after move for a while, forming a kind of HUGE decision tree of thousands of moves. And then it would come back along that tree, observing which moves seemed most likely to bring a good result. But, what do we mean by “good result”? Well, Deep Blue had many carefully designed chess strategies built into it by expert chess players to help it make better decisions — for example, how to decide whether to protect the king or get advantage somewhere else? They made a specific “evaluation algorithm” for this purpose, to compare how advantageous or disadvantageous different board positions are (IBM hard-coded expert chess strategies into this evaluation function). And finally it chooses a carefully calculated move. On the next turn, it basically goes through the whole thing again.

As you can see, this means Deep Blue thought about millions of theoretical positions before playing each move. This was not so impressive in terms of the AI software of Deep Blue, but rather in the hardware — IBM claimed it to be one of the most powerful computers available in the market at that time. It could look at 200 million board positions per second.

Now we come to Go. Just believe me that this game is much more open-ended, and if you tried the Deep Blue strategy on Go, you wouldn’t be able to play well. There would be SO MANY positions to look at at each step that it would simply be impractical for a computer to go through that hell. For example, at the opening move in Chess there are 20 possible moves. In Go the first player has 361 possible moves, and this scope of choices stays wide throughout the game.

This is what they mean by “enormous search space.” Moreover, in Go, it’s not so easy to judge how advantageous or disadvantageous a particular board position is at any specific point in the game — you kinda have to play the whole game for a while before you can determine who is winning. But let’s say you magically had a way to do both of these. And that’s where deep learning comes in!

So in this research, DeepMind used neural networks to do both of these tasks (if you have never read about neural networks yet, here’s the link again). They trained a “policy neural network” to decide which are the most sensible moves in a particular board position (so it’s like following an intuitive strategy to pick moves from any position). And they trained a “value neural network” to estimate how advantageous a particular board arrangement is for the player (or in other words, how likely you are to win the game from this position). They trained these neural networks first with human game examples (your good old ordinary supervised learning). After this the AI was able to mimic human playing to a certain degree, so it acted like a weak human player. And then to train the networks even further, they made the AI play against itself millions of times (this is the “reinforcement learning” part). With this, the AI got better because it had more practice.

With these two networks alone, DeepMind’s AI was able to play well against state-of-the-art Go playing programs that other researchers had built before. These other programs had used an already popular pre-existing game playing algorithm, called the “Monte Carlo Tree Search” (MCTS). More about this later.

But guess what, we still haven’t talked about the real deal. DeepMind’s AI isn’t just about the policy and value networks. It doesn’t use these two networks as a replacement of the Monte Carlo Tree Search. Instead, it uses the neural networks to make the MCTS algorithm work better… and it got so much better that it reached superhuman levels. THIS improved variation of MCTS is “AlphaGo”, the AI that beat Lee Sedol and went down in AI history as one of the greatest breakthroughs ever. So essentially, AlphaGo is simply an improved implementation of a very ordinary computer science algorithm. Do you understand now why AI in its current form is absolutely nothing to be scared of?

Wow, we’ve spent a lot of time on the Abstract alone.

Alright — to understand the paper from this point on, first we’ll talk about a gaming strategy called the Monte Carlo Tree Search algorithm. For now, I’ll just explain this algorithm at enough depth to make sense of this essay. But if you want to learn about it in depth, some smart people have also made excellent videos and blog posts on this:

- A short video series from Udacity

- Jeff Bradberry’s explanation of MCTS

- An MCTS tutorial by Fullstack Academy

The following section is long, but easy to understand (I’ll try my best) and VERY important, so stay with me! The rest of the essay will go much quicker.

Let’s talk about the first paragraph of the essay above. Remember what I said about Deep Blue making a huge tree of millions of board positions and moves at each step of the game? You had to do simulations and look at and compare each and every possible move. As I said before, that was a simple approach and very straightforward approach — if the average software engineer had to design a game playing AI, and had all the strongest computers of the world, he or she would probably design a similar solution.

But let’s think about how do humans themselves play chess? Let’s say you’re at a particular board position in the middle of the game. By game rules, you can do a dozen different things — move this pawn here, move the queen two squares here or three squares there, and so on. But do you really make a list of all the possible moves you can make with all your pieces, and then select one move from this long list? No — you “intuitively” narrow down to a few key moves (let’s say you come up with 3 sensible moves) that you think make sense, and then you wonder what will happen in the game if you chose one of these 3 moves. You might spend 15–20 seconds considering each of these 3 moves and their future — and note that during these 15 seconds you don’t have to carefully plan out the future of each move; you can just “roll out” a few mental moves guided by your intuition without TOO much careful thought (well, a good player would think farther and more deeply than an average player). This is because you have limited time, and you can’t accurately predict what your opponent will do at each step in that lovely future you’re cooking up in your brain. So you’ll just have to let your gut feeling guide you. I’ll refer to this part of the thinking process as “rollout”, so take note of it!

So after “rolling out” your few sensible moves, you finally say screw it and just play the move you find best.

Then the opponent makes a move. It might be a move you had already well anticipated, which means you are now pretty confident about what you need to do next. You don’t have to spend too much time on the rollouts again. OR, it could be that your opponent hits you with a pretty cool move that you had not expected, so you have to be even more careful with your next move.

This is how the game carries on, and as it gets closer and closer to the finishing point, it would get easier for you to predict the outcome of your moves — so your rollouts don’t take as much time.

The purpose of this long story is to describe what the MCTS algorithm does on a superficial level — it mimics the above thinking process by building a “search tree” of moves and positions every time. Again, for more details you should check out the links I mentioned earlier. The innovation here is that instead of going through all the possible moves at each position (which Deep Blue did), it instead intelligently selects a small set of sensible moves and explores those instead. To explore them, it “rolls out” the future of each of these moves and compares them based on their imagined outcomes.

(Seriously — this is all I think you need to understand this essay)

Now — coming back to the screenshot from the paper. Go is a “perfect information game” (please read the definition in the link, don’t worry it’s not scary). And theoretically, for such games, no matter which particular position you are at in the game (even if you have just played 1–2 moves), it is possible that you can correctly guess who will win or lose (assuming that both players play “perfectly” from that point on). I have no idea who came up with this theory, but it is a fundamental assumption in this research project and it works.

So that means, given a state of the game s, there is a function v*(s) which can predict the outcome, let’s say probability of you winning this game, from 0 to 1. They call it the “optimal value function”. Because some board positions are more likely to result in you winning than other board positions, they can be considered more “valuable” than the others. Let me say it again: Value = Probability between 0 and 1 of you winning the game.

But wait — say there was a girl named Foma sitting next to you while you play Chess, and she keeps telling you at each step if you’re winning or losing. “You’re winning… You’re losing… Nope, still losing…” I think it wouldn’t help you much in choosing which move you need to make. She would also be quite annoying. What would instead help you is if you drew the whole tree of all the possible moves you can make, and the states that those moves would lead to — and then Foma would tell you for the entire tree which states are winning states and which states are losing states. Then you can choose moves which will keep leading you to winning states. All of a sudden Foma is your partner in crime, not an annoying friend. Here, Foma behaves as your optimal value function v*(s). Earlier, it was believed that it’s not possible to have an accurate value function like Foma for the game of Go, because the games had so much uncertainty.

BUT — even if you had the wonderful Foma, this wonderland strategy of drawing out all the possible positions for Foma to evaluate will not work very well in the real world. In a game like Chess or Go, as we said before, if you try to imagine even 7–8 moves into the future, there can be so many possible positions that you don’t have enough time to check all of them with Foma.

So Foma is not enough. You need to narrow down the list of moves to a few sensible moves that you can roll out into the future. How will your program do that? Enter Lusha. Lusha is a skilled Chess player and enthusiast who has spent decades watching grand masters play Chess against each other. She can look at your board position, look quickly at all the available moves you can make, and tell you how likely it would be that a Chess expert would make any of those moves if they were sitting at your table. So if you have 50 possible moves at a point, Lusha will tell you the probability that each move would be picked by an expert. Of course, a few sensible moves will have a much higher probability and other pointless moves will have very little probability. For example: if in Chess, let’s say your Queen is in danger in one corner of the game, you might still have the option to move a little pawn in another corner of the game She is your policy function, p(a\s). For a given state s, she can give you probabilities for all the possible moves that an expert would make.

Wow — you can take Lusha’s help to guide you in how to select a few sensible moves, and Foma will tell you the likelihood of winning from each of those moves. You can choose the move that both Foma and Lusha approve. Or, if you want to be extra careful, you can roll out the moves selected by Lusha, have Foma evaluate them, pick a few of them to roll out further into the future, and keep letting Foma and Lusha help you predict VERY far into the game’s future — much quicker and more efficient than to go through all the moves at each step into the future. THIS is what they mean by “reducing the search space”. Use a value function (Foma) to predict outcomes, and use a policy function (Lusha) to give you grand-master probabilities to help narrow down the moves you roll out. These are called “Monte Carlo rollouts”. Then while you backtrack from future to present, you can take average values of all the different moves you rolled out, and pick the most suitable action. So far, this has only worked on a weak amateur level in Go, because the policy functions and value functions that they used to guide these rollouts weren’t that great.

Phew.

The first line is self explanatory. In MCTS, you can start with an unskilled Foma and unskilled Lusha. The more you play, the better they get at predicting solid outcomes and moves. “Narrowing the search to a beam of high probability actions” is just a sophisticated way of saying, “Lusha helps you narrow down the moves you need to roll out by assigning them probabilities that an expert would play them”. Prior work has used this technique to achieve strong amateur level AI players, even with simple (or “shallow” as they call it) policy functions.

Yeah, convolutional neural networks are great for image processing. And since a neural network takes a particular input and gives an output, it is essentially a function, right? So you can use a neural network to become a complex function. So you can just pass in an image of the board position and let the neural network figure out by itself what’s going on. This means it’s possible to create neural networks which will behave like VERY accurate policy and value functions. The rest is pretty self explanatory.

Here we discuss how Foma and Lusha were trained. To train the policy network (predicting for a given position which moves experts would pick), you simply use examples of human games and use them as data for good old supervised learning.

And you want to train another slightly different version of this policy network to use for rollouts; this one will be smaller and faster. Let’s just say that since Lusha is so experienced, she takes some time to process each position. She’s good to start the narrowing-down process with, but if you try to make her repeat the process , she’ll still take a little too much time. So you train a faster policy network for the rollout process (I’ll call it… Lusha’s younger brother Jerry? I know I know, enough with these names). After that, once you’ve trained both of the slow and fast policy networks enough using human player data, you can try letting Lusha play against herself on a Go board for a few days, and get more practice. This is the reinforcement learning part — making a better version of the policy network.

Then, you train Foma for value prediction: determining the probability of you winning. You let the AI practice through playing itself again and again in a simulated environment, observe the end result each time, and learn from its mistakes to get better and better.

I won’t go into details of how these networks are trained. You can read more technical details in the later section of the paper (‘Methods’) which I haven’t covered here. In fact, the real purpose of this particular paper is not to show how they used reinforcement learning on these neural networks. One of DeepMind’s previous papers, in which they taught AI to play ATARI games, has already discussed some reinforcement learning techniques in depth (And I’ve already written an explanation of that paper here). For this paper, as I lightly mentioned in the Abstract and also underlined in the screenshot above, the biggest innovation was the fact that they used RL with neural networks for improving an already popular game-playing algorithm, MCTS. RL is a cool tool in a toolbox that they used to fine-tune the policy and value function neural networks after the regular supervised training. This research paper is about proving how versatile and excellent this tool it is, not about teaching you how to use it. In television lingo, the Atari paper was a RL infomercial and this AlphaGo paper is a commercial.

Alright we’re finally done with the “introduction” parts. By now you already have a very good feel for what AlphaGo was all about.

Next, we’ll go slightly deeper into each thing we discussed above. You might see some ugly and dangerous looking mathematical equations and expressions, but they’re simple (I explain them all). Relax.

A quick note before you move on. Would you like to help me write more such essays explaining cool research papers? If you’re serious, I’d be glad to work with you. Please leave a comment and I’ll get in touch with you.

A photo of two Japanese women playing Go, placed here in case you’re already sick of looking at long slabs of text.

A photo of two Japanese women playing Go, placed here in case you’re already sick of looking at long slabs of text.

So, the first step is in training our policy NN (Lusha), to predict which moves are likely to be played by an expert. This NN’s goal is to allow the AI to play similar to an expert human. This is a convolutional neural network (as I mentioned before, it’s a special kind of NN that is very useful in image processing) that takes in a simplified image of a board arrangement. “Rectifier nonlinearities” are layers that can be added to the network’s architecture. They give it the ability to learn more complex things. If you’ve ever trained NNs before, you might have used the “ReLU” layer. That’s what these are.

The training data here was in the form of random pairs of board positions, and the labels were the actions chosen by humans when they were in those positions. Just regular supervised learning.

Here they use “stochastic gradient ASCENT”. Well, this is an algorithm for backpropagation. Here, you’re trying to maximise a reward function. And the reward function is just the probability of the action predicted by a human expert; you want to increase this probability. But hey — you don’t really need to think too much about this. Normally you train the network so that it minimises a loss function, which is essentially the error/difference between predicted outcome and actual label. That is called gradient DESCENT. In the actual implementation of this research paper, they have indeed used the regular gradient descent. You can easily find a loss function that behaves opposite to the reward function such that minimising this loss will maximise the reward.

The policy network has 13 layers, and is called “SL policy” network (SL = supervised learning). The data came from a… I’ll just say it’s a popular website on which millions of people play Go. How good did this SL policy network perform?

It was more accurate than what other researchers had done earlier. The rest of the paragraph is quite self-explanatory. As for the “rollout policy”, you do remember from a few paragraphs ago, how Lusha the SL policy network is slow so it can’t integrate well with the MCTS algorithm? And we trained another faster version of Lusha called Jerry who was her younger brother? Well, this refers to Jerry right here. As you can see, Jerry is just half as accurate as Lusha BUT it’s thousands of times faster! It will really help get through rolled out simulations of the future faster, when we apply the MCTS.

For this next section, you don’t have to know about Reinforcement Learning already, but then you’ll have to assume that whatever I say works. If you really want to dig into details and make sure of everything, you might want to read a little about RL first.

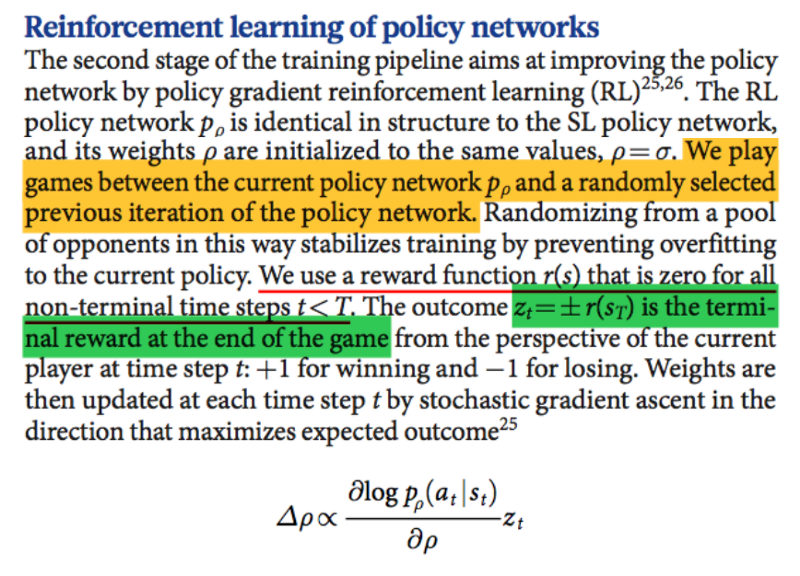

Once you have the SL network, trained in a supervised manner using human player moves with the human moves data, as I said before you have to let her practice by itself and get better. That’s what we’re doing here. So you just take the SL policy network, save it in a file, and make another copy of it.

Then you use reinforcement learning to fine-tune it. Here, you make the network play against itself and learn from the outcomes.

But there’s a problem in this training style.

If you only forever practice against ONE opponent, and that opponent is also only practicing with you exclusively, there’s not much of new learning you can do. You’ll just be training to practice how to beat THAT ONE player. This is, you guessed it, overfitting: your techniques play well against one opponent, but don’t generalize well to other opponents. So how do you fix this?

Well, every time you fine-tune a neural network, it becomes a slightly different kind of player. So you can save this version of the neural network in a list of “players”, who all behave slightly differently right? Great — now while training the neural network, you can randomly make it play against many different older and newer versions of the opponent, chosen from that list. They are versions of the same player, but they all play slightly differently. And the more you train, the MORE players you get to train even more with! Bingo!

In this training, the only thing guiding the training process is the ultimate goal, i.e winning or losing. You don’t need to specially train the network to do things like capture more area on the board etc. You just give it all the possible legal moves it can choose from, and say, “you have to win”. And this is why RL is so versatile; it can be used to train policy or value networks for any game, not just Go.

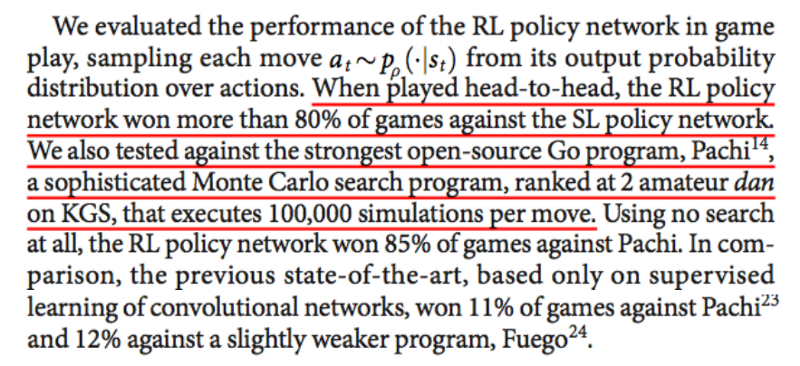

Here, they tested how accurate this RL policy network was, just by itself without any MCTS algorithm. As you would remember, this network can directly take a board position and decide how an expert would play it — so you can use it to single-handedly play games.

Well, the result was that the RL fine-tuned network won against the SL network that was only trained on human moves. It also won against other strong Go playing programs.

Must note here that even before training this RL policy network, the SL policy network was already better than the state of the art — and now, it has further improved! And we haven’t even come to the other parts of the process like the value network.

Did you know that baby penguins can sneeze louder than a dog can bark? Actually that’s not true, but I thought you’d like a little joke here to distract from the scary-looking equations above. Coming to the essay again: we’re done training Lusha here. Now back to Foma — remember the “optimal value function”: v(s) -> that only tells you how likely you are to win in your current board position if both players play perfectly from that point on?

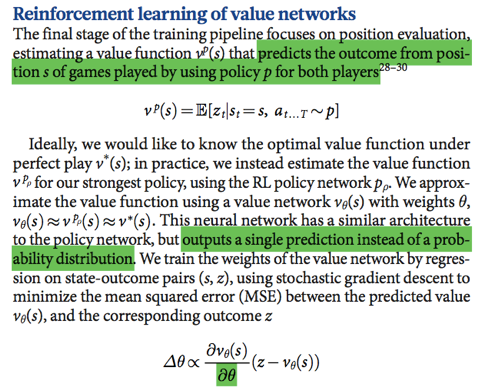

So obviously, to train an NN to become our value function, we would need a perfect player… which we don’t have. So we just u*se our strongest player, which happens to be our RL policy network.

It takes the current state board state s, and outputs the probability that you will win the game. You play a game and get to know the outcome (win or loss). Each of the game states act as a data sample, and the outcome of that game acts as the label. So by playing a 50-move game, you have 50 data samples for value prediction.

Lol, no. This approach is naive. You can’t use all 50 moves from the game and add them to the dataset.

The training data set had to be chosen carefully to avoid overfitting. Each move in the game is very similar to the next one, because you only move once and that gives you a new position, right? If you take the states at all 50 of those moves and add them to the training data with the same label, you basically have lots of “kinda duplicate” data, and that causes overfitting. To prevent this, you choose only very distinct-looking game states. So for example, instead of all 50 moves of a game, you only choose 5 of them and add them to the training set. DeepMind took 30 million positions from 30 million different games, to reduce any chances of there being duplicate data. And it worked!

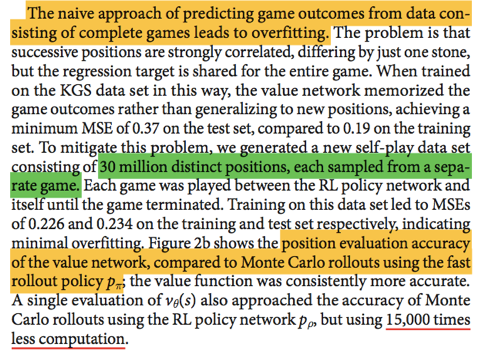

Now, something conceptual here: there are two ways to evaluate the value of a board position. One option is a magical optimal value function (like the one you trained above). The other option is to simply roll out into the future using your current policy (Lusha) and look at the final outcome in this roll out. Obviously, the real game would rarely go by your plans. But DeepMind compared how both of these options do. You can also do a mixture of both these options. We will learn about this “mixing parameter” a little bit later, so make a mental note of this concept!

Well, your single neural network trying to approximate the optimal value function is EVEN BETTER than doing thousands of mental simulations using a rollout policy! Foma really kicked ass here. When they replaced the fast rollout policy with the twice-as-accurate (but slow) RL policy Lusha, and did thousands of simulations with that, it did better than Foma. But only slightly better, and too slowly. So Foma is the winner of this competition, she has proved that she can’t be replaced.

Now that we have trained the policy and value functions, we can combine them with MCTS and give birth to our former world champion, destroyer of grand masters, the breakthrough of a generation, weighing two hundred and sixty eight pounds, one and only Alphaaaaa GO!

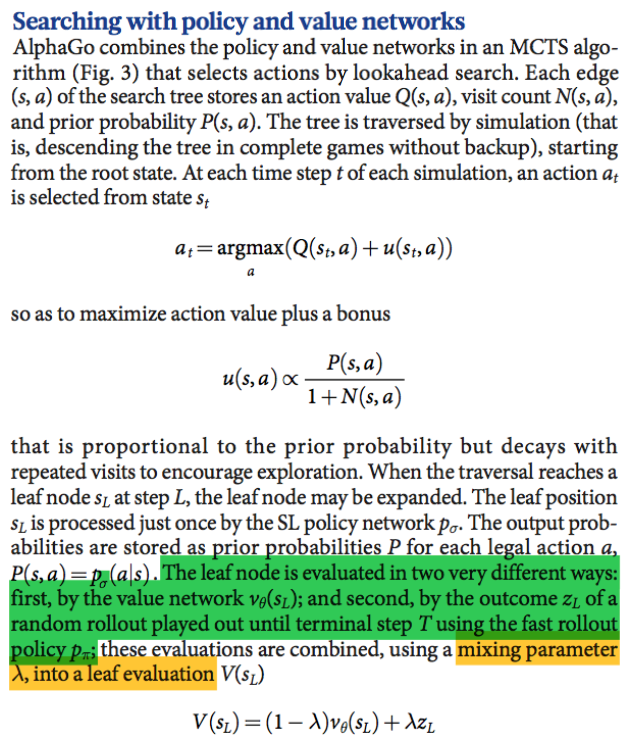

In this section, ideally you should have a slightly deeper understanding of the inner workings of the MCTS algorithm, but what you have learned so far should be enough to give you a good feel for what’s going on here. The only thing you should note is how we’re using the policy probabilities and value estimations. We combine them during roll outs, to narrow down the number of moves we want to roll out at each step. Q(s,a) represents the value function, and u(s,a) is a stored probability for that position. I’ll explain.

Remember that the policy network uses supervised learning to predict expert moves? And it doesn’t just give you most likely move, but rather gives you probabilities for each possible move that tell how likely it is to be an expert move. This probability can be stored for each of those actions. Here they call it “prior probability”, and they obviously use it while selecting which actions to explore. So basically, to decide whether or not to explore a particular move, you consider two things: First, by playing this move, how likely are you to win? Yes, we already have our “value network” to answer this first question. And the second question is, how likely is it that an expert would choose this move? (If a move is super unlikely to be chosen by an expert, why even waste time considering it. This we get from the policy network)

Then let’s talk about the “mixing parameter” (see came back to it!). As discussed earlier, to evaluate positions, you have two options: one, simply use the value network you have been using to evaluate states all along. And two, you can try to quickly play a rollout game with your current strategy (assuming the other player will play similarly), and see if you win or lose. We saw how the value function was better than doing rollouts in general. Here they combine both. You try giving each prediction 50–50 importance, or 40–60, or 0–100, and so on. If you attach a % of X to the first, you’ll have to attach 100-X to the second. That’s what this mixing parameter means. You’ll see these hit and trial results later in the paper.

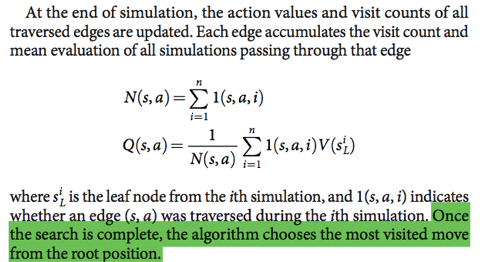

After each roll out, you update your search tree with whatever information you gained during the simulation, so that your next simulation is more intelligent. And at the end of all simulations, you just pick the best move.

Interesting insight here!

Remember how the RL fine-tuned policy NN was better than just the SL human-trained policy NN? But when you put them within the MCTS algorithm of AlphaGo, using the human trained NN proved to be a better choice than the fine-tuned NN. But in the case of the value function (which you would remember uses a strong player to approximate a perfect player), training Foma using the RL policy works better than training her with the SL policy.

“Doing all this evaluation takes a lot of computing power. We really had to bring out the big guns to be able to run these damn programs.”

Self explanatory.

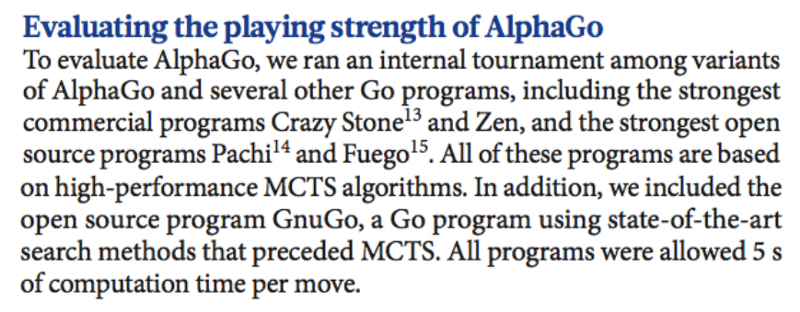

“LOL, our program literally blew the pants off of every other program that came before us”

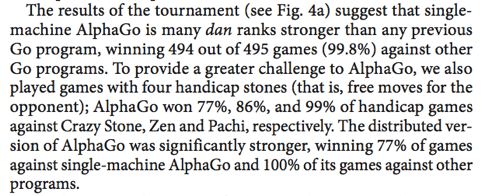

This goes back to that “mixing parameter” again. While evaluating positions, giving equal importance to both the value function and the rollouts performed better than just using one of them. The rest is self explanatory, and reveals an interesting insight!

Self explanatory.

Self explanatory. But read that red underlined sentence again. I hope you can see clearly now that this line right here is pretty much the summary of what this whole research project was all about.

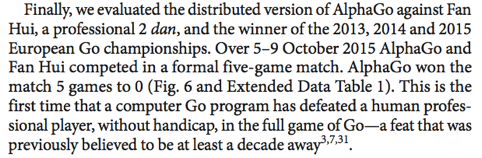

Concluding paragraph. “Let us brag a little more here because we deserve it!” :)

Oh and if you’re a scientist or tech company, and need some help in explaining your science to non-technical people for marketing, PR or training etc, I can help you. Drop me a message on Twitter: @mngrwl