By Lior Messinger

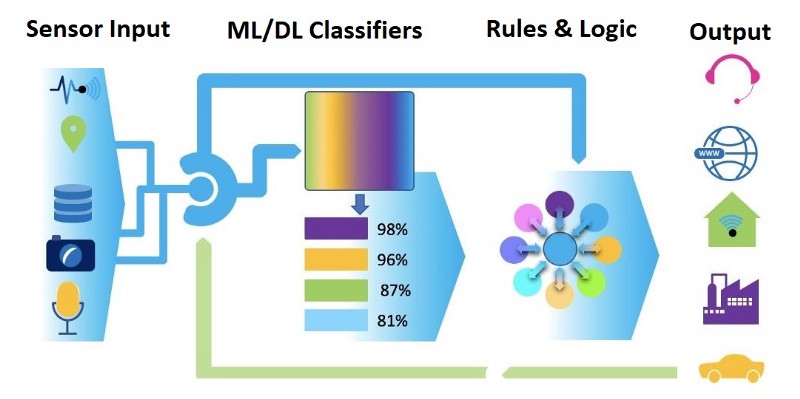

At their core, AI projects can be depicted as a simple pipeline of a few building blocks. The diagram above explains that pretty nicely: unstructured content, usually in huge amounts, comes in from the left and is fed into AI classifiers. These pre-trained machine-learning or deep-learning models separate the wheat from the chaff and reduce the input to a few numerical or string output values.

For example, megabytes of pixels and colors in an image are reduced to a label: this is a giraffe. Or a zebra. In audio, millions of wave frequencies produce a sentence through speech-to-text models. And in conversational AI, that sentence can be reduced further to a few strings representing the intent of the speaker and the entities in the sentence.

Once the input has been recognized, we need to act on it and generate some meaningful output. For example, in an autonomous car, recognizing another car that is too close should turn the wheel. A request to book a flight should produce some RESTful database queries and POST calls, and issue a confirmation, or a denial, to the user.

This last part, shown in the diagram as rule-based logic, is an inseparable part of any AI system, and there’s no change in sight to that. It has usually been done by coding, thousands and thousands of lines of code — if it’s a serious system — or a couple of scripts if it’s a toy chatbot.

Behavior Trees

A Behavior Tree is a programming paradigm that emerged in video games to create human-like behaviors in non-player characters. Behavior Trees form an excellent visual language with which a software architect, a junior developer and even a non-coding technical designer can all create complex scripts. In fact, since Behavior Trees (BTs) allow logic operations like AND and OR, loops and conditions, any program that can be written in code can be created with BTs.

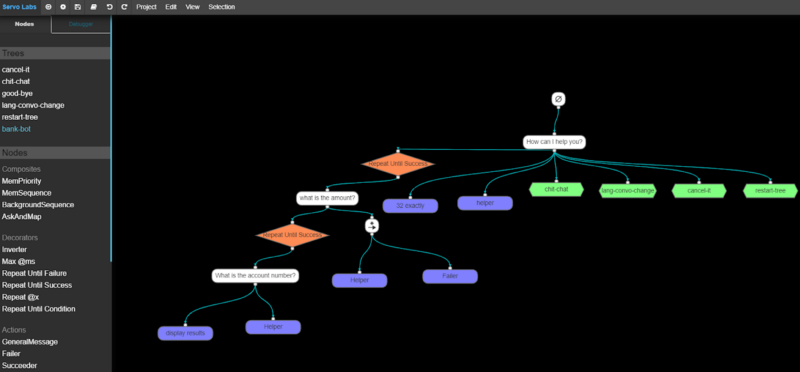
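
To make the AND/OR idea concrete, here is a minimal sketch of a behavior tree in plain JavaScript. It is not Behavior3's or Servo's API: the helper names (`condition`, `action`, `sequence`, `selector`) and the guard example are made up for illustration, and the RUNNING status that real behavior trees use is left out to keep it short.

```javascript
// Status codes returned by every node when it is ticked.
const SUCCESS = "SUCCESS";
const FAILURE = "FAILURE";

// Leaf nodes: a condition checks something, an action does something.
const condition = (predicate) => ({
  tick: (state) => (predicate(state) ? SUCCESS : FAILURE),
});
const action = (effect) => ({
  tick: (state) => {
    effect(state);
    return SUCCESS;
  },
});

// Sequence behaves like AND: it stops at the first child that fails.
const sequence = (...children) => ({
  tick: (state) =>
    children.every((child) => child.tick(state) === SUCCESS) ? SUCCESS : FAILURE,
});

// Selector (also known as Priority) behaves like OR: it stops at the first child that succeeds.
const selector = (...children) => ({
  tick: (state) =>
    children.some((child) => child.tick(state) === SUCCESS) ? SUCCESS : FAILURE,
});

// "If an enemy is visible, attack; otherwise patrol."
const guard = selector(
  sequence(
    condition((s) => s.enemyVisible),
    action((s) => s.log.push("attack"))
  ),
  action((s) => s.log.push("patrol"))
);

const state = { enemyVisible: false, log: [] };
guard.tick(state); // no enemy: the sequence fails, so the guard patrols
state.enemyVisible = true;
guard.tick(state); // enemy seen: the sequence succeeds
console.log(state.log); // [ 'patrol', 'attack' ]
```

The whole "program" is the nested `guard` expression, which is exactly the kind of structure a visual editor can draw as a tree.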

Servo is an open-source AI conversational framework built on top of a JavaScript behavior tree framework called Behavior3. It’s designed to do the needed orchestration of inputs and outputs for conversational AI systems. It’s what is called a “low-code” framework: you only need to code a little, and most of the tasks can be done in the visual editor.

It’s not your usual newbie's toy: it was designed to be extended using real-life, debuggable JS source files and classes, and abide by any team project-management methodologies. Moreover, it’s suitable for teams that grow in size, allowing the introduction and reuse of new modules through abstracted and decoupled sub-processes.

I’m the main developer of Servo. After 30-something years of coding, feeling the pain in long-delayed projects and watching legacy systems break under their own weight, I wanted to achieve maximum flexibility with minimum coding. Here I’m going to explain the magic that can be done when one combines Behavior Trees with NLU/NLP engines, using Servo and Wit.ai.

Any developer can benefit from this tutorial, but it’s best if you are a developer with experience in building chat- or voice-bots and have knowledge working with NLU/NLP engines like LUIS, Wit.AI, Lex (the Alexa engine), or Dialogflow. If you do not, it’s ok, but I’ll be covering some subjects a bit briefly.

If you want to learn about NLU and NLP engines, there are excellent resources all over the Internet — just search for ‘Wit tutorial’. If you want to learn how to build a heavyweight assistant, then just continue reading.

Getting Started with Servo

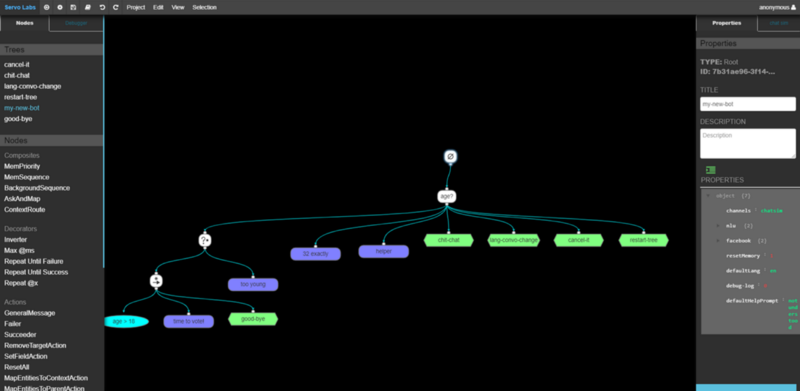

I won’t get into the details of installing Servo here; I’ll just say that getting started with Servo is really easy. You can read about it here. In essence, you clone the GitHub repo, npm-install it according to the readme, and run it locally. Then, every New Project starts you off with a small ready-made bot to get things going:

You can notice here the green hexagons named ‘chit-chat’, ‘cancel’ and others. By the end of this article, you’ll have a clear idea of what they are and how they work. But first, let’s tackle the first challenges.

Building An NLU Model

Let’s talk about building a banking assistant, and specifically, one that works for the money transfer department. Had it been a web application, we would have a form with a few fields, among which the amount and the transferee’s (also called the beneficiary’s) account are the most important. Let’s use these for this tutorial. When using NLU engines, we can still think of it as a form, with the fields now called entities (or slots, in Alexa lingo). The NLU engine also produces an intent, which can be viewed as the name of the form: it guides the assistant to the area of functionality the user is asking for.

We should train the NLU engine with a few sentences, such as:

- “I’d like to send some money”

- “I’d like to send $100”

- “Please transfer $490 to account #01–10099988”

And for these, we need to tell the engine to output the following:

- a TransferIntent intent for such sentences

- a wit/number for the amount

- an accountNumberEntity for the beneficiary account
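
To picture what we are asking the engine to produce, here is a toy stand-in written with regular expressions. It is only a sketch: `fakeNlu` is a made-up function, the result shape is an assumption rather than Wit's actual response format, and a real engine learns from training sentences instead of hard-coded patterns.

```javascript
// Toy stand-in for an NLU engine. A real engine such as Wit.ai is trained
// on examples; these regular expressions only imitate the expected output.
function fakeNlu(sentence) {
  const entities = {};

  // Account numbers: a '#' followed by digits, optionally split by a dash.
  const account = sentence.match(/#\d+(?:-\d+)?/);
  if (account) entities.accountNumberEntity = account[0];

  // Amounts: a '$' followed by digits.
  const amount = sentence.match(/\$(\d+)/);
  if (amount) entities.number = Number(amount[1]);

  // Anything that mentions sending or transferring counts as a transfer request.
  const intent = /\b(send|transfer|wire)\b/i.test(sentence) ? "TransferIntent" : null;

  return { intent, entities };
}

const result = fakeNlu("Please transfer $490 to account #01-10099988");
console.log(result.intent); // TransferIntent
console.log(result.entities); // { accountNumberEntity: '#01-10099988', number: 490 }
```

Note how the two numbers in the sentence end up under two different entity names, which is what lets the assistant tell them apart later.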

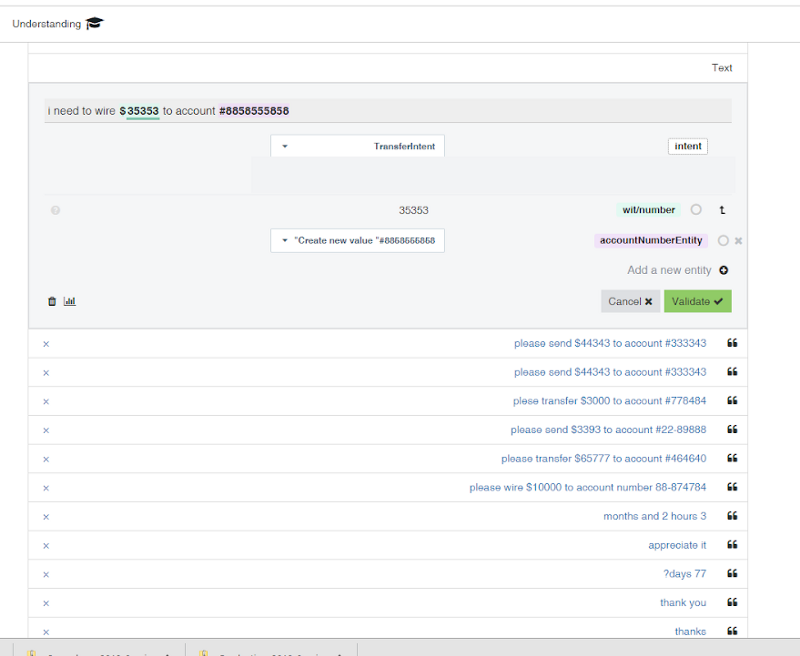

Let’s do that on Wit.ai. Again, I won’t get into a Wit tutorial, as there are plenty of guides. Servo comes with a general Wit model which you can take from Servo’s GitHub here. Then, open your own Wit app and import it.

I created a free-text entity for the account numbers (as account numbers might include other symbols), and a wit/number entity for the amount. I found composite entities work pretty well, too, although they need some training. For simplicity, I trained the model on account numbers made of a # followed by 8 digits.

In general, it’s always better to experiment with different entity models. In our case, we might get two numbers in the same sentence (account number and amount) and we need a way to tell them apart, so it’s best if it’s two different entity names. But you can try other types: AI is still a very empirical science…

We then trained it with a few sentences and let Wit build the bank-transfer model. For convenience, I added it here, and also set up the banking tutorial bot to come with the pre-loaded examples.

Last, we need to connect the NLU to the assistant. Go to Settings in Wit, and copy the access token. We need to paste it in our tree root’s properties. We do this by opening Servo’s editor, selecting the root, opening its properties, and pasting it under nlu. As you can see, Servo supports multi-language assistants and different NLU engines:

Start The Bank Assistant

Now, we can turn to Servo. We should construct a small tree with a question for each entity and intent.

As a reminder, the basic rules of Servo behavior trees are as follows:

- The main loop of the tree is executing the root continuously

- Each node executes its children

- An AskAndMap node (the “Age” node in the diagram above) outputs a question to the user and waits for an answer

- Once an answer comes in, the flow is routed to the appropriate child according to the intent and entities that the NLU engine gave it

Let’s first change the main, topmost question from “Age?” into “How can I help you?”. Also, let’s delete the first (that is, left-most) child and its nodes, as we are not going to use them anymore:

Why are we seeing the red dashes around the node? Hover over it and you’ll see the error:

Count of contexts number should be equal to the number of children

We will fix that in a minute.

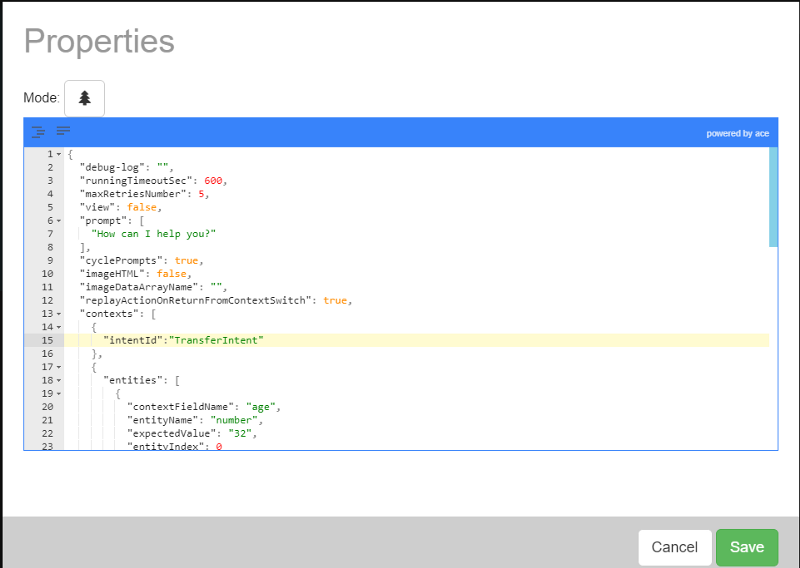

Now let’s build the transfer flow. We’ll assume that once the user says “I’d like to wire money”, we want to descend into the first, leftmost child. For that, we’ll select the “How can I help you” node and go into its properties. There, change the first context to have an intentId of “TransferIntent”:

This will cause any sentence that Wit determines to have a TransferIntent to be routed there.
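
Conceptually, the routing boils down to something like the sketch below. This is not Servo's code: `routeByIntent` is a hypothetical helper, and the only thing taken from the editor is the idea that each child has a context carrying an `intentId`.

```javascript
// Hypothetical shape: one context per child, in the same left-to-right order.
const contexts = [
  { intentId: "TransferIntent" }, // first (leftmost) child: the transfer flow
  { intentId: "WhoAreYouIntent" }, // another child, for example a chit-chat sub-tree
];

// Return the index of the child whose context matches the recognized intent,
// or -1 when no context matches.
function routeByIntent(contexts, nluResult) {
  return contexts.findIndex((ctx) => ctx.intentId === nluResult.intent);
}

console.log(routeByIntent(contexts, { intent: "TransferIntent" })); // 0
console.log(routeByIntent(contexts, { intent: "WeatherIntent" })); // -1
```

This also hints at why the editor insists on one context per child: the index of the matching context is the index of the child to descend into.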

Mapping an entity

Now, once the NLU has recognized our intent to transfer money, we should get all the different “fields”, or entities. Let’s add a node for the amount:

We added an AskAndMap node, and set its prompt to a question about the amount. We also changed its title — it’s always a good practice. Last, don’t forget to save your work using the Save button or Ctrl-S.

Notice also that the red warning has disappeared from the “How can I help you” node.

Last, let’s add a number entity to one of the child contexts of the Amount node, and map the value into a field called amount.

    "contexts": [
      {
        "entities": [
          {
            "contextFieldName": "amount",
            "entityName": "number",
            "expectedValue": "",
            "entityIndex": 0
          }
        ]
      }
    ]

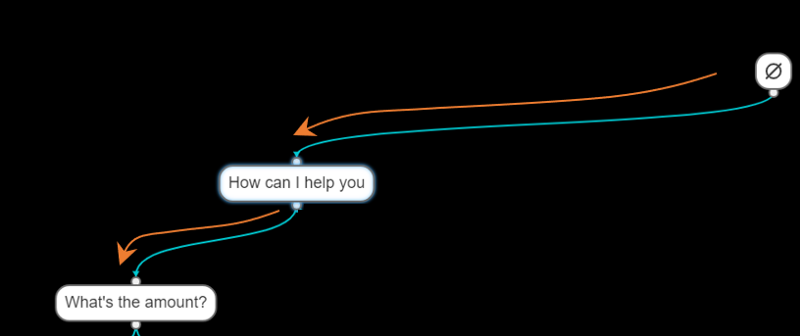

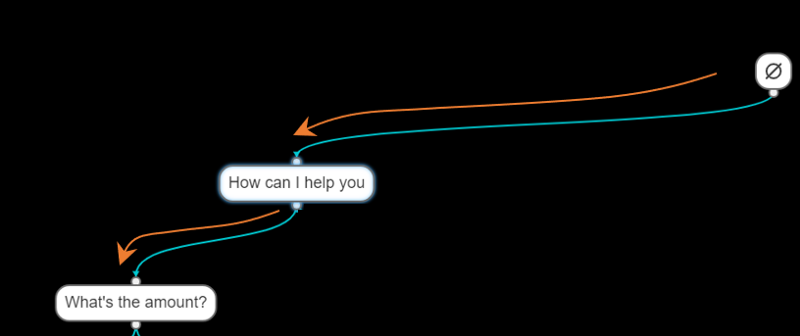

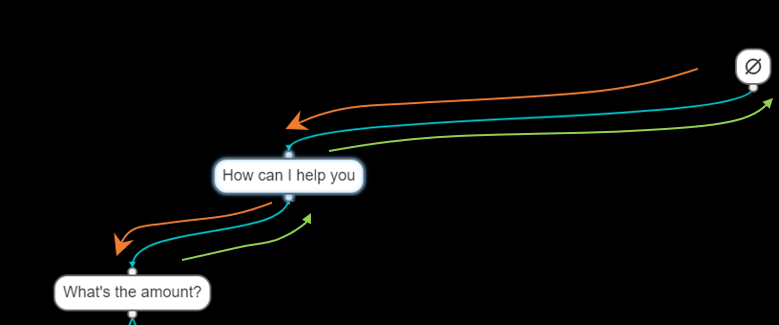
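
If you wonder what such a mapping amounts to, here is a rough sketch. The property names come from the fragment above, but `mapEntity` itself, the shape of the NLU entities, and my reading of `expectedValue` (empty means "accept anything") and `entityIndex` (which occurrence to take) are assumptions, not Servo's implementation.

```javascript
// The entity mapping from the contexts fragment above.
const mapping = {
  contextFieldName: "amount",
  entityName: "number",
  expectedValue: "",
  entityIndex: 0,
};

// Assumed NLU output shape: each entity name maps to a list of recognized values.
function mapEntity(mapping, nluEntities, context) {
  const values = nluEntities[mapping.entityName] || [];
  const value = values[mapping.entityIndex];
  if (value === undefined) return false; // the entity was not mentioned

  // An empty expectedValue is treated here as "accept any value".
  if (mapping.expectedValue !== "" && String(value) !== mapping.expectedValue) {
    return false;
  }

  context[mapping.contextFieldName] = value; // for example, context.amount = 100
  return true;
}

const context = {};
const mapped = mapEntity(mapping, { number: [100] }, context);
console.log(mapped, context); // true { amount: 100 }
```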

All this seems very simple, and it is: if a user says something like “I need to send some money”, they will be asked, “What is the amount?”. Once they enter the amount, the number will be extracted by the NLU and mapped to context.amount in Servo. We can then use it later on. Visually, the flow started from the root:

And the assistant would ask:

“How can I help you?”

If the user answered:

“I’d like to transfer some money”

the NLU engine would output a TransferIntent and the flow would continue downstream to the context it identified — the leftmost child — and ask the next question, about the amount:

But what if the user doesn’t enter an amount?

Building Helpers

AskAndMap nodes support another type of context child, called a Helper. This context is selected when the user answers something that can’t be mapped to any other context. Let’s add one to our “What’s the amount” AskAndMap:

    "contexts": [
      {
        "entities": [
          {
            "contextFieldName": "amount",
            "entityName": "number",
            "expectedValue": "",
            "entityIndex": 0
          }
        ]
      },
      {
        "helper": true
      }
    ]

Let’s now add a right-most child with a help message. Something like:

Of course, there can be only one helper context child for the AskAndMap.
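
Here is one way to think about the helper as a fallback. Again, this is a sketch under assumptions: `pickContext` is a made-up function and the entity shape is invented; only the `entities` and `helper` properties come from the contexts shown above.

```javascript
const contexts = [
  {
    entities: [
      { contextFieldName: "amount", entityName: "number", expectedValue: "", entityIndex: 0 },
    ],
  },
  { helper: true },
];

// Pick the first regular context whose entities were all recognized;
// otherwise fall back to the helper context. Returns the child index to run.
function pickContext(contexts, nluEntities) {
  const matched = contexts.findIndex(
    (ctx) => !ctx.helper && (ctx.entities || []).every((e) => e.entityName in nluEntities)
  );
  return matched !== -1 ? matched : contexts.findIndex((ctx) => ctx.helper === true);
}

console.log(pickContext(contexts, { number: [100] })); // 0: the amount was given
console.log(pickContext(contexts, {})); // 1: nothing usable, so run the helper
```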

One could imagine an example of the flow:

User: “I’d like to transfer some money”

Assistant: “What is the amount?”

User: “You think I’d know. But I’m not sure”

Assistant: “Please provide the amount to transfer”

That looks simple: obviously, the assistant didn’t understand the “You think I’d know. But I’m not sure” and went on with the helper message “Please provide the amount to transfer”.

But in fact, if you run the bot, you will get a surprising sentence after that last line:

User: “You think I’d know. But I’m not sure”

Assistant: “Please provide the amount to transfer”

Assistant: “How can I help you?”

What happened here? Where did the “How can I help you?” come from?

Here’s the flow. The helper node said its line and returned SUCCESS to its parent, the AskAndMap. This, in turn, returned SUCCESS too, and so on, until the root was reached. At that point, the whole tree was restarted, and we got the initial “How can I help you?” question.

So, to avoid that, we need to put a loop before the AskAndMap, so that it won’t return until it really succeeded. That is done with something called a decorator.

Adding a repeat decorator

Behavior Trees implement loops using decorators, which are nodes that have one parent and one child. Decorators are depicted as a rhombus ⧫; here we will use the RepeatUntilSuccess decorator to loop the AskAndMap until it completes successfully. Receiving a help message should not complete it, so we need to return a FAILURE after the help message. We do that by sequencing a Failer node right after the message. All in all, that’s the decoration we add to the AskAndMap construct:
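
In code terms, the idea can be sketched like this. It is a simplification and not Servo's implementation: the user's answers are scripted, the AskAndMap with its helper and Failer is collapsed into a single `askAmount` stand-in, the loop runs inside one tick instead of across several, and `maxTicks` is a safety cap I added for the sketch.

```javascript
const SUCCESS = "SUCCESS";
const FAILURE = "FAILURE";

// Scripted user answers stand in for a real conversation.
const answers = ["You think I'd know. But I'm not sure", "100"];
const transcript = [];
const context = {};

// Stand-in for the AskAndMap plus its children: a numeric answer is mapped
// to context.amount; anything else runs the helper message followed by a
// Failer, so the whole construct reports FAILURE.
const askAmount = {
  tick() {
    transcript.push("What is the amount?");
    const answer = answers.shift();
    if (/^\d+$/.test(answer)) {
      context.amount = Number(answer);
      return SUCCESS;
    }
    transcript.push("Please provide the amount to transfer"); // helper message
    return FAILURE; // the Failer node
  },
};

// Decorator: one child, ticked again and again until it succeeds.
const repeatUntilSuccess = (child, maxTicks = 10) => ({
  tick() {
    for (let i = 0; i < maxTicks; i++) {
      if (child.tick() === SUCCESS) return SUCCESS;
    }
    return FAILURE;
  },
});

const status = repeatUntilSuccess(askAmount).tick();
console.log(status, context.amount); // SUCCESS 100
console.log(transcript);
```

Without the Failer, the first (unhelpful) answer would already count as a success, and the decorator would have nothing to repeat.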

Now it’s time to add the next node, which maps the beneficiary account number. Again, it’s pretty straightforward: as before, we add an AskAndMap with the question for the account number and a map from accountNumberEntity to an accountNumber member on the context. We set it as a child of a RepeatUntilSuccess decorator, and add a helper child that explains what’s needed for this entity.

Then, we should add the actual business logic to do the transfer. This would probably mean several API calls with the entities collected. We will simulate this with a message: we are going to transfer $X to account #Y. For that, you need to drag in a GeneralMessage as the first child of the accountNumberEntity, and set its properties as follows:

    "debug-log": "",
    "runningTimeoutSec": 600,
    "maxRetriesNumber": 5,
    "replayActionOnReturnFromContextSwitch": true,
    "view": false,
    "prompt": [
      "About to transfer <%=context.amount%> to account <%=context.accountNumber%>"
    ],
    …
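
The `<%= … %>` placeholders in the prompt are filled in from the context when the message is sent. A minimal sketch of that substitution, assuming only simple `context.<field>` expressions (`renderPrompt` is a made-up helper, not the template engine Servo actually uses):

```javascript
// Replace every <%= context.field %> placeholder with the matching
// value from the given context object.
function renderPrompt(template, context) {
  return template.replace(/<%=\s*context\.(\w+)\s*%>/g, (_, field) =>
    String(context[field])
  );
}

const prompt = "About to transfer <%=context.amount%> to account <%=context.accountNumber%>";
console.log(renderPrompt(prompt, { amount: 3400, accountNumber: "#87654321" }));
// About to transfer 3400 to account #87654321
```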

This is what the tree looks like now:

The tree comes with Servo. Its files are under server/convocode/anonymous/drafts/bank-bot.

Running and testing

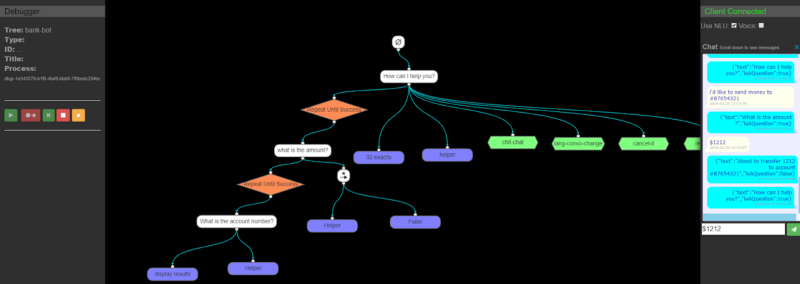

Let’s test the bot and see what happens with various inputs. Click on the Debugger tab, then the play button ▶️. The simulator will pop up on the right-hand side:

You can enter a sentence like:

I’d like to send money.

That would be answered, as expected, with

“What is the amount?”

And you can put in the amount, and continue.

But what if we say

I’d like to send $14141??

Test it, and you’ll see how the assistant nicely jumps over the amount question straight to the account number:

“What is the account number?”

Now, let’s make its life even harder:

I’d like to send money to account #87654321

Nicely enough, it asks only for the amount. If you then enter $3400, it skips the account number question (since it already knows the answer) and goes straight to the final confirmation sentence:

About to transfer 3400 to account #87654321.

How does it know to do all that magic?

The Context Flow

Servo comes equipped with a powerful context-recognition set of algorithms that helps it do all that. What happened here shows a bit of it. Let’s take the last example. After the assistant asked:

“How can I help you?”

And the user answered:

I’d like to send money to account #87654321

The NLU engine output a TransferIntent and the flow continued downstream to the next question, about the amount:

But the NLU also returned an accountNumberEntity! So before descending, this entity is saved on the ‘How can I help you’ context. And, every AskAndMap defines its own context.

That’s actually an important remark, so I’ll repeat it: every AskAndMap defines its own context.

At any point in the flow, when an entity is mentioned, Servo searches back (read: upwards) in the conversation to find it. If it doesn’t find it, it asks for it.

So after the amount is entered, once we continue to the account number node, Servo finds that the accountNumberEntity was already mentioned, and uses it.

By the way, a process of similar characteristics happens also when we get to the last confirming GeneralMessage node. Its prompt reads:

About to transfer <%=context.amount%> to account <%=context.accountNumber%>

To resolve that, Servo searches up the context tree to find the needed entities, or context members.

Does this remind you of something? Folks familiar with JavaScript prototypal inheritance will see that it basically uses the same design. In Servo, we implemented it ourselves since we need more control over the variables. But it’s always interesting to see how object-oriented concepts are actually applied to real-life, natural conversations.
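
To see the analogy, here is the same lookup expressed with plain JavaScript prototypes. The three contexts are chained linearly purely for illustration; Servo keeps its own context structure, and the variable names are made up.

```javascript
// Each AskAndMap gets its own context; here a child context inherits from its parent.
const helpContext = {}; // the "How can I help you" node
const amountContext = Object.create(helpContext); // the amount question
const accountContext = Object.create(amountContext); // the account-number question

// "I'd like to send money to account #87654321": the entity arrives early,
// so it is stored on the context of the question being answered at that moment.
helpContext.accountNumber = "#87654321";

// Later, the user answers the amount question.
amountContext.amount = 3400;

// Lookups walk up the prototype chain, just like the upward context search.
console.log(accountContext.accountNumber); // #87654321 (found two levels up)
console.log(accountContext.amount); // 3400 (found one level up)

// The value was never copied down: the lowest context has no field of its own.
console.log(Object.prototype.hasOwnProperty.call(accountContext, "accountNumber")); // false
```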

But what if the user asks something much more unrelated, like:

“How much money do I have in my account?”

Or more so

“Who are you, for heaven’s sake??”

To which the bot responds:

“I’m an artificial intelligence assistant built by Servo Labs.”

Whaaaaaaaaaat?? Where did this come from?

Context and sub-trees

Almost all of the structural designs architects use to build manageable large systems can be divided into one of two categories:

- Reuse

- Modularize

If Servo is to stand as the infrastructure of large AI systems, it must provide some mechanism for allowing developers to achieve these goals. And that’s where sub-trees come into play.

We mentioned before the green hexagons:

This is a sub-tree. Double-click it, and you’ll enter a new tree, with that name. To create a new sub-tree, hover over the Trees on the left pane and select New:

A tree with a unique GUID name would appear. Change its name to something meaningful, and build it using any node from the left pane. Once built, you can drag, drop and connect it at any point in any other tree (including itself, by the way, but be very careful about that). Since sub-trees can have many leaf nodes, you can connect them only as leaves, too.

What happened when the user asked the assistant “Who are you”?

First, the NLU, already trained for such questions, returned a WhoAreYouIntent. Then context search was activated. If the conversation was somewhere down in the middle of a transfer conversation, the search went upwards, trying to find a context with WhoAreYouIntent. This context is found: it sits on the 4th context, in the How can I help you node. The flow then was redirected there, meaning, that route was made the active route. The flow here continued downstream into the chit-chat subtree, answered the question, returned up with a SUCCESS and the routing was returned to its previous context, the transfer one.
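
A stripped-down sketch of that upward search might look like the following. The node objects and `findHandler` are hypothetical and much simpler than what Servo does (there is no redirect or return of the flow here), but they show the direction of the search.

```javascript
// Hypothetical tree fragment: each AskAndMap knows its parent and the
// intents that its own contexts can handle.
const helpNode = {
  title: "How can I help you",
  parent: null,
  intents: ["TransferIntent", "WhoAreYouIntent"],
};
const amountNode = { title: "What is the amount", parent: helpNode, intents: [] };

// Walk up from the active node until some ancestor has a context for the intent.
function findHandler(activeNode, intent) {
  for (let node = activeNode; node !== null; node = node.parent) {
    if (node.intents.includes(intent)) return node;
  }
  return null; // nobody up the chain handles this intent
}

const handler = findHandler(amountNode, "WhoAreYouIntent");
console.log(handler.title); // How can I help you
```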

Here we learned something actually very important. The conversation flows down, but context is searched up. Never forget that:

Connecting to a messenger client

Until now, we have used the internal simulator and debugger as our messaging client. Let’s connect our small assistant to a real Facebook Messenger. There is one important change to make in the root properties of our tree, and that is to change the channel name from the default channel “chatsim” to “facebook”:

"channels":"facebook"

On the Facebook side, these are the main high-level steps that one needs to take:

- Open a Facebook page under your Facebook account

- Create a new Facebook app in the Facebook developer center

- Add Messenger functionality to your app

- Subscribe the app to listen to events in the page

- Set the assistant callback address as the webhook to post to. Servo always publishes its bot with the format of

/entry//

So for a bank-bot assistant, running on www.mydomain.com, the address would be:

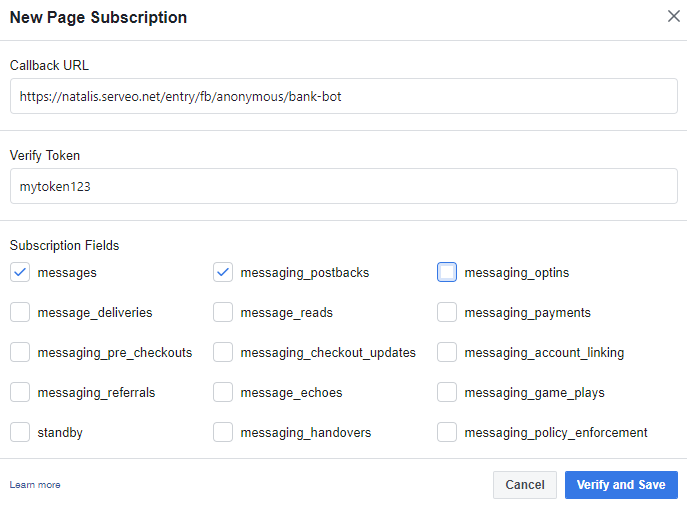

You should set it in the page subscription section of the Facebook app, at the developer’s portal. You need to select at least messages and messaging_postbacks, and to match the verify token with the validation token you set in the bot’s root properties:

By the way, https://serveo.net is a great tunneling system (another alternative is ngrok), if you are developing your assistants, like me, on localhost.

In the assistant’s root properties, set the same verify token and republish it:

    "facebook": {
      "validationToken": "mytoken123",
      "accessToken": "<token here>"
    },
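
Why must the two tokens match? When you register the webhook, Facebook sends a GET request carrying `hub.mode`, `hub.verify_token` and `hub.challenge` parameters, and expects the challenge to be echoed back only if the token is the one you configured. Servo takes care of this for you; the sketch below, with a made-up `verifyWebhook` function and a made-up challenge value, only illustrates the check.

```javascript
// Decide how to answer Facebook's webhook verification GET request.
// `query` holds the parsed query-string parameters of that request.
function verifyWebhook(query, validationToken) {
  const ok =
    query["hub.mode"] === "subscribe" &&
    query["hub.verify_token"] === validationToken;
  return ok
    ? { status: 200, body: query["hub.challenge"] } // echo the challenge back
    : { status: 403, body: "Forbidden" };
}

// Parse an example query string the way an HTTP server would.
const query = Object.fromEntries(
  new URLSearchParams("hub.mode=subscribe&hub.verify_token=mytoken123&hub.challenge=1158201444")
);

console.log(verifyWebhook(query, "mytoken123")); // { status: 200, body: '1158201444' }
console.log(verifyWebhook(query, "some-other-token").status); // 403
```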

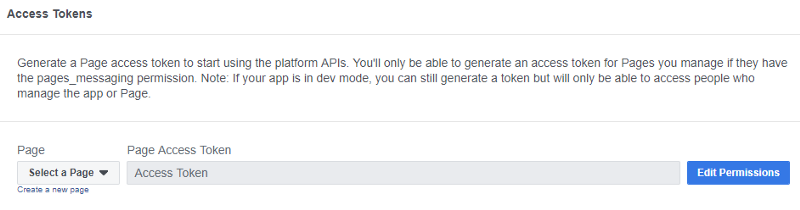

The access token should be set too, taken from Facebook’s messenger area:

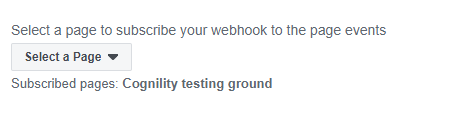

Last, select a page to subscribe your webhook to the page events:

and… once you connect all these ends, you should have, at last, a full, orchestrated, end-to-end conversational AI system!

Connecting Backends

Real-life connections vary, but luckily, most of them these days are made through RESTful APIs. For these, check out the documentation on RetrieveJSONAction and PostAction. Once data is retrieved, or a response is received, it is set into a memory field (context/global/volatile). You will probably want to query it. This is done using ArrayQueryAction, which implements an in-memory Mongo-like query language. For direct MongoDB queries, use the MongoQuery action.
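
To give a feel for what a “Mongo-like” query over in-memory data means, here is a tiny sketch. It is not ArrayQueryAction's API or implementation: `arrayQuery` is a made-up function that supports only equality plus `$gt` and `$lt`, and the transfer records are invented sample data.

```javascript
// Return the items that satisfy every field condition in the query.
// Supported here: plain equality plus the $gt and $lt comparison operators.
function arrayQuery(items, query) {
  const matches = (value, cond) => {
    if (cond === null || typeof cond !== "object") return value === cond;
    return Object.entries(cond).every(([op, operand]) => {
      if (op === "$gt") return value > operand;
      if (op === "$lt") return value < operand;
      throw new Error(`Unsupported operator: ${op}`);
    });
  };
  return items.filter((item) =>
    Object.entries(query).every(([field, cond]) => matches(item[field], cond))
  );
}

// Hypothetical data, as it might look after a REST call stored it in memory.
const transfers = [
  { id: 1, currency: "USD", amount: 100 },
  { id: 2, currency: "USD", amount: 3400 },
  { id: 3, currency: "EUR", amount: 490 },
];

console.log(arrayQuery(transfers, { currency: "USD", amount: { $gt: 500 } }));
// [ { id: 2, currency: 'USD', amount: 3400 } ]
```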

In Summary

Servo is an open-source IDE and framework that uses a context-recognition search to place the user in the right conversation and output the right questions. We learned how to construct a simple conversation, and how to wrap such conversations in sub-trees for decoupling and re-use. Servo has many other features that are worth exploring, among which you will find:

- Connectors to Facebook, Alexa, Twilio and Angular

- Connectors to MongoDB, Couchbase and LokiJS databases

- Harness for automated conversation testing

- A Conversation debugger

- More actions, conditions and decorators

- Flow control mechanisms

- Field assignment and compare

- Context manipulation

- Validation

- In-memory mongo-like queries

- And any customized action you come up with

Feel free to check it out and ask questions on the GitHub forum or at my @lmessinger GitHub name. Enjoy!