by Ty Irvine

How to List Out All URLs Associated With a Website Fast-ish

So you need a list containing all the URLs for a website? Are you doing some redirects perhaps? Hit the limit on XML Sitemaps? Cool, me too. I’ve got just the tool for you that’ll get it done at about the same speed as XML Sitemaps, but you’ll look way cooler doing it.

Where the tutorial actually starts

To get your list of URLs, we’re going to use Wget!

What the Frigg is Wget?

“Wget is a free software package for retrieving files using HTTP, HTTPS, and FTP, the most widely-used Internet protocols.” — Brew Formulas

And you can also use it to request a big list of URLs associated with a domain.

1. Installing Wget

To install Wget, you’ll first need to install Homebrew, aka Brew, if you haven’t already. Brew is a package manager, meaning it installs software for you and manages it. You can check out the instructions on their website or just follow the ones below.

Install Brew

Paste this into a Terminal Prompt and hit enter twice ⮐ (It may ask you for a password.)

/usr/bin/ruby -e "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/master/install)"

Install Wget

Now that you have Brew installed it’s time to install Wget. Paste this into a Terminal Prompt and hit enter ⮐

brew install wget

2. Time To Get ‘Dem URLs
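Before downloading anything, it doesn't hurt to confirm the install actually worked. Here's a minimal sketch of that check; `have_cmd` is a made-up helper name for illustration, not something that ships with Brew or Wget.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only if the named command is on your PATH.
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

if have_cmd wget; then
  echo "wget found at: $(command -v wget)"
else
  echo "wget not found; try 'brew install wget' again" >&2
fi
```

If it prints a path, you're good to go.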

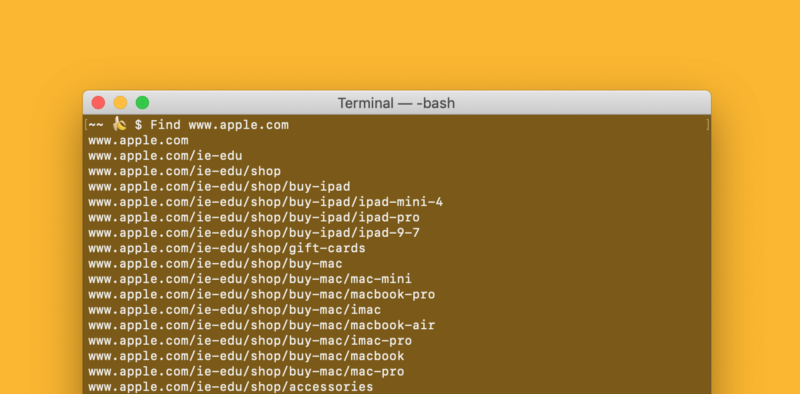

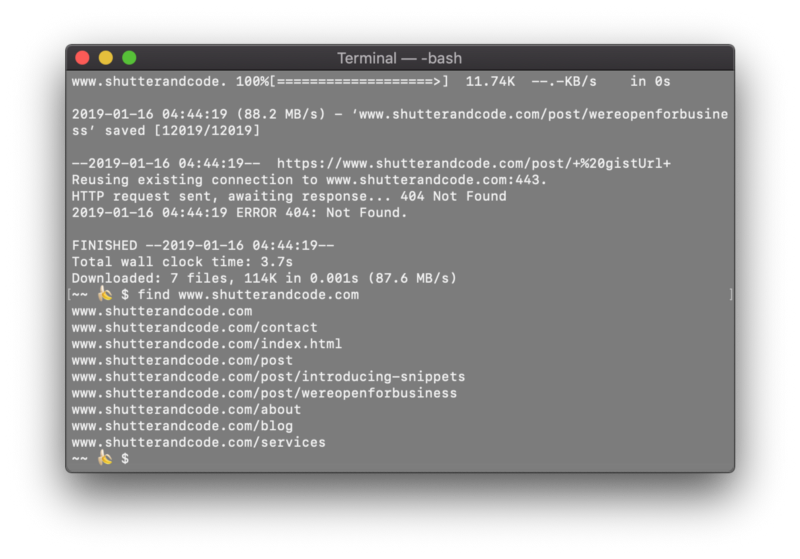

Now with Wget installed we simply download the website and then display all of its URLs. Start by downloading the website you’d like with

wget -r www.shutterandcode.com

Then once the download is complete we’ll list out the URLs with

find www.shutterandcode.com

(Make sure to use the same website domain as what was downloaded.)
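One thing to know: the listing you get back is local file paths (directories included), not full URLs. If you'd rather have something you can paste straight into a redirect sheet, here's a rough sketch that keeps only the files and sticks a scheme on the front. The `list_urls` name and the `http` default are my assumptions, so swap in `https` if that's what the site uses. Also note that saved filenames like `index.html` may not match the original URL exactly.

```shell
#!/bin/sh
# Hypothetical helper: print the files saved by `wget -r` as URLs.
#   $1 = the folder wget created (named after the host, no leading ./ or trailing /)
#   $2 = scheme to prepend (assumption: defaults to http)
list_urls() {
  site_dir=$1
  scheme=${2:-http}
  # -type f skips directories, which a plain `find` would also print
  find "$site_dir" -type f | sed "s|^|$scheme://|" | sort
}

# Usage:
#   list_urls www.shutterandcode.com https > urls.txt
```

Run it from the same folder where you ran the download.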

Conclusion

After a series of casual tests pitting Wget against XML Sitemaps using smaller websites, I found that they are both pretty much on par with each other. Occasionally one would be faster than the other but overall they both had similar speeds.

If you’d like to know more about Wget commands simply type this into your prompt

wget --help

I hope you enjoyed reading this! Don’t forget to like, comment, and subscribe!

p.s. don’t actually feel obligated to like, comment, and/or subscribe; it’s simply a joke about YouTubers :)

UPDATE: if you don’t want the site to actually download to your computer, add ‘--spider’ after ‘wget’ like

wget -r --spider www.example.com

Check out the original post and the rest of the Snippets! series at
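A note on the `--spider` variant: since nothing gets saved to disk, there's no folder to list afterwards, so the URLs have to come out of Wget's own log instead. Here's a minimal sketch of that. It assumes each request shows up in the log as a line starting with `--` and a timestamp, with the URL as the third field; `extract_urls` is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: pull requested URLs out of a wget log read on stdin.
# Assumes request lines look like:
#   --2024-01-01 12:00:00--  http://www.example.com/page
extract_urls() {
  # keep lines starting with "--<digit>", print the 3rd field (the URL), de-duplicate
  awk '/^--[0-9]/ { print $3 }' | sort -u
}

# Usage (wget writes its log to stderr, hence the 2>&1):
#   wget -r --spider www.example.com 2>&1 | extract_urls > urls.txt
```

The `sort -u` is there because the same URL can appear in the log more than once.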