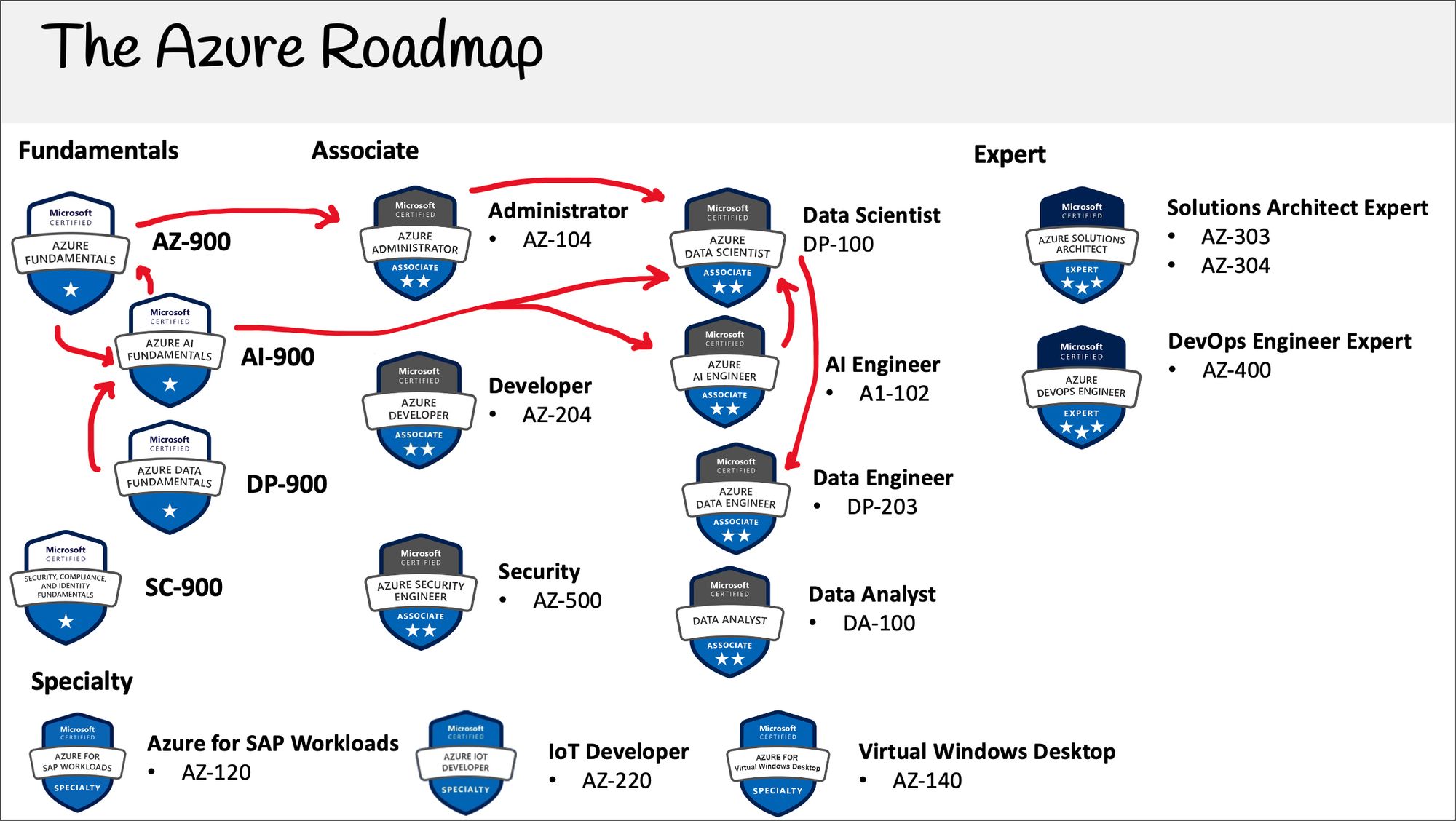

The Azure AI Fundamentals Certification is for those seeking a Machine Learning role such as AI Engineer or Data Scientist.

The certification has recently been updated for 2024 and I've released an updated course to teach you how to pass the exam.

In the last decade companies have been collecting vast amount of data surrounding their service and product offerings.

The successful companies of the 2010s were data-driven companies who knew how to collect, transform, store and analyze their vast amount of data.

The successful companies of the 2020s will be ml-driven who how know how to leverage deep learning and create AI product and service offerings.

Overview of the Azure AI Fundamentals

The Azure AI Fundamentals covers the following:

- Azure Cognitive Services

- AI Concepts

- Knowledge Mining

- Responsible AI

- Basics of ML pipelines and MLOps

- Classical ML models

- AutoML and Azure ML Studio

How do you get the Azure AI Fundamentals certification?

You can get the certification by paying the exam fee and sitting for the exam at a test center partnered with Microsoft Azure.

Microsoft Azure is partnered with Pearson Vue and PSI Online who have a network of test centers around the world. They provide both in-person and online exams. If you have the opportunity, I recommend that you take the exam in-person.

Microsoft has a portal page on Pearsue Vue where you can register and book your exam.

That exam fee is $99 USD.

Can I simply watch the videos and pass the exam?

For a fundamental certification like the AI-900 you can pass by just watching the video content without exploring hands-on with the Azure services on your own

Azure has a much higher frequency of updates than other cloud service providers. Sometimes there are new updates every month to a certification however, the AI-900 is not hands-on focused, so study courses are less prone to becoming stale.

- The exam has 40 to 60 questions with a timeline of 60 minutes.

- The exam contains many different question types.

- A passing grade is around 70%.

The Free Azure AI Fundamentals Video Course

Just like my other cloud certification courses published on freeCodeCamp, this course will remain free forever.

The course contains study strategies, lectures, follow-alongs, and cheatsheets, and it's designed to be a complete end-to-end course.

Head on over to freeCodeCamp's YouTube channel to start working through the full 4-hour course.