Would you like to talk to a chatbot that speaks like your favorite character, fictional or non-fictional? Let's build one!

In case you've seen my previous tutorial on this topic, stick with me as this version features lots of updates.

You can follow along with this tutorial using the code on my GitHub:

If you want, you can dive right into my video tutorial on YouTube – or read on for more details. 😎

What to Expect from this Tutorial

Here is an example of the Discord AI chatbot that we will have built by the end of this tutorial.

My chatbot project started as a joke with a friend when we were playing video games.

I'm honestly surprised by how popular it became – my previous tutorial got 5.9k views, and when I deployed my bot to a 1k+ user server, people flooded it with 300+ messages in an hour, effectively crashing the bot. 😳 You can read more about my deployment post-mortem in this post.

Since a lot of people are interested in building their own bots based on their favorite characters, I updated my tutorial to include an in-depth explanation on how to gather text data for any character, fictional or non-fictional.

You may also create a custom dataset that captures the speech between you and your friends and build a chatbot that speaks like yourself!

Other updates in this tutorial address changes in Hugging Face's model hosting services, including API changes that affect how we push the model to Hugging Face's model repositories.

Outline of this Tutorial

The video version of this tutorial runs for a total of one hour and features the following topics:

- Gather text data for your character using one of these two methods: find pre-made datasets on Kaggle or make custom datasets from raw transcripts.

- Train the model in Google Colab, a cloud-based Jupyter Notebook environment with free GPUs.

- Deploy the model to Hugging Face, an AI model hosting service.

- Build a Discord bot in either Python or JavaScript, your choice! 🤩

- Set up the Discord bot's permissions so it doesn't spam non-bot channels.

- Host the bot on Repl.it.

- Keep the bot running indefinitely with Uptime Robot.

To learn more about how to build Discord bots, you may also find these two freeCodeCamp posts useful – there's a Python version and a JavaScript version.

How to Prepare the Data

For our chatbot to learn to converse, we need text data in the form of dialogues. The model learns from these dialogues how to respond in different exchanges and contexts.

Is Your Favorite Character on Kaggle?

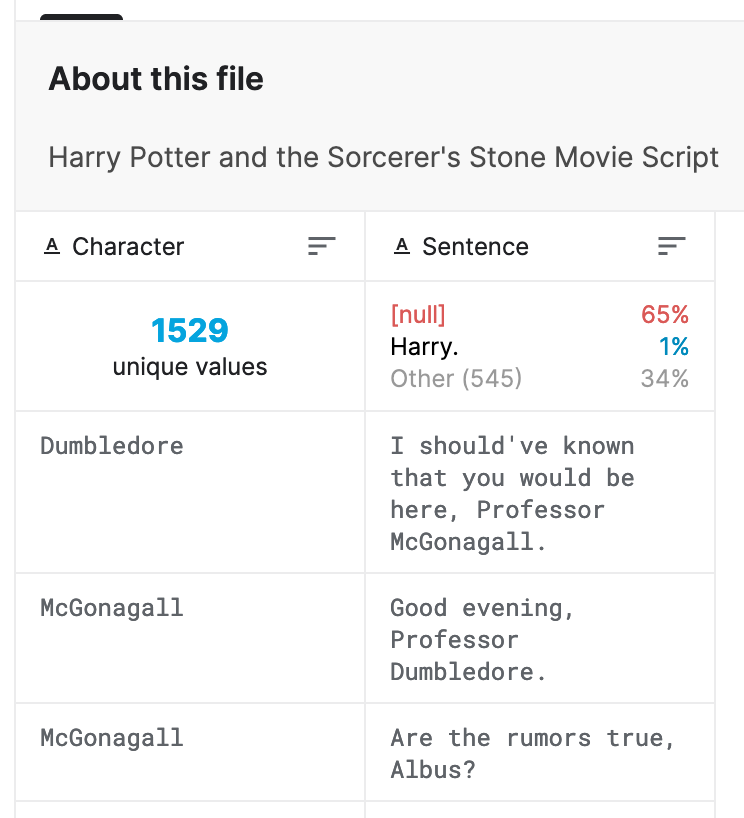

There are a lot of interesting datasets on Kaggle for popular cartoons, TV shows, and other media. For example:

We only need two columns from these datasets: character name and dialogue line.

Can't Find Your Favorite Character on Kaggle?

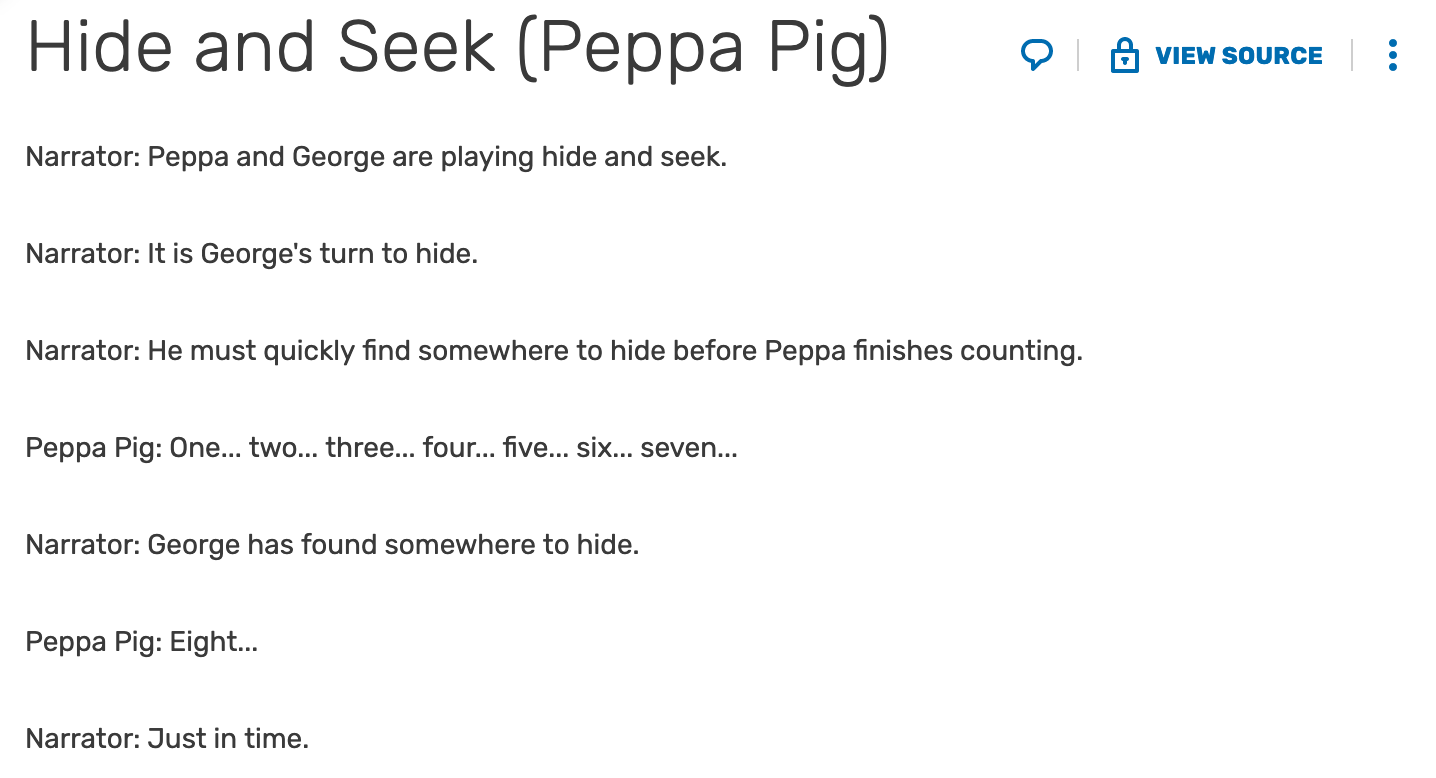

Can't find your favorite character on Kaggle? No worries. We can create datasets from raw transcripts. A great place to look for transcripts is Transcript Wiki. For example, check out this Peppa Pig transcript.

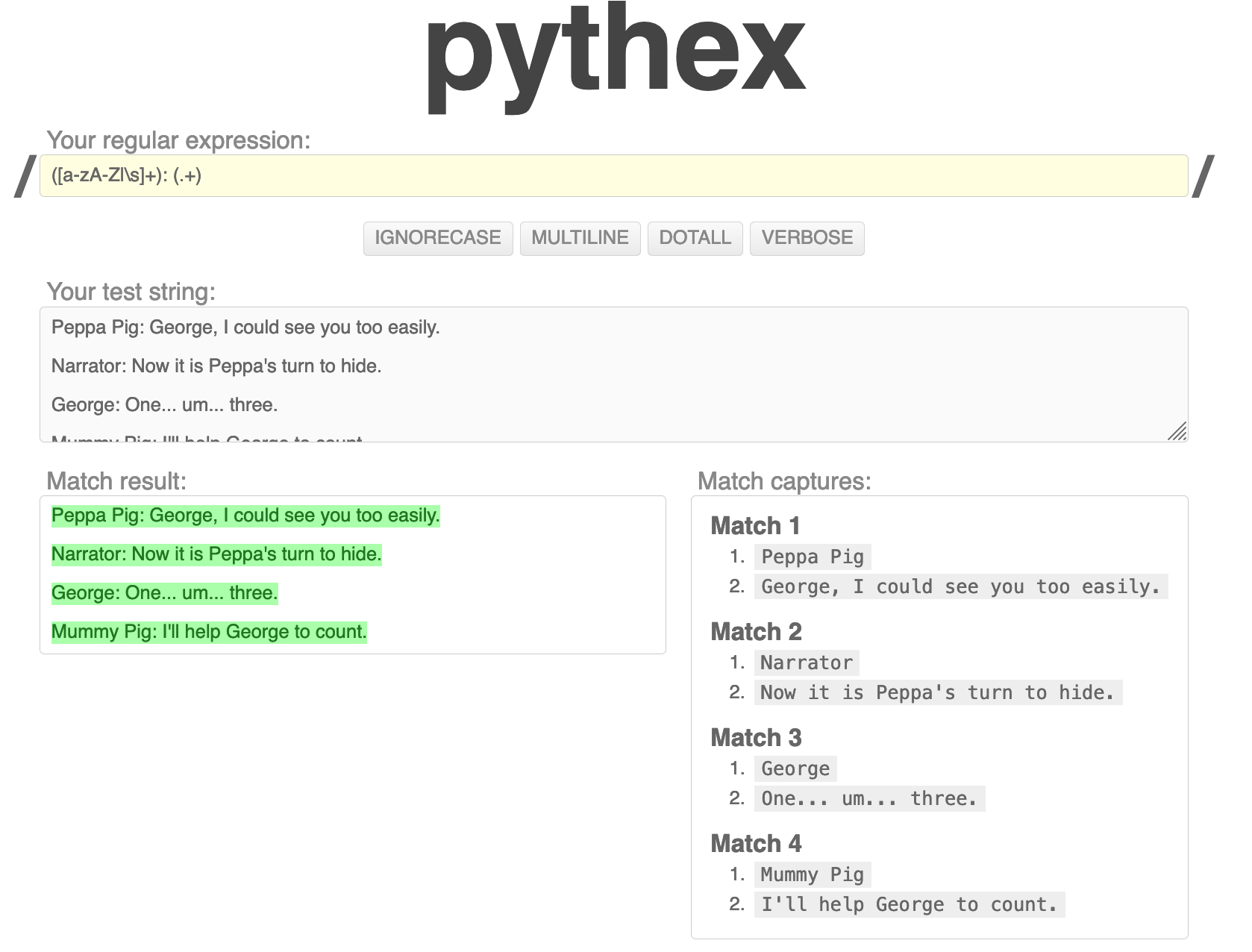

Using a regular expression like ([a-zA-Z\s]+): (.+), we can extract the two columns of interest: character name and dialogue line. (Inside a character class, a | matches a literal pipe rather than meaning "or", so it is left out here.)

Try it out on this Python regex website yourself!
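If you'd rather try it in code, here is a minimal sketch of the extraction step. It uses the pattern above without the `|` (inside a character class, `|` matches a literal pipe rather than acting as "or"). The function name `parse_transcript` and the sample lines are made up for illustration, and real transcripts may need a looser pattern, for example for names with apostrophes or digits.

```python
import re

# Matches "Name: dialogue line". Letters and whitespace form the name;
# everything after the first ": " is treated as the dialogue line.
LINE_PATTERN = re.compile(r"([a-zA-Z\s]+): (.+)")


def parse_transcript(text):
    """Return a list of (character name, dialogue line) pairs."""
    pairs = []
    for raw_line in text.splitlines():
        match = LINE_PATTERN.fullmatch(raw_line.strip())
        if match:  # stage directions and blank lines simply do not match
            pairs.append((match.group(1).strip(), match.group(2).strip()))
    return pairs


# Made-up sample lines, for illustration only.
sample = """Narrator: It is a sunny day.
[Peppa jumps in a puddle.]
Peppa Pig: I love muddy puddles!
"""

pairs = parse_transcript(sample)
for name, line in pairs:
    print(f"{name} -> {line}")
```

Lines that don't look like "Name: text", such as stage directions in brackets, are skipped rather than raising an error, which tends to be what we want for messy wiki transcripts.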

How to Train the Model

Under the hood, our model will be a Generative Pre-trained Transformer (GPT), the most popular type of language model these days.

Instead of training from scratch, we will load Microsoft's pre-trained GPT, DialoGPT-small, and fine-tune it using our dataset.

My GitHub repo for this tutorial contains a notebook file named model_train_upload_workflow.ipynb to get you started. All you need to do is the following (please refer to the video for a detailed walkthrough):

- Upload the file to Google Colab.

- Select GPU as the runtime, which will speed up our model training.

- Change the dataset and the target character in code snippets like:

data = pd.read_csv('MY-DATASET.csv')

CHARACTER_NAME = 'MY-CHARACTER'

Running through the training section of the notebook should take less than half an hour. My dataset has about 700 lines, and training takes less than ten minutes. The model will be stored in a folder named output-small.
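To give a rough picture of why the notebook needs to know the target character, here is a sketch of one common way to turn a transcript into training rows for a dialogue model: each line spoken by the character becomes a response, paired with the few lines that came before it as context. This is an assumption about the general approach, not a copy of the notebook's code; the function name `build_context_rows`, the parameter `n_context`, and the sample dialogue are all hypothetical.

```python
def build_context_rows(dialogue, character_name, n_context=3):
    """Pair each line spoken by `character_name` with the lines before it.

    `dialogue` is a list of (name, line) tuples in transcript order.
    Each returned row is [response, previous line, line before that, ...].
    """
    rows = []
    # Start at n_context so every response has a full context window.
    for i in range(n_context, len(dialogue)):
        name, line = dialogue[i]
        if name != character_name:
            continue
        # Walk backwards so the most recent context line comes first.
        context = [dialogue[j][1] for j in range(i - 1, i - 1 - n_context, -1)]
        rows.append([line] + context)
    return rows


# Made-up dialogue, for illustration only.
dialogue = [
    ("Narrator", "It is raining today."),
    ("Peppa Pig", "Mummy, can we go outside?"),
    ("Mummy Pig", "Only when the rain stops."),
    ("Peppa Pig", "Look, it stopped!"),
]

rows = build_context_rows(dialogue, "Peppa Pig", n_context=2)
print(rows)
```

Only the target character's lines become responses, so the model learns to answer the way that character does, while everyone else's lines still serve as context.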

Want an even smarter and more eloquent model? Feel free to train a larger model like DialoGPT-medium or even DialoGPT-large. Model size here refers to the number of parameters in the model. More parameters will allow the model to pick up more complexity from the dataset.

You may also increase the number of training epochs by searching for num_train_epochs in the notebook. This is the number of times that the model will cycle through the training dataset. The model will generally get smarter when it has more exposure to the dataset.

However, do take care not to overfit the model: If the model is trained for too many epochs, it may memorize the dataset and recite back lines from the dataset when we try to converse with it. This isn't ideal as we want the conversation to be more organic.

How to Host the Model

We will host the model on Hugging Face, which provides a free API for us to query the model.

Sign up for Hugging Face and create a new model repository by clicking on New model. Obtain your API token by going to Edit profile > API Tokens. We will need this token when we build the Discord bot.

Follow along with this section in my video to push the model. Also, remember to tag it as conversational in its Model Card (equivalently its README.md):

---

tags:

- conversational

---

# My Awesome Model

You will know that everything works fine if you are able to chat with the model in the browser.
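Once the model chats in the browser, the bot can query it over HTTP in much the same way. Below is a minimal sketch using only the Python standard library. The endpoint URL pattern and the `{"inputs": {"text": ...}}` payload shape are assumptions based on the hosted Inference API for conversational models at the time of writing, and they may have changed since, so check the current Hugging Face documentation. The names `build_request` and `query` are hypothetical helpers, not part of any library.

```python
import json
import urllib.request

# Assumption: URL pattern of the hosted Inference API. Verify it against
# the current Hugging Face docs before relying on it.
API_URL_TEMPLATE = "https://api-inference.huggingface.co/models/{model_id}"


def build_request(model_id, token, message):
    """Build (but do not send) a POST request carrying one user message."""
    payload = {"inputs": {"text": message}}  # assumed conversational payload shape
    return urllib.request.Request(
        API_URL_TEMPLATE.format(model_id=model_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def query(model_id, token, message, timeout=30):
    """Send the message and return the decoded JSON response."""
    request = build_request(model_id, token, message)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


# Example call ("my-username/my-model" is a placeholder repository name):
# print(query("my-username/my-model", "YOUR-API-TOKEN", "Hello!"))
```

Keeping request construction separate from sending makes it easy to inspect the URL, headers, and body without touching the network.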

How to Build the Discord Bot

Go to the Discord Developer Portal, create an application, and add a bot to it. Since our chatbot is only going to respond to user messages, checking Text Permissions > Send Messages in the Bot Permissions settings is sufficient. Copy the bot's API token for later use.

Sign up for Repl.it and create a new Repl, choosing either Python or Node.js (for JavaScript), whichever you are working with.

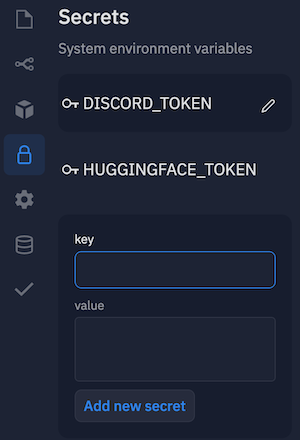

Let's store our API tokens for Hugging Face and Discord as environment variables, named HUGGINGFACE_TOKEN and DISCORD_TOKEN respectively. This helps keep them secret.
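In a Python bot, these variables can be read with `os.environ`. Here is a small sketch that fails early with a readable message if either token is missing; `load_tokens` is a hypothetical helper name, not something from my script.

```python
import os


def load_tokens(env=os.environ):
    """Read both API tokens, raising a clear error if one is missing."""
    names = ("HUGGINGFACE_TOKEN", "DISCORD_TOKEN")
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in names}


# Example call once both variables are set in the Repl:
# tokens = load_tokens()
```

Checking up front saves us from a confusing authentication error later, when the bot first tries to log in or query the model.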

Copy my Python script for a Python bot and my JS script for a JS bot. Note that for the JS bot, because of a version incompatibility with Repl.it's Node and NPM, we will need to explicitly specify an older version of discord.js in package.json:

"dependencies": {
  "discord.js": "^12.5.3"
}

With that, our bot is ready to go! Start the Repl script by hitting Run, add the bot to a server, type something in the channel, and enjoy the bot's witty response.

How to Keep the Bot Online

One problem with our bot is that it halts as soon as we stop the running Repl (equivalently, if we close the Repl.it browser window).

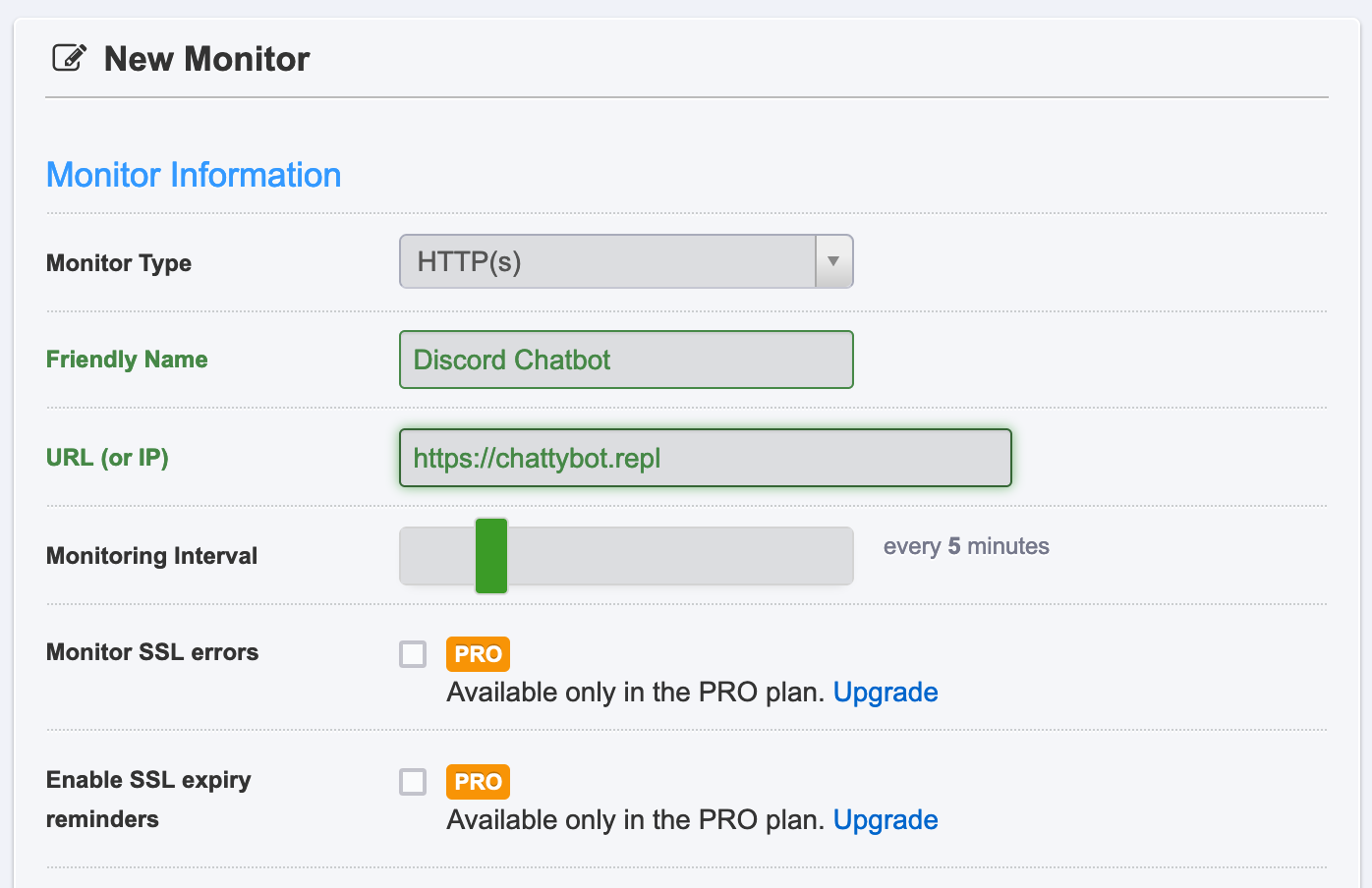

To get around this and keep our bot running indefinitely, we will set up a web server to contain the bot script, and use a service like Uptime Robot to ping our server every five minutes so that our server stays alive.

In my video tutorial, I copied the server code from these two freeCodeCamp posts (Python version, JavaScript version). Then, I set up the monitor on Uptime Robot. Now my bot continues to reply to my messages even if I close the browser (or shut down my computer altogether).
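To show the idea behind the server, here is a stand-in sketch using only Python's standard library. It is not the server code from the posts mentioned above. The names `PingHandler` and `keep_alive` and the default port 8080 are assumptions for illustration. The server runs in a background thread and answers every request with a short message, which is all a monitoring service needs to see.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer


class PingHandler(BaseHTTPRequestHandler):
    """Answer every GET or HEAD request with a tiny 200 response."""

    BODY = b"Bot is alive"

    def _send_headers(self):
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(self.BODY)))
        self.end_headers()

    def do_GET(self):
        self._send_headers()
        self.wfile.write(self.BODY)

    def do_HEAD(self):
        # Some monitors send HEAD instead of GET; reply with headers only.
        self._send_headers()

    def log_message(self, format, *args):
        pass  # avoid printing one log line per ping


def keep_alive(port=8080):
    """Start the ping server in a daemon thread and return the server."""
    server = HTTPServer(("0.0.0.0", port), PingHandler)
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    return server
```

In a bot script, we would call `keep_alive()` just before starting the Discord client. Since the client blocks the main thread, the background server keeps answering pings for as long as the bot is running.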

Congratulations on reaching the end of this tutorial! I hope you enjoyed creating the bot and have fun chatting with your favorite character! 🥳

Tutorial Video Link

More About Me and My Chatbot Project

I'm Lynn, a software engineer at Salesforce. I graduated from the University of Chicago in 2021 with a joint BS/MS in Computer Science, specializing in Machine Learning. Come say hi on my personal website!

I post fun project tutorials like this on my YouTube channel. Feel free to subscribe to catch up on my latest content. 😃

Want to learn more about my bot? Check out this 15-minute real-time chat demo featuring me, my friend, and my bot!

Interested in the model I trained? Check it out on Hugging Face:

My chatbot was so popular on a 1k+ user server that... it crashed. 🤯 Read about my deployment post-mortem in this post:

Thanks for reading!