by Avi Ashkenazi

From Augmented Reality to emotion detection: how cameras became the best tool to decipher the world

The camera is finally taking center stage in solving user experience (UX) design, technology, and communication problems.

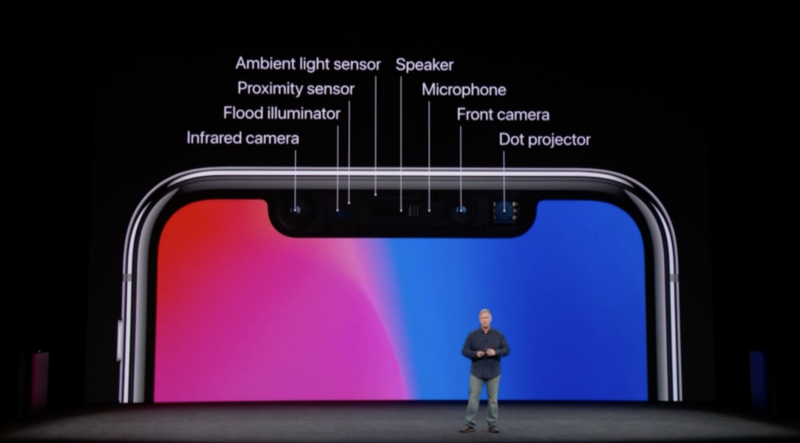

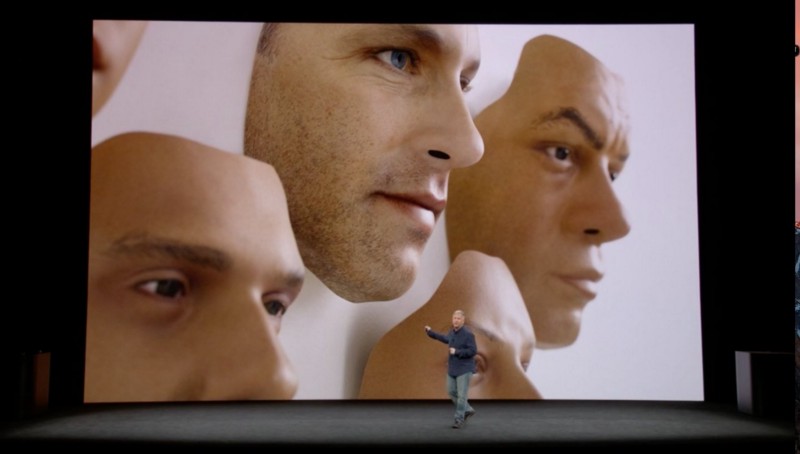

Years after the Kinect was shelved and Google Glass failed, there is new hope. The impressive sensor array that Apple miniaturized from PrimeSense’s technology to fit into the iPhone X marks the beginning of emotion-dependent interactions.

The technology itself is not new, but Apple has commercialized it and given developers access to indispensable information.

Recently, Mark Zuckerberg mentioned that much of Facebook’s focus will be on the camera and its surrounding environment. Snapchat has defined itself as a camera company. Apple and Google are also heavily investing in cameras. The camera has tremendous power that we have not yet tapped into. It has the power to detect emotions.

Inputs need to be easy, natural, and effortless

When Facebook first introduced emoji reactions as an extension of the Like button, I realized that the company was onto something. The five new reactions helped Facebook better understand its users’ emotional responses to its content. I would argue that these emojis are a glorified form of the Like, but one that works better than anything else.

In the past, Facebook only had the Like button, while YouTube had Like and Dislike buttons. These are not enough to track emotions and offer little value to researchers and advertisers. People express their emotions more fully in comments, yet Likes far outnumber comments.

Comments are text-based or sometimes images, which makes them harder to analyze, because the algorithm has to guess many contextual connections. For example, how well does the person reacting to a post know the person who posted it, and vice versa? How is the commenter connected to the subject?

Is there subtext, slang, or a reference to the person’s own experience? Is the comment part of an earlier conversation? Meanwhile, Facebook did a wonderful job of keeping the conversation positive. By not adding a Dislike button, which could have discouraged people from creating and sharing content, Facebook kept the experience pleasant.

Today I would compare Facebook to a glorified forum. Users can reply to comments or to emoji reactions; they can even Like a Like. Yet it is still very hard to know what people are feeling, because most people who read a post don’t leave a comment. So what do readers feel when they read a post?

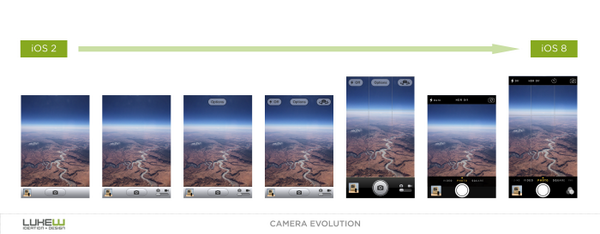

The old user experience for cameras

What do you do with a camera? Take pictures and videos, and that’s about it. Camera apps have developed enormously, adding many features around that main use case, such as high dynamic range (HDR), slow motion, and portrait mode.

The enormous number of pictures users generate has spawned a new wave of smart gallery, photo-processing, and metadata apps.

Recently, however, the focus has shifted to the life-integrated camera, which combines the strongest traits and best use cases of mobile phones. The next generation of cameras will be fully integrated into our lives and could replace all the input icons in a messaging app (microphone, camera, and location).

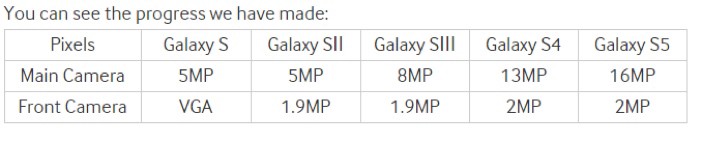

The camera is one of three components that have been developed at a dizzying pace; the screen and the processor are the other two. Year after year, every new phone has pushed the limits. Camera improvements have come in megapixels, image stabilization, aperture, speed and, as mentioned above, apps.

Let’s look at how a few companies’ products have evolved.

Much of the development focused on the camera at the back of the phone because, at least at first, the front camera was thought to be useful only for video calls. Selfie culture and Snapchat changed that. Snapchat’s face masks, later copied by everyone else, have been a huge success. Face masks were not new, since Google had introduced them earlier, but Snapchat was the one that made them popular.

Highlights from memory lane

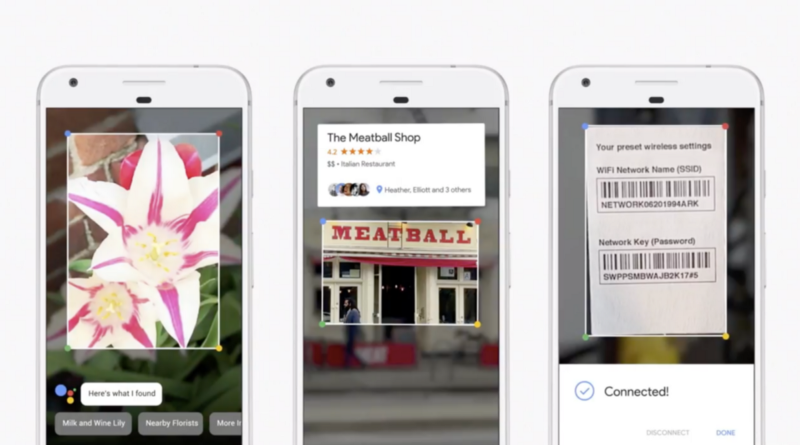

In December 2009, Google introduced Google Goggles. For the first time, users could point their phone at their surroundings to get information about them. Initially, that information was mainly about landmarks.

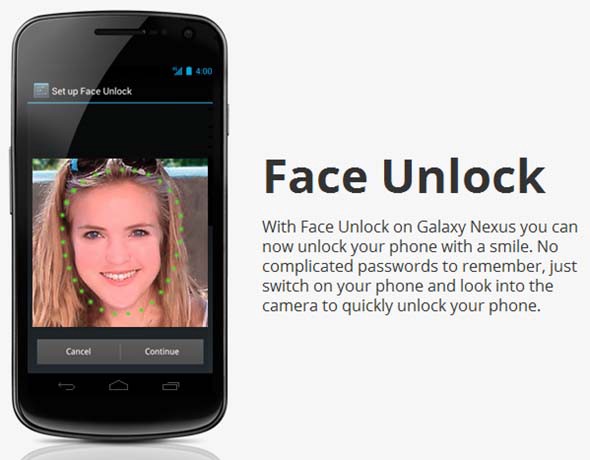

In November 2011, the Samsung Galaxy Nexus introduced facial recognition as a way to unlock the phone. Like many first attempts, it wasn’t very good, and it was later scrapped.

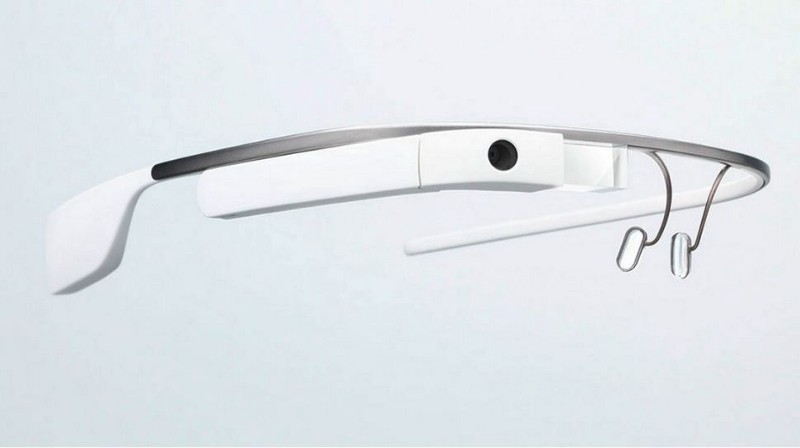

In February 2013, Google released Google Glass. It had more use cases because it could receive input not just from the camera but also from other sources, such as voice. Google Glass was always on hand, but it failed to gain traction because it was too expensive, looked unfashionable, and triggered a public backlash. It was just not ready for prime time.

So far, devices have had only limited information at their disposal: audiovisual input, GPS, and historical data. Google Glass was also limited to displaying information on a small screen positioned near the user’s eye, and that screen kept users from looking at anything else. Putting this technology on a phone for use out in the world runs into not just a technological limitation but also a physical one.

When you focus on your phone, your field of view narrows and you cannot see anything else, much like the limited field of view that user experience principles for virtual reality (VR) have to account for. That’s why some cities have created walking routes for phone users and installed traffic lights that help people walk and text. A concept like Microsoft’s HoloLens is more aligned with the spatial environment and can actually help users as they move around, rather than absorbing their attention and putting them in danger.

In July 2014, Amazon introduced the Fire Phone, which featured four cameras on its front. This was a breakthrough, even though the phone didn’t succeed. The four front cameras were used for scrolling and, together with the accelerometer, created 3D effects based on the position of the user’s head. It was the first time that a phone used its front cameras as an input method.

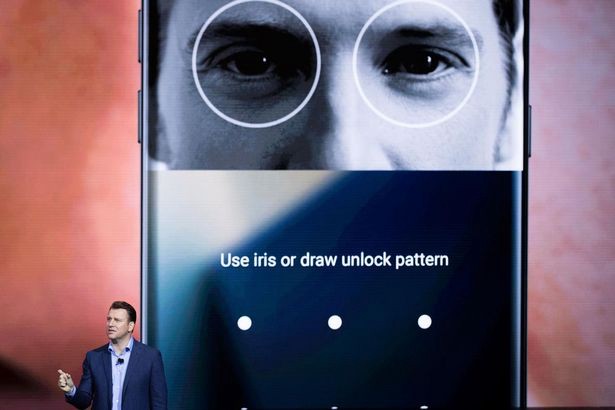

In August 2016, Samsung launched the Note 7, which let users unlock their phones by scanning their irises. With it, Samsung revived face-based biometric unlocking, an idea that had sat on the shelf for nearly five years. Unfortunately, even the tutorial is vexing to watch; Samsung doesn’t seem to have done much user experience testing for this feature.

It is awkward to hold this huge phone up at a 90° angle to your face, and it is not something anyone should be doing while walking down the street. The feature could work nicely for Saudi women who cover their faces. However, because of manufacturing defects, many Note 7 phones overheated, caught fire, or exploded, and iris scanning was put on hold for another full year until the Note 8 came out.

By that time, however, hardly anyone mentioned iris scanning. Samsung presented it on the Note 8 as just another way to unlock the phone, alongside the fingerprint sensor. That is probably because the feature was not good enough or because Samsung couldn’t make a decision (similar to the release of the Galaxy S6 and S6 Edge). For a product to succeed, it needs to serve multiple functions; otherwise it risks being forgotten.

Google took a break and then, in July 2017, released the second version of Google Glass as a business-to-business product, with use cases tailored to specific industries.

Now Google is about to release Google Lens, which brings the original Goggles use case up to date. It is Google’s effort to learn how to use visual information with additional context and to figure out what product to develop next. It appears that Google is leaning towards a camera that users can wear.

Other companies are exploring visual input as well. For example, Pinterest is seeing huge demand for its visual search tool, Lens, which people use to find items to buy and to curate products and services online.

Snapchat’s Spectacles let users record short videos easily (even though the upload process is cumbersome).

Facial recognition is now also available on the Note 8 and Galaxy S8, but the feature is not panning out as well as hoped.


Apple is often slower than its competitors to adopt new technologies, but it is good at commercializing them, as it did with the Apple Watch. The iPhone X launch was all about facial recognition and an edge-to-edge screen. There is no better way to make people use a feature than to remove all other options (like Touch ID). It’s not surprising: Apple did the same last year with wireless audio (by removing the headphone jack) and with USB-C on the MacBook Pro (by removing every other port).

There is a much bigger reason why Apple chose this technology at this time. It has to do with its augmented reality efforts.

Face ID has to overcome challenges, including recognizing users who wear the Niqāb (a face covering), users who have had plastic surgery, and young users whose faces are still changing as they grow. But the bigger picture is much more interesting. For the first time, users can do something they naturally do, with no effort, while generating data that is meaningful for the future of technology. Still, I believe that a screen that can read fingerprints is a better solution. Samsung appears to be heading in that direction (although rumour has it that Apple tried and failed).

So where is this going? What’s the target?

In the past, companies used special glasses and devices for user testing. The only output these tools could produce was heat maps, which couldn’t document what users were focusing on, or their emotions and reactions.
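To make the limitation concrete, here is a minimal sketch of how an eye-tracking heat map is typically built: gaze samples (screen coordinates in pixels) are binned into a coarse grid and counted. The function name, grid size, and sample data below are hypothetical and not taken from any particular eye-tracking product. Note what the output lacks: it records where eyes landed, but nothing about why, or with what emotion.

```python
from collections import Counter

def gaze_heat_map(samples, screen_w, screen_h, cols=4, rows=3):
    """Bin (x, y) gaze samples in pixels into a rows x cols grid of counts.

    Hypothetical helper for illustration; real eye trackers export richer data.
    """
    counts = Counter()
    for x, y in samples:
        # Skip samples that fall off-screen (e.g. the user looked away).
        if not (0 <= x < screen_w and 0 <= y < screen_h):
            continue
        col = int(x * cols / screen_w)
        row = int(y * rows / screen_h)
        counts[(row, col)] += 1
    # Return a dense grid so that cells nobody looked at show up as zeros.
    return [[counts[(r, c)] for c in range(cols)] for r in range(rows)]

# Hypothetical gaze samples on a 1000 x 750 screen; the last one is off-screen.
samples = [(100, 100), (120, 90), (900, 500), (50, 700), (2000, 50)]
for row in gaze_heat_map(samples, screen_w=1000, screen_h=750):
    print(row)
# prints [2, 0, 0, 0], then [0, 0, 0, 0], then [1, 0, 0, 1]
```

Every count in the grid is just "someone looked here"; attention, intent, and emotion are all lost in the aggregation.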

Tech trends suggest that the future involves augmented reality and virtual reality, but in my opinion it will combine audio, 3D sound, and visual inputs. That would be a wonderful experience, letting users look at anything, anywhere, and get information about it at the same time.

What if we could know where users are looking and what they are focusing on? Marketing and design professionals have tried to capture and analyze this for years. What could do it better than a sensor array like the one in the iPhone X, as a starting point? Later on, this could evolve into glasses that see what the user is focused on.
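Knowing what a user is focusing on usually takes more than a single gaze point. One common approach in eye-tracking work is to treat a region as "focused" only when the gaze dwells on it long enough. Below is a minimal sketch of that idea, assuming timestamped gaze samples and rectangular regions of interest; the region names, the 300 ms threshold, and the data are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Region:
    """A hypothetical rectangular area of interest on screen, in pixels."""
    name: str
    x: float
    y: float
    w: float
    h: float

    def contains(self, px, py):
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h

def focused_regions(samples, regions, min_dwell_ms=300):
    """Return names of regions the gaze stayed on for at least min_dwell_ms.

    samples: list of (timestamp_ms, x, y) tuples sorted by time.
    Each region is reported once, in the order focus was first detected.
    The default threshold is an illustrative assumption, not a standard.
    """
    focused = []
    current, start = None, None
    for t, x, y in samples:
        hit = next((r.name for r in regions if r.contains(x, y)), None)
        if hit != current:
            # Gaze moved to a different region (or to none): restart the dwell timer.
            current, start = hit, t
        if current is not None and t - start >= min_dwell_ms and current not in focused:
            focused.append(current)
    return focused

regions = [Region("headline", 0, 0, 400, 100), Region("ad", 0, 400, 400, 200)]
samples = [(0, 50, 50), (100, 60, 55), (200, 70, 60), (350, 80, 50),
           (400, 50, 450), (500, 60, 460)]
print(focused_regions(samples, regions))  # ['headline']: the ad got only a glance
```

A dwell threshold is a crude stand-in for attention; the appeal of a front-facing sensor array is that it could add signals such as facial expression on top of where the eyes rest.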

Reactions are powerful and addictive

Reactions help people converse and increase retention and engagement. Some apps offer reactions to posts as messages that can be sent to friends. There are funny videos on YouTube that show the reactions of people who watch videos. There is even a TV show, called Gogglebox, that is dedicated to showing people watching TV.

At Google I/O, the company’s annual developer festival, Google introduced a way for viewers to pay creators on its platform. It’s like what the brilliant Patreon site does, but in a much more prominent way: Super Chat helps you stand out from the crowd and grab the creator’s attention.

In a 2009 student project, Chris Harrison created a keyboard with pressure-sensing keys. Based on how hard users type, the keyboard infers their emotions, such as whether they are angry or excited, and the letters get bigger as a result. Now imagine combining it with a camera that sees users’ facial expressions as they type, since people tend to express their emotions while typing a message.
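A minimal sketch of the pressure-to-letter-size idea, assuming each keystroke reports a normalized pressure between 0 and 1. The font sizes, the 0.7 threshold, and the "intense"/"neutral" labels are hypothetical; this is not how Harrison’s project classified emotions, which the post does not describe. Notice that pressure alone only measures intensity. It cannot tell anger from excitement, which is exactly where a camera reading facial expressions could help.

```python
BASE_PT = 12.0  # hypothetical base font size, in points
MAX_PT = 24.0   # hypothetical maximum font size, in points

def font_size(pressure):
    """Scale a keystroke's font size linearly with its normalized pressure (0..1)."""
    p = min(max(pressure, 0.0), 1.0)  # clamp noisy sensor readings into range
    return BASE_PT + p * (MAX_PT - BASE_PT)

def intensity(pressures, threshold=0.7):
    """Label a typed message 'intense' if its mean key pressure exceeds threshold.

    This captures arousal only; telling anger from excitement would need
    another signal, such as facial expression.
    """
    if not pressures:
        return "neutral"
    mean = sum(pressures) / len(pressures)
    return "intense" if mean > threshold else "neutral"

message = "hi!"
pressures = [0.2, 0.5, 1.0]  # hypothetical per-key pressure readings
for ch, p in zip(message, pressures):
    print(ch, font_size(p))
print(intensity(pressures))
```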

What would such a UX look like?

Consider the pairing of a remote and a center point in virtual reality. The center of the view is our focus, but we also have a secondary focus point: wherever the remote is pointing. However, this type of user experience cannot work in augmented reality. To take advantage of augmented reality, which is a new focus for Apple, the system must know what the user is focusing on.

Apple’s ARKit and Google’s ARCore SDKs are just the start, and both represent the future of development. That is because of the amazing input and output they can get from the front and back cameras combined, which will allow for a greater focus on input.

A more critical view on future developments

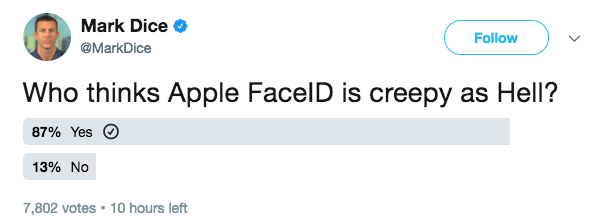

Apple opened the way for facial recognition and reaction-driven Animoji, but things will get interesting when other organizations start implementing Face ID-style technology. Currently, it manifests in a basic and harmless way, but the goal remains to gather more information: information that will be used to track us and sell products to us. It will also let companies learn more about us and gather our emotional data.

It is important to note that the front camera doesn’t come alone; it is the expected result of Apple buying PrimeSense. The front-facing array includes an infrared camera, a depth sensor, and more. (I think they could do well with a heat sensor too.) The concern is not that someone will keep videos of our faces, but that a scraper will document all the information about our emotions.

Summary

It’s exciting that augmented reality now comes with algorithms that can read faces. Many books explain how to identify facial reactions, but now it’s time for technology to do this. That will be wonderful for many reasons. For example, robots will be able to see how we feel and react, and glasses will get more context about what we need them to do. When it comes to computers, looking at a combination of signals is better, because it helps the machine understand you.

The possibilities are endless once you know what the user is focusing on. It’s the dream of every person who works with technology.

This blog post was originally published here.