Why do you need this?

Copying and pasting by hand is fine if you only have a few files to work with.

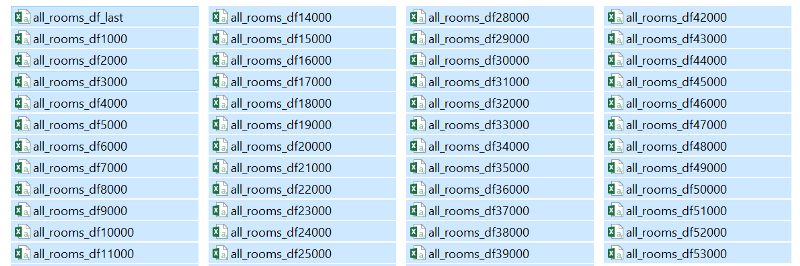

But imagine you have 100+ files to concatenate. Are you willing to do that manually? Doing it repeatedly is tedious and error-prone.

If all the files have the same table structure (same headers & number of columns), let this tiny Python script do the work.
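Why does the structure matter? pd.concat lines columns up by name, so a header that differs even in capitalization does not raise an error. It silently becomes an extra column padded with NaN. The sketch below uses two made-up DataFrames to show this. The column names and the `headers_match` helper are hypothetical and not part of the original script; they only illustrate a quick check you could run before combining.

```python
import pandas as pd

# Two hypothetical monthly extracts; the second spells one header differently
jan = pd.DataFrame({"date": ["2023-01-01"], "sales": [100]})
feb = pd.DataFrame({"date": ["2023-02-01"], "Sales": [120]})

# concat aligns on column names, so "sales" and "Sales" end up as separate columns
combined = pd.concat([jan, feb], ignore_index=True)
print(combined.columns.tolist())  # ['date', 'sales', 'Sales']


def headers_match(frames):
    """Return True if every DataFrame has the same column names in the same order."""
    first = list(frames[0].columns)
    return all(list(frame.columns) == first for frame in frames[1:])


print(headers_match([jan, feb]))  # False
```

If `headers_match` returns False for your files, fix the headers first; otherwise the combined CSV will have half-empty columns.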

Step 1: Import packages and set the working directory

Change “/mydir” to your desired working directory.

```python
import os
import glob
import pandas as pd

# change "/mydir" to the folder that holds your CSV files
os.chdir("/mydir")
```

Step 2: Use glob to match the pattern ‘*.csv’

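Match the file names against the pattern ‘*.csv’ and save the resulting list in the ‘all_filenames’ variable. Note that glob uses Unix shell-style wildcards, not regular expressions: ‘*’ matches any run of characters and ‘?’ matches a single character, so ‘*.csv’ picks up every file whose name ends in .csv.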
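To see the wildcard behaviour in isolation, here is a small sketch that creates a few empty files with made-up names in a temporary folder and globs for ‘*.csv’. It also compares the result with the equivalent regular expression. Because glob returns names in no guaranteed order, the sketch sorts them; doing the same in your own script makes the row order of the combined file reproducible.

```python
import glob
import os
import re
import tempfile

# Hypothetical file names, created empty in a throwaway folder for the demo
names = ["jan.csv", "feb.csv", "notes.txt", "backup.csv.bak"]

with tempfile.TemporaryDirectory() as tmp:
    for name in names:
        open(os.path.join(tmp, name), "w").close()
    # '*' is a shell-style wildcard; glob.escape protects special characters in the folder path
    pattern = os.path.join(glob.escape(tmp), "*.csv")
    # glob's order is not guaranteed, so sort the names for a stable result
    matched = sorted(os.path.basename(p) for p in glob.glob(pattern))

# The equivalent regular expression needs '.*' and an escaped dot
regex_matched = sorted(n for n in names if re.fullmatch(r".*\.csv", n))

print(matched)        # ['feb.csv', 'jan.csv']
print(regex_matched)  # ['feb.csv', 'jan.csv']
```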

```python
extension = 'csv'
# glob.glob already returns a list of matching file names
all_filenames = glob.glob('*.{}'.format(extension))
```

Step 3: Combine all files in the list and export as CSV

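Use pandas to concatenate all files in the list and export the result as CSV. The output file is named “combined_csv.csv” and is saved in your working directory. Because it also ends in .csv, delete or move it before running the script again; otherwise Step 2 will pick it up as one of the inputs.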

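```python
# combine all files in the list
combined_csv = pd.concat([pd.read_csv(f) for f in all_filenames])

# export to csv
combined_csv.to_csv("combined_csv.csv", index=False, encoding='utf-8-sig')
```

encoding = ‘utf-8-sig’ is added so that non-English characters display correctly. It writes a UTF-8 byte order mark (BOM) at the start of the file, which spreadsheet programs such as Excel use to recognize the file as UTF-8; without it, accented or non-Latin characters can show up garbled.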
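To check what ‘utf-8-sig’ actually changes, the sketch below writes a tiny made-up DataFrame and inspects the first bytes of the file. The only difference from plain ‘utf-8’ is the three-byte BOM at the start; the rest of the file is ordinary UTF-8.

```python
import os
import tempfile

import pandas as pd

# Made-up data containing non-ASCII characters
df = pd.DataFrame({"city": ["München", "東京"]})

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "demo.csv")
    df.to_csv(path, index=False, encoding="utf-8-sig")
    with open(path, "rb") as fh:
        raw = fh.read()

print(raw[:3])  # b'\xef\xbb\xbf'  (the UTF-8 byte order mark)

# Decoding with 'utf-8-sig' strips the BOM again
text = raw.decode("utf-8-sig")
print(text.splitlines()[0])  # city
```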

And…it’s done!
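If you run this regularly, you may want to fold the three steps into one function. The sketch below is a variation, not the completed script on GitHub; `combine_csvs`, its parameters and the optional `source_file` column are hypothetical names. It passes the folder explicitly instead of calling os.chdir, sorts the file list, skips a previous combined_csv.csv so a rerun does not read its own output, and fails with a clear message when nothing matches (pd.concat on an empty list would otherwise raise a less obvious "No objects to concatenate" error).

```python
import glob
import os
import tempfile

import pandas as pd


def combine_csvs(directory, output_name="combined_csv.csv", add_source=False):
    """Concatenate every *.csv file in `directory` into `output_name` in the same folder.

    Sketch only: assumes all inputs share the same header row.
    """
    pattern = os.path.join(glob.escape(directory), "*.csv")
    # Sort for a reproducible row order and skip the output of a previous run
    paths = sorted(p for p in glob.glob(pattern)
                   if os.path.basename(p) != output_name)
    if not paths:
        raise FileNotFoundError(f"no CSV files found in {directory!r}")

    frames = []
    for path in paths:
        df = pd.read_csv(path)
        if add_source:
            # Hypothetical extra column recording which file each row came from
            df["source_file"] = os.path.basename(path)
        frames.append(df)

    # ignore_index=True renumbers rows 0..n-1 instead of repeating each file's index
    combined = pd.concat(frames, ignore_index=True)
    combined.to_csv(os.path.join(directory, output_name), index=False, encoding="utf-8-sig")
    return combined


# Demo on throwaway files with made-up data; on your own data call combine_csvs("/mydir")
with tempfile.TemporaryDirectory() as tmp:
    samples = {"jan.csv": "date,sales\n2023-01-01,100\n",
               "feb.csv": "date,sales\n2023-02-01,120\n"}
    for name, content in samples.items():
        with open(os.path.join(tmp, name), "w", encoding="utf-8") as fh:
            fh.write(content)
    combine_csvs(tmp)                          # first run writes combined_csv.csv
    demo = combine_csvs(tmp, add_source=True)  # rerun skips its own earlier output

print(demo)
```

ignore_index=True only affects the in-memory DataFrame; the exported file looks the same either way because of index=False.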

This article was inspired by a problem I actually face every day, and the code structure comes from a discussion on Stack Overflow. The completed script for this how-to is documented on GitHub.

Thank you for reading. Please give it a try, have fun and let me know your feedback!

If you like what I did, consider following me on GitHub, Medium, and Twitter. Make sure to star it on GitHub :P