This post is based on my recently published book, Keeping Up, a quick and accessible guide to the current state of the tech industry and the big trends you can't afford to ignore.

In it, we'll cover everything from digital security and privacy to serverless platforms, the internet of things (IoT), technology research, and much more, all at a high level.

If you're a manager or decision maker at your company, someone who is plotting your career or educational trajectory, or just a curious person, then this book is for you.

Here's a link to the physical book if you'd like to grab a copy.

Table of Contents

- Chapter 1: Understanding Digital Security

- Chapter 2: Understanding Digital Privacy

- Chapter 3: Understanding the Cloud

- Chapter 4: Understanding Digital Connectivity

- Chapter 5: Understanding the Business of Technology Research

- Chapter 6: Where Hot Trends Go to Die

- Chapter 7: Compute Platforms

- Chapter 8: Security and Privacy

Chapter 1: Understanding Digital Security

Whatever your connection to technology, security should play a prominent role in the way you think and act.

Technology, after all, amplifies the impact of everything we do with it. The things we say and write using communication technologies can be read and heard by many, many more people than would be possible without. The ability to conveniently connect with people and collaborate on projects of all kinds is much greater.

The tasks we can perform are, through the magic of automation, almost limitless. The scope of information we can instantly access through the simplest and least expensive devices towers far beyond anything the greatest scholars could have hoped to see in a lifetime just a few decades ago.

All that means that criminals and other individuals unconstrained by moral conscience will have yet more powerful tools to compromise the data you create and consume, and steal or damage the property you acquire. So you've got a strong interest in learning how to protect yourself, your property, and that of the people and organizations around you.

This chapter will present a brief overview of what's at stake in the technology security domain. We'll define the kinds of threats we face and discuss the key tools at our disposal for pushing back against those threats.

If you're interested in digging deeper into the topic, my LPI Security Essentials book is entirely devoted to giving you the full picture.

Hacking? What's Hacking?

Defining computer hacking in a way that doesn't anger someone, somewhere, is like talking about politics at work. Be prepared for long, awkward silences and possibly violence.

You see, purists might insist that the term hacking should apply exclusively to individuals focused on forcibly re-purposing computer hardware for non-standard purposes. Others reserve the title for people who bypass authentication controls to break into networks for criminal or political purposes. And how about those who wear the title as a sign of their practical expertise in all things IT? (And then, of course, there are crackers.)

But this is my book, so I'm going to use the term any way I want.

I therefore decree that hacking is all about plans the bad guys have for your digital devices. Specifically, their plans to get in without authorization, get out without being noticed, and (sometimes) take your stuff with them when they leave.

Using the term this way gives us a useful way to organize a discussion of some common and particularly scary threats.

How Hackers Get In

The trick is to find a way through your defences (like passwords, firewalls, and physical barriers). In most cases, passwords probably provide the weakest protection:

- Passwords are often short, use a narrow range of characters, and are easy to guess.

- If a device came with a simple factory default password (like "admin" or "1234") just meant to get you in for the first time, then the odds are pretty good that many users will never get around to trading it in for something better.

- Even strong passwords can be stolen by deceptive phishing email scams ("Click here to login to your bank account..."); social engineering ("Hi, it's Ed from IT. We're having some trouble with your corporate account. Would you mind telling me your password over the phone so I can quickly fix it?"); and keyboard tracking software.
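To put rough numbers on the "short and narrow range of characters" problem, here's a minimal sketch that counts how many candidates an attacker would have to try in the worst case. It assumes every character is picked independently and at random, which real passwords rarely are, so treat the results as upper bounds. The function names are my own, made up for illustration.

```python
import math


def password_space(alphabet_size: int, length: int) -> int:
    """Number of distinct passwords of a given length over a given alphabet."""
    return alphabet_size ** length


def entropy_bits(alphabet_size: int, length: int) -> float:
    """Size of the same search space expressed in bits (log base 2)."""
    return length * math.log2(alphabet_size)


# A 4-digit PIN: only ten possible characters, four positions.
pin_space = password_space(10, 4)          # 10,000 candidates

# Eight lowercase letters: a longer password, but still a narrow alphabet.
lowercase_space = password_space(26, 8)    # 208,827,064,576 candidates

# Sixteen characters drawn from the 94 printable ASCII symbols.
strong_space = password_space(94, 16)

print(f"4-digit PIN:        {pin_space:,}")
print(f"8 lowercase chars:  {lowercase_space:,}")
print(f"16 printable chars: {strong_space:,}")
print(f"Bits for the last one: {entropy_bits(94, 16):.1f}")
```

Length and character variety multiply together, which is why a long passphrase beats a short "complex" password. None of this helps, of course, if the password is a factory default or gets handed to "Ed from IT".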

We'll talk more about firewalls later in this chapter. And physical barriers? I think you already know what a locked door looks like. But it's probably worth spending a few moments thinking about other kinds of digital attack.

The big prize is usually getting to your data and making off with copies. But for some, simply destroying the originals can be just as satisfying.

Of course, logging into your devices using stolen passwords is the most straightforward approach. But access can also be achieved by intercepting your data as it travels across an insecure network.

One approach that's commonly used here is known as a man-in-the-middle attack, where data packets can be intercepted in transit and altered without authorized users at either end knowing anything's wrong.

Properly encrypting your network connections (and avoiding unsafe public networks altogether) is an effective protection against this kind of threat. We'll talk more about encryption a bit later.

If the hardware you're using has an undocumented "back door" built in, then you're pretty much toast whatever you do. We'll talk more about back doors later in the book.

But for now, I'll just note that there has been no shortage of factory-supplied laptops, rack servers, and even high-end networking equipment that's been intentionally designed to include serious access vulnerabilities. Be very careful where you purchase your compute devices.

If the attackers find a way into your physical building (sometimes posing as employees of a delivery company), they could quietly plug a tiny listening device into an unused Ethernet jack on your network. That'll give them a nice platform to watch and even influence all your activities from the inside.

Protecting your physical infrastructure and carefully monitoring network activity is your best hope against that kind of intrusion.

Even if your home or office is all fortressed up, there's no guarantee that data moving around on mobile devices (like smartphones or laptops) won't find its way into the wrong hands.

And even if you've been careful to use only the best passwords for those devices, the data drives themselves can still be easily mounted as external partitions on a thief's own machine. Once mounted, your files and account information will now be wide open.

The only way to protect your mobile devices from this kind of threat is to encrypt the entire drive using a strong passphrase.

What Hackers Are After

Now that entire economies are run on computers directly connected to public networks, there's money and value to be had through well-planned corporate, academic, or political espionage efforts... and through old fashioned, traditional theft.

Whether the goal is building up a military or commercial competitive advantage, completely destroying the competition, or just getting your hands on "free" money, illegally accessing other people's data has never been easier.

So what are hackers likely to be after?

All the important financial and other sensitive information you'd prefer they didn't have. Including, it should be noted, the kind of information you use to identify yourself to banks, credit card companies, and government agencies.

Once the bad guys have got important data points like your birth date, home address, government-issued ID numbers, and some basic banking details, it's usually not hard to present themselves as though they're you, completely taking over your identity in the process.

Digital attacks can also be used as blackmail, forcing victims to pay the attackers to undo the damage those attackers have done.

That's the objective of most ransomware attacks, where hackers encrypt all the data on a victim's computers and refuse to send the decryption keys needed to restore rightful access unless the victim sends them lots of money. Such attacks have already effectively brought down critical infrastructure like the IT systems powering hospitals and cities.

The very best defence against ransomware is to have full and tested backups of your critical data and a reliable system for quickly restoring it to your hardware. That way, if you're ever hit with a ransomware attack, you can simply wipe out your existing software and replace it with fresh copies, populated with your backed up data.

But you should also beef up your general security settings to make it harder for ransomware hackers to get into your system in the first place.

When their primary goal is to prevent you or your organization from going about its business, hackers can remain at a safe distance and launch a distributed denial of service (DDoS) attack against your web infrastructure.

Historical DDoS attacks have used massive swarms of thousands of illegally hijacked network-connected devices to transmit crippling numbers of requests against a single target service. When large enough, DDoS attacks have managed to bring down even huge enterprise-scale companies using sophisticated defences for hours at a time.

The site hosting one of my favorite online open source collections was hit hard more than a year ago and still hasn't fully recovered.

What Is Encryption?

If your data is unreadable, there's a lot less bad stuff that unauthorized individuals will be able to do with it. But if it's unreadable, there's probably not a whole lot you'll be able to do with it either.

Wouldn't it be nice if there was some way to present your data as unreadable in every scenario except where there's a legitimate reason?

Well waddaya know? There is, and it's called data encryption.

Encrypting Data in Transit

Encryption algorithms encode information in a way that makes it hard, or even impossible, for outsiders to read.

A simple (and ancient) example is symbol replacement, where every letter "a" in a message would be replaced with, say, the letter three positions on in the alphabet (which would be "d"). Every "b" would become "e" and so on. "Hello world" would be "khoor zruog". Then people who come across your encoded message would be unable to understand it at a glance.

Of course, it wouldn't take long for a modern computer (or even a smart 8-year-old) to decode that one.
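For the curious, here's a small sketch of that letter-shifting scheme (usually called a Caesar cipher), along with the reason it's so easy to break: there are only 26 possible shifts to try. The function name is my own invention, and I'm assuming plain English letters only.

```python
import string


def caesar_shift(text: str, shift: int) -> str:
    """Shift each English letter by `shift` places, wrapping around at 'z'."""
    shifted = []
    for ch in text:
        if ch in string.ascii_lowercase:
            shifted.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif ch in string.ascii_uppercase:
            shifted.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            # Spaces, digits, and punctuation pass through unchanged.
            shifted.append(ch)
    return "".join(shifted)


encoded = caesar_shift("hello world", 3)
print(encoded)                      # khoor zruog

# Decoding with the known shift is just shifting back.
print(caesar_shift(encoded, -3))    # hello world

# Without the key, an attacker can simply try every possible shift
# and pick out the one candidate that reads as real language.
candidates = [caesar_shift(encoded, -guess) for guess in range(26)]
print("hello world" in candidates)  # True
```

With only 26 candidates to read through, the smart 8-year-old doesn't even need the computer.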

But some very clever cryptologists have been working hard over most of the past century to produce much more effective algorithms.

There are some significant variations of modern cryptography. But the general idea is that people can apply an encryption algorithm to their data and safely transmit the encrypted copy over insecure networks. Then the recipient can apply a decryption key of some sort to the data, restoring the original version.

Encryption is now widely available for many common activities, including sending and receiving emails. You can similarly ensure that the data you request from a website is the same data that's eventually displayed in your browser by checking the lock icon in your browser's address bar. The icon confirms that the website server employs Transport Layer Security (TLS) encryption.
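If you'd like to see roughly what your browser is doing behind that lock icon, here's a hedged sketch using Python's standard ssl module. The helper names are mine, and the second function needs a live network connection, so I've left the call commented out. The point is that a properly configured client refuses to talk to a server whose certificate doesn't check out.

```python
import socket
import ssl


def make_verifying_context() -> ssl.SSLContext:
    """Build a client-side TLS context that validates certificates and hostnames."""
    return ssl.create_default_context()


def describe_tls(host: str, port: int = 443, timeout: float = 5.0) -> tuple:
    """Connect to a server and report the negotiated TLS version and cipher.

    Raises ssl.SSLCertVerificationError if the server's certificate
    can't be validated for the requested hostname.
    """
    context = make_verifying_context()
    with socket.create_connection((host, port), timeout=timeout) as raw_socket:
        with context.wrap_socket(raw_socket, server_hostname=host) as tls_socket:
            return tls_socket.version(), tls_socket.cipher()[0]


context = make_verifying_context()
print(context.verify_mode == ssl.CERT_REQUIRED)  # True: a valid certificate is mandatory
print(context.check_hostname)                    # True: the name must match the certificate

# Requires network access; the host is just an example.
# print(describe_tls("letsencrypt.org"))
```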

Over the past few years, the Let's Encrypt project (letsencrypt.org) has encouraged millions of new websites to use encryption by providing free encryption certificates and simple-to-use tools to help server administrators install them.

Encrypting Data at Rest

TLS will protect your data when it's out and about, but what'll keep it safe even when it's relaxing in its comfy storage disk? File and drive encryption, that's what.

All operating systems now offer integrated software for encrypting all or part of a storage disk either at installation time or later. Each time you power up an encrypted disk, you'll be prompted to enter the passphrase you created when you enabled encryption.

The thing is that if you forget your passphrase, you're pretty much permanently locked out of your system and the data is as good as gone forever.

But if you don't encrypt your system then, as we noted earlier, anyone who steals the hardware will have easy and instant access to your private information.

It's a tough world out there, isn't it?

What Does a Firewall Do?

You can think of a firewall as a filter.

Just like, say, a water filter is able to block certain impurities, allowing only clean water through, a firewall can inspect every packet of data coming into or leaving your infrastructure, blocking access where appropriate.

Besides not needing to be replaced every few weeks, the big advantage of a firewall over a water filter is that it can be closely configured to permit and refuse entry to exactly match your security and functional needs, and then updated later should your needs change.
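The filtering idea can be sketched in a few lines of code. This is a toy model, not how any particular firewall product works: the Packet and Rule classes, the rule list, and the IP ranges (all taken from blocks reserved for documentation) are assumptions I've made up for illustration. The first rule that matches a packet decides its fate, and anything that matches nothing is refused.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Packet:
    source_ip: str
    dest_port: int
    protocol: str  # "tcp" or "udp"


@dataclass(frozen=True)
class Rule:
    action: str                           # "allow" or "deny"
    protocol: Optional[str] = None        # None matches any protocol
    dest_port: Optional[int] = None       # None matches any port
    source_network: str = "0.0.0.0/0"     # default matches any IPv4 source

    def matches(self, packet: Packet) -> bool:
        source = ipaddress.ip_address(packet.source_ip)
        return (
            (self.protocol is None or self.protocol == packet.protocol)
            and (self.dest_port is None or self.dest_port == packet.dest_port)
            and source in ipaddress.ip_network(self.source_network)
        )


def decide(packet: Packet, rules: List[Rule], default: str = "deny") -> str:
    """Return the action of the first matching rule, or the default."""
    for rule in rules:
        if rule.matches(packet):
            return rule.action
    return default


# Hypothetical policy: block one troublesome range outright, let anyone
# reach the web server, and allow SSH only from the office network.
RULES = [
    Rule(action="deny", source_network="198.51.100.0/24"),
    Rule(action="allow", protocol="tcp", dest_port=443),
    Rule(action="allow", protocol="tcp", dest_port=22, source_network="192.0.2.0/24"),
]

print(decide(Packet("203.0.113.9", 443, "tcp"), RULES))   # allow
print(decide(Packet("198.51.100.7", 443, "tcp"), RULES))  # deny
print(decide(Packet("203.0.113.9", 22, "tcp"), RULES))    # deny
print(decide(Packet("192.0.2.10", 22, "tcp"), RULES))     # allow
```

Updating the firewall "should your needs change" amounts to editing that list of rules. The order matters, since an early broad rule can shadow a later, more specific one.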

Hardware Firewalls

A hardware firewall is a purpose-built physical networking device that's commonly used within enterprise environments.

Such firewalls are installed at the edge of a private network and set to block potentially dangerous incoming traffic, redirect other traffic to remote destinations, or permit traffic to access thousands of hosts within the local network.

Hardware firewalls are sold by specialized companies like Cisco and Juniper, and also general equipment manufacturers like HP and Dell.

Firewalling appliances tend to be very expensive, often costing many thousands of dollars. They're normally only deployed to manage enterprise infrastructure.

Software Firewalls

A software firewall is an application that runs on a regular PC that can perform just about any function that you'd otherwise expect from a hardware firewall.

There are two important differences:

- Firewall software (like the Linux iptables utility) is often free and, while complicated, enjoys the benefits of vast documentation resources. The software can also be installed on any old PC that's just lying around, reducing your overall cost to nearly nothing.

- You won't want to use such a firewall within a busy business environment, however, since a regular PC probably won't have the compute power necessary to manage high volumes of network traffic. Nor, in most such cases, will it be reliable enough to provide mission-critical services 24/7.

There's another flavor of software firewall that's used as part of consumer-grade operating systems. Such firewalls allow you to better secure your OS by setting rules for what kind of activities you want to allow. These can be especially useful for mobile devices that frequently move from network to network.

Cloud computing platforms - like Amazon Web Services (AWS) and Microsoft's Azure - provide a firewall-like technology for use with the resources you might deploy within their systems. Firewall policies might exist in objects with names like "security group" or "access control list" that can be applied to whichever resource requires them.

Who Does Security Best?

In the not too distant past, you would often hear IT professionals swearing they would never run their IT operations on infrastructure they didn't physically control.

This was common when referring to outsourcing to third party, offsite companies or to cloud computing platforms.

Whether it was because those administrators didn't trust the reliability and security of compute infrastructure run by strangers, or because regulatory restrictions required that sensitive workloads remained local, the sentiment was widely shared. And it made sense.

But the past is a foreign country. Today, it can be forcefully argued, the most secure and reliable environments can be found in the biggest public cloud providers.

Why? They've got the money and incentive to hire the very best engineers, and the money and incentive to build the very best infrastructure.

Beyond that, cloud providers maintain data centers in political jurisdictions around the world, and go to great lengths to ensure their deployments comply with industry and government standards.

Let me illustrate. Remember the DDoS threat we discussed a bit earlier in the chapter?

Well, back in the summer of 2020, an unnamed organization deploying resources on AWS was hit with a DDoS attack peaking at 2.3 Tbps. That is, each and every second, requests hit that organization's public-facing service with 2.3 terabits of data.

What does "2.3 terabits" actually mean?

Well, a megabyte is (approximately) one million bytes of information (a PDF version of this book would probably take up six megabytes or so). A gigabyte is one thousand million bytes of information. A terabyte is one thousand thousand million bytes of information. And since there are eight bits in a byte, 2.3 terabits works out to roughly 0.29 terabytes.

A full terabyte would be the equivalent of around 165,000 PDF books. 2.3 terabits would be the rough equivalent of 48,000 PDF books.

Now try to imagine all the text characters used to fill 48,000 PDF books being thrown at a web service each second.
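If you'd like to check the arithmetic yourself, here's a quick sketch. It assumes decimal units (a megabyte is exactly one million bytes), my own six-megabyte guess for the size of a PDF book, and the conventional reading of Tbps as terabits (not terabytes) per second. For comparison, it also shows the result if the same figure is read as terabytes.

```python
MEGABYTE = 10**6            # bytes, using decimal units
TERABYTE = 10**12           # bytes
BITS_PER_BYTE = 8
PDF_BOOK_BYTES = 6 * MEGABYTE   # assumed size of one PDF book


def books_equivalent(num_bytes: float) -> int:
    """How many six-megabyte PDF books fit into this many bytes (rounded)."""
    return round(num_bytes / PDF_BOOK_BYTES)


def terabits_to_bytes(terabits: float) -> float:
    """Convert terabits to bytes: 10**12 bits per terabit, 8 bits per byte."""
    return terabits * 10**12 / BITS_PER_BYTE


books_per_terabyte = books_equivalent(TERABYTE)
books_if_terabits = books_equivalent(terabits_to_bytes(2.3))
books_if_terabytes = books_equivalent(2.3 * TERABYTE)

print(f"One terabyte:            {books_per_terabyte:,} books")
print(f"2.3 terabits per second: {books_if_terabits:,} books per second")
print(f"2.3 terabytes:           {books_if_terabytes:,} books")
```

Whichever way you read the unit, that's tens or hundreds of thousands of books' worth of data arriving every single second.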

Got that image in your mind?

Now here's what happened to that web service: Nothing. It just carried on working as though it hadn't a care in the world.

How on earth is that even possible?

Amazon's AWS Shield service simply mitigated the attack. The customer didn't have to do a thing.

That is why moving your workloads to the public cloud doesn't necessarily involve compromising your standards.

Chapter 2: Understanding Digital Privacy

Public service warning: you might find this chapter a wee bit depressing. If you'd prefer some cheering up right now, perhaps skip to "Understanding the Cloud."

For all the many benefits we enjoy from technology - and particularly the technologies that make up the public internet - there are clearly plenty of costs, too. Figuring out how you want to balance the benefits against the costs can take some careful thinking.

Here's a concise and effective way to describe the equation (whose source I've sadly forgotten):

"Select any two of privacy, security, and convenience. But you can't have all three."

In other words, if security is a critical value for you, then you'll need to give up on 24/7 instant access to your money, credit, and personal accounts. That's because that kind of access requires exposing your accounts across public networks at a level that won't permit as much data protection as you might want.

Similarly, what if you just can't live without the convenience of getting news updates and social connectivity through sites belonging to third party businesses that collect and use your personal information? Well, you'll need to "pay for it" by giving up a measure of your privacy.

Of course, most of us will choose some blend of those three elements based on a practical compromise between competing values and needs.

But making a reasonable decision on that blend will require solid information. That's what you'll find through the rest of this chapter.

How Companies Get Your Data

Curious about what kinds of personal and even private data you may be exposing through the course of a normal day on the internet?

The answer is "all kinds".

Perhaps the best way to understand the scope and nature of the problem is to break it down by platform.

Financial Transactions

Take a moment to visualize what's involved in a simple online credit card transaction.

You probably signed into the merchant's website using your email address as an account identifier and a (hopefully) unique password.

After browsing a few pages, you'll add one or more items to the site's virtual shopping cart. When you've got everything you need, you'll begin the checkout process, entering shipping information, including a street address and your phone number. You might also enter the account number of the loyalty card the merchant sent you and a coupon code you received in an email marketing message.

Of course, the key step involves entering your payment information which, for a credit card, will probably include the card owner's name and address, and the card's number, expiry date, and a security code.

Assuming the merchant infrastructure is compliant with Payment Card Industry Data Security Standard (PCI-DSS) protocols for handling financial information, then it's relatively unlikely that this information will be stolen and sold by criminals.

But either way, it will still exist within the merchant's own database.

To flesh all this out a bit, understand that using your loyalty card account and coupon code can communicate a lot of information about your shopping and lifestyle preferences, along with records of some of your previous activities.

Your account on the site also includes your contact information, and your home location.

All of that information can, at least in theory, be stitched together to create a robust profile of you as a consumer and citizen.

It's for these reasons that I personally prefer using third-party e-commerce payment systems like PayPal because such transactions leave no record of my specific payment method on the merchant's own databases.

Devices

Modern operating systems are built from the ground up to connect to the internet in multiple ways.

They'll often automatically query online software repositories for patches and updates and "ask" for remote help when something goes wrong. Some performance diagnostics data is sent and stored online, where it can contribute to statistical analysis or bug diagnosis and fixes.

Individual software packages might connect to remote servers independently of the OS to get their own things done.

All that's fine.

Except, you might have a hard time being sure whether all the data coming and going between your device and the internet is stuff you're OK sharing.

Can you know that private files and personal information aren't being swept in with all the other data? And are you confident that none of your data will ever accidentally find its way into some unexpected application lying beyond your control?

To illustrate the problem, I'd refer you to devices powered by digital assistants like Amazon's Alexa (figure 2.1) and the Google Assistant ("OK Google").

Since, by definition, the microphones used by digital assistants are constantly listening for their key word ("Alexa..."), everything anyone says within range of the device is registered.

At least some of those conversations are also recorded and stored online and, as it turns out, some of those have eventually been heard by human beings working for the vendor.

In at least one case, an inadvertently-recorded conversation was used to convict a murder suspect.

Amazon, Google, and other players in this space are aware of the issue and are trying to address it. But it's unlikely they'll ever fully solve it.

Remember, convenience, security, and privacy don't work well together.

Now if you think the information from computers and tablets that can be tracked and recorded is creepy, wait 'till you hear about thermostats and light bulbs.

As more and more household appliances and tools are adopted as part of "smart home" systems, more and more streams of performance data will be generated alongside them.

And, as has already been demonstrated in multiple real-world applications, all that data can be programmatically interpreted to reveal significant information about what's going on in a home and who's doing it.

Mobile Devices

Have you ever stopped in the middle of a journey, pulled out your smartphone, and checked a digital map for directions?

Of course you have.

Well the map application is using your current location information and sending you valuable information but, at the same time, you're sending some equally valuable information back.

What kind of information might that be?

I once read about a mischievous fellow in Germany who borrowed a few dozen smartphones, loaded them up on a kids' wagon, and slowly pulled the wagon down the middle of an empty city street. It wasn't long before Google Maps was reporting a serious traffic jam where there wasn't one.

How does the Google Maps app know more about your local traffic conditions than you do?

One important class of data that feeds their system is obtained through constant monitoring of the location, velocity, and direction of movement of every active Android phone they can reach - including your Android phone.

I, for one, appreciate this service and I don't much mind the way my data is used. But I'm also aware that, one day, that data might be used in ways that sharply conflict with my interests. Call it a calculated risk.

Of course, it's not just GPS-based movement information that Google and Apple - the creators of the two most popular mobile operating systems - are getting. They, along with a few other industry players, are also handling the records of all of our search engine activity and the data returned by exercise and health monitoring applications.

In other words, should they decide to, many tech companies could effortlessly compile profiles describing our precise movements, plans, and health status. And from there, it's not a huge leap to imagine the owners of such data predicting what we're likely to do in the coming weeks and months.

Web Browsers

Most of us use web browsers for most of our daily interactions with the internet. And, all things considered, web browsers are pretty miraculous creations, often acting as an impossibly powerful concierge, bringing us all the riches of humanity without even breaking into a sweat.

But, as I'm sure you can already anticipate, all that power comes with a trade-off.

For just a taste of the information your browser freely shares about you, take a look at the Google Analytics page shown in figure 2.2. This dashboard displays a visual summary describing all the visits to my own bootstrap-it.com site over the previous seven days. I can see:

- Where in the world my visitors are from

- When during the day they tend to visit

- How long they spent on my site

- Which pages they visit

- Which site they left before coming to my site

- How many visitors make repeat visits

- What operating systems they're running

- What device form factor they're using (i.e., desktop, smartphone, or tablet)

- The demographic cohorts they belong to (genders, age groups, income groups)

Besides all that, a web server's own logs can report detailed information, in particular, the specific IP address and precise time associated with each visitor.
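To make that concrete, here's a sketch that pulls the IP address and timestamp out of a single access log entry. I'm assuming the widely used "combined" log layout, and the sample line is entirely made up (the IP address comes from a range reserved for documentation). Real server logs may be configured differently.

```python
import re
from datetime import datetime

# Pattern for one line in the common/combined access log layout (an assumption;
# the referrer and user-agent fields are optional in this sketch).
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+'
    r'(?: "(?P<referrer>[^"]*)" "(?P<agent>[^"]*)")?'
)


def parse_log_line(line: str):
    """Return a dict of fields from one log line, or None if it doesn't match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Convert e.g. "12/Mar/2021:14:05:31 +0000" into a timezone-aware datetime.
    fields["time"] = datetime.strptime(fields["time"], "%d/%b/%Y:%H:%M:%S %z")
    return fields


# A fabricated example entry, for illustration only.
SAMPLE_LINE = (
    '203.0.113.42 - - [12/Mar/2021:14:05:31 +0000] '
    '"GET /index.html HTTP/1.1" 200 5123 '
    '"https://example.com/search" "Mozilla/5.0 (X11; Linux x86_64)"'
)

visit = parse_log_line(SAMPLE_LINE)
print(visit["ip"])        # 203.0.113.42
print(visit["time"])      # 2021-03-12 14:05:31+00:00
print(visit["referrer"])  # https://example.com/search
print(visit["agent"])     # Mozilla/5.0 (X11; Linux x86_64)
```

One short line of text, and the server already knows where you connected from, exactly when, which page sent you, and what kind of machine you're using.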

What this means is that, whenever your browser connects to my website (or any other website), it's giving my web server an awful lot of information. Google just collects it and presents it to me in a fancy, easy-to-digest format.

By the way, I'm fully aware that, by having Google collect all this information about my website's users, I'm part of the problem. And, for the record, I do feel a bit guilty about it.

In addition, web servers are able to "watch" what you're doing in real time and "remember" what you did on your last visit.

To explain, have you ever noticed how on some sites, right before you click to leave the page, a "Wait! Before you go!" message pops up? Scripts that the site runs in your browser can track your mouse movements and, when the pointer gets "too close" to closing the tab or moving to a different tab, they'll display that popup.

Similarly, many sites save small packets of data on your computer called "cookies." Such a cookie could contain session information that might include the previous contents of a shopping cart or even your authentication status. The goal is to provide a convenient and consistent experience across multiple visits. But such tools can be misused.
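Here's a rough sketch of what that exchange looks like, using Python's standard http.cookies module. The cookie names and values (session_id, cart, and so on) are hypothetical. The first function builds the headers a server might send to plant the cookies, and the second reads back what a browser would return on its next visit.

```python
from http.cookies import SimpleCookie


def build_set_cookie_headers(session_id: str, cart_item: str) -> str:
    """Build the Set-Cookie header lines a server could send to a browser."""
    cookie = SimpleCookie()
    cookie["session_id"] = session_id
    cookie["session_id"]["max-age"] = 3600     # forget the session after an hour
    cookie["session_id"]["httponly"] = True    # hide it from page scripts
    cookie["session_id"]["secure"] = True      # only send it over encrypted links
    cookie["cart"] = cart_item
    return cookie.output()


def read_cookie_header(header_value: str) -> dict:
    """Parse the Cookie header a browser sends back on a later visit."""
    cookie = SimpleCookie()
    cookie.load(header_value)
    return {name: morsel.value for name, morsel in cookie.items()}


# What the server sends on the first visit...
print(build_set_cookie_headers("abc123", "keeping-up-paperback"))

# ...and what it learns when the browser comes back.
returned = read_cookie_header("session_id=abc123; cart=keeping-up-paperback")
print(returned["session_id"])  # abc123
print(returned["cart"])        # keeping-up-paperback
```

The same mechanism that remembers your shopping cart will just as happily remember anything else a site chooses to store about you.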

Finally, like operating systems, browsers will also silently communicate with the vendor that provides them. Getting usage feedback can help providers stay up to date on security and performance problems. But independent tests have shown that, in many cases, far more data is heading back "home" than would seem appropriate.

Website Interaction

Although some of this might be covered by previous sections in the chapter, I should highlight at least a couple of particularly relevant issues. Like, for instance, the fact that websites love getting you to sign up for extra value services.

The newsletters and product updates that they'll send you might well be perfectly legitimate and, indeed, provide great value. But they're still coming in exchange for some of your private contact information.

As long as you're aware of that, I've done my job.

A perfect example is the data you contribute to social media platforms like Twitter, Facebook, and LinkedIn.

You may think you're just communicating with your connections and followers, but it actually goes much further than that.

Take a marvellous - and scary - piece of software called Recon-ng that's used by network security professionals to test for an organization's digital vulnerability. Once you've configured it with some basics about your organization, Recon-ng will head out to the internet and search for any publicly available information that could be used to penetrate your defences or cause you harm.

For instance, are you sure outsiders can't possibly know enough about the software environment your developers work with to do you any damage?

Well perhaps you should take a look at the "qualifications" section from some of those job ads you posted on LinkedIn. Or how about questions (or answers) your developers might have posted to Stack Overflow?

Every post tells a story, and there's no shortage of clever people out there who love reading stories.

Software like Recon-ng can help you identify potential threats, but that only underlines your responsibility to avoid leaving your data out there in public in the first place.

The bottom line? Smile. You're being watched.

Why Companies Want Your Data

Data is money. Some of the biggest and most successful tech companies of the past decade or two made their billions from data. Generally, that'd be from your data.

Of course, the value doesn't all move in one direction.

Big tech companies do, as a rule, provide useful services. Health tracking apps do track and report on your health. Social media companies do (on rare occasion) provide for healthy social interactions. And historical performance data does sometimes help improve customer and technical service.

But businesses exist to generate revenue and, as a rule, the more data they own, the more revenue it can generate.

The more potential customers there are who provide their email and social media account coordinates, the easier it'll be to connect to them with new offers. And the easier it would be for other companies working in overlapping industries to connect to a business's customers as well. The incentive for you to sell your contact list to an interested third party is obvious.

Naturally, legal restrictions and user agreements can sometimes stop such sales of data sets. But not every use-case is necessarily covered by such laws, and not every company is necessarily bound by a strong desire to follow the law.

A delicious case in point would be Canada's Do Not Call list from all the way back in 2004. The law prevented telemarketers from contacting anyone who had added themselves to the national list. It required all telemarketers to remove every entry on that list from their own call lists.

The problem was that spammers happily downloaded the Do Not Call lists and, confident that they represented confirmed active accounts, called those specifically. The only law that was effective in this case was the law of unintended consequences.

Your data can also be useful for personalizing the results you get from search engine queries. Of course, you might sometimes enjoy seeing results relating to previous browsing behavior, but don't lose sight of the fact that your behavior is being used as part of a campaign to sell you stuff.

It's not only search engines: smartphone browsing histories are sometimes used by nearby businesses to push customized ads in your direction - sometimes even through automated digital displays on physical billboards and other signage.

Perhaps the biggest value your data can offer is when it's aggregated along with data generated by thousands or millions of other users. Data scientists can stream and parse huge, dynamic data sets to extract meaningful insights about subtle but significant trends. In many cases, such data is sanitized to remove any personally identifiable information (PII).
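To make "sanitized" and "aggregated" a little more concrete, here's a minimal sketch in Python. The field names and sample records are invented for illustration, and dropping a few named fields is only the simplest form of sanitization: it doesn't by itself guarantee that nobody can be re-identified.

```python
from collections import Counter

# Hypothetical field names; a real data set would define its own PII columns.
PII_FIELDS = {"name", "email", "phone"}

def sanitize(record):
    """Return a copy of the record with PII fields dropped."""
    return {k: v for k, v in record.items() if k not in PII_FIELDS}

def count_by(records, field):
    """Count how many sanitized records share each value of `field`."""
    return Counter(sanitize(r)[field] for r in records)

# Made-up sample data, for illustration only.
records = [
    {"name": "Ann", "email": "ann@example.com", "city": "Toronto", "steps": 9000},
    {"name": "Bob", "email": "bob@example.com", "city": "Toronto", "steps": 4000},
    {"name": "Cy", "email": "cy@example.com", "city": "Halifax", "steps": 7000},
]

print(count_by(records, "city"))
```

The aggregate tells you something about Toronto and Halifax without telling you anything about Ann, Bob, or Cy as individuals.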

We can nicely sum up the 21st Century web application business model with this popular - and accurate - expression:

"If you're not paying for the product, you are the product."

How to Protect Your Data

All that sounds pretty bleak. After all, George Orwell's 1984 was meant to be a warning, not a how-to guide.

What can you do to push back?

Be aware of your environment.

Do you still even notice those terms of service disclosures you "click to sign" before they'll let you use some service or tool?

Some of those disclosures are as long as this chapter - and, if I may say so myself, a whole lot less fun. But the fact is that they contain information that can have a profound impact on both you and your data.

Many agreements describe what data they're likely to collect and what they're planning to do with it. They'll often also offer assurances that they'll never sell your data to third parties - an assurance that they might sometimes even honor in both the letter and the spirit of the law (although there have been famous cases of companies that did neither).

I've never met anyone who has the time and energy to read through those endless disclosures from end to end. But if an organization pays a bunch of lawyers to write something, you can bet it's a serious business.

Be aware of your rights.

Beyond your specific agreement with a technology service provider, the use of your data might be regulated by government legislation.

One example is the European Union's General Data Protection Regulation (GDPR), which controls how organizations must treat any personal data they encounter in the course of their operations.

Another example is the US government's Health Insurance Portability and Accountability Act (HIPAA), which regulates the handling of private information in the health insurance and healthcare industries.

Be aware of your alternatives.

Consider adopting privacy-first tools instead of the more heavily commercial services you're using now.

For instance, the DuckDuckGo.com search engine, whose home page is shown in figure 2.3, doesn't track your search behavior and will return the same results to a particular query for you as for anyone else.

They are a for-profit business, but they earn much of their income through affiliate links that pay them a commission for sales generated through search links - none of which has any impact on your privacy.

The Brave browser, as another example, has been shown to send far less undocumented data out to the internet than any other major browser.

To be specific, in early 2020, Douglas Leith of the School of Computer Science & Statistics, Trinity College Dublin, tested six browsers for their risks of revealing unique identifying information about their host computers (scss.tcd.ie/Doug.Leith/pubs/browser_privacy.pdf). He found that Brave clearly offered the greatest privacy protection.

Brave also blocks web page ads by default, which raises a question. Since many web pages earn income exclusively through display ads, does Brave expect content providers to offer their services for free?

The browser provider actually has a business model that includes the content providers: users of the Brave browser can opt to be shown simple and extremely unobtrusive ads from carefully curated advertisers in exchange for micro payments in a cryptocurrency.

The users can then choose to make micro payments to website content providers using those funds as a way to pay for their content through the Brave Rewards program (pictured in figure 2.4).

Opting for open source applications can also be an effective privacy strategy.

OpenStreetMap (openstreetmap.org) is an alternative to Google Maps. It might not have all the bells and whistles and built-in connectivity you may be used to, but it's just that connectivity that's behind our reservations, isn't it?

If you're not comfortable with the big mobile operating system players (Android and iOS), you could, instead, buy a phone and install one of a number of experimental mobile Linux variations.

Going down this road will likely be bumpy. Expect to run into unexpected configuration and compatibility challenges, and don't expect to find all the convenient apps that you've come to know and love using the big app stores.

See a hole that needs filling? Why not contribute your own innovation by participating in existing open source projects or adding your own solutions to the community?

Chapter 3: Understanding the Cloud

You may not always be aware of it, but you're enjoying the many fruits of the cloud just about every hour of every day. Many of the joys (and horrors) of modern life would be impossible without it.

Before we talk about what it does and where it's taking us, we should explain exactly what it is.

The "cloud" is all about using other people's computers rather than your own. That's it. No, really.

Cloud providers run lots of compute servers (which are just computers that exist to "serve" applications and data in response to external requests), storage devices, and networking hardware. Whenever the impulse takes you, you can provision units of those servers, devices, and networking capacity for your own workloads. When you add millions more users taken by similar impulses, you get the modern cloud.

For many - although not all - applications, there are enormous cost and performance benefits to be realized by deploying to a cloud. And countless applications - whether small, large or smokin' colossal - have found productive homes on one cloud platform or another.

So let's see how it all works and what you might be able to do with it.

Application Server Deployment Models

Over the decades, we've been through a number of models for running server workloads. In a way, all those changes have been the product of just two technologies:

- Networking protocols that permit communication between connected nodes

- Virtualization which permits fast, efficient, and cost-effective use of hardware resources for multiple and parallel uses

Networking, largely because it's now such a stable and well established technology, isn't something we'll focus on here. But we will get back to virtualization a bit later.

Local Data Centers

In the old days, if you wanted to fire up a new server to perform a compute task, you would spend a week or so calculating how much compute power you'd need for your job. You'd then contact the sales reps at a few hardware vendors, wait for them to get back to you with bid tenders, compare the bids and, when you've selected one, wait another couple of weeks for your new hardware to be delivered. Finally you'd put all the pieces together, plug it all in, and start loading software.

The room where your servers ran would need a reliable and robust power supply and some kind of cooling system: like angry children, servers generate a great deal of heat but don't like being hot. You probably wouldn't want to do any other work in that room, since the noise of your servers' powerful internal cooling fans was difficult to ignore.

While locally-deployed servers gave you all the direct, manual control over your hardware that you could need, it came at a cost.

For one thing, opportunities for infrastructure redundancy (and the reliability that comes with it) were limited. After all, even if you regularly backed up your data (and assuming your backups were reliable), they still wouldn't protect you from a facility-wide incident like a catastrophic fire.

You would also need to manage your own networking, something that could be particularly tricky - and risky - when remote clients required access from beyond your building.

By the way, don't be fooled by my misleading use of past tense here ("were limited," "backed up"). There are still plenty of workloads of all sizes happily spinning away in on-premises data centers.

But the trend is, without question, headed in the other direction.

Server Co-Location

Another option for mid-sized to large organizations is to store your own servers in someone else's data center, an arrangement known as co-location.

The hosting company provides the server racks and power, along with all the networking and cooling equipment you'll need. Whenever you need physical access to your servers, they'll always be happy when you drop by to say hello.

This is a convenient way to maintain direct control over your servers while leaving physical security and the larger infrastructure headaches in the hands of specialists. Co-location facilities are often capable of far higher standards of security and reliability than smaller operations would be able to manage on their own.

For security reasons, co-location centers will probably not advertise their services at street level.

But if you want to see what they look like, search for "server hosting co-location" in your city and then use Google Satellite to check out one or two of the addresses that come back. If you see a large, unmarked building with dozens of powerful air conditioning units on the roof, that'll be a data center.

Virtualization

As I hinted earlier, virtualization is the technology that, more than any other, defines the modern internet and the many services it enables.

At its core, virtualization is a clever software trick that lets you convince an operating system that it's all alone on a bare metal computer when it is, in fact, just one of many OSs sharing a single set of physical resources. A virtual OS will be assigned space on a virtual storage disk, bandwidth through a virtual network interface, and memory from a virtual RAM module.

Here's why that's such a big deal: Suppose the storage disks on your server host have a total capacity of two terabytes and you've got 64GB of RAM. You might need 10GB of storage and 10GB of memory for the host OS (or hypervisor, as some virtualization hosts are called). That leaves you a lot of room for your virtual operating system instances.

You could easily fire up several virtual instances, each allocated enough resources to get their individual jobs done. When a particular instance is no longer needed, you can shut it down, releasing its resources so they'll instantly be available for other instances performing other tasks.

But the real benefits come from the way virtualization can be so efficient with your resources. One instance could, say, be given RAM and storage that, later, proves insufficient. You can easily allocate more of each from the pool - often without even shutting your instance down. Similarly, you can reduce the allocation for an instance as its needs drop.

This takes all the guesswork out of server planning. You only need to purchase (or rent) generic hardware resources and assign them in incremental units as necessary. There's no longer any need to peer into the distant future as you try to anticipate what you'll be doing in five years. Five minutes is more than enough planning.
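Here's a rough sketch of that bookkeeping in Python, using the numbers from the example above (64GB of RAM and two terabytes of storage, less 10GB of each for the host). The `ResourcePool` class and the instance names are my own invention, not any real hypervisor's interface. They're just meant to show resources moving between a shared pool and individual instances.

```python
class ResourcePool:
    """Tracks unallocated RAM and storage (in GB) on one virtualization host."""

    def __init__(self, ram_gb, storage_gb):
        self.free = {"ram": ram_gb, "storage": storage_gb}
        self.instances = {}

    def allocate(self, name, ram, storage):
        """Give an instance additional resources (creating it if needed)."""
        if ram > self.free["ram"] or storage > self.free["storage"]:
            raise ValueError("not enough free resources in the pool")
        self.free["ram"] -= ram
        self.free["storage"] -= storage
        current = self.instances.setdefault(name, {"ram": 0, "storage": 0})
        current["ram"] += ram
        current["storage"] += storage

    def release(self, name):
        """Shut an instance down and return its resources to the pool."""
        freed = self.instances.pop(name)
        self.free["ram"] += freed["ram"]
        self.free["storage"] += freed["storage"]


# 2 TB (taken here as 2000 GB) of disk and 64 GB of RAM,
# minus 10 GB of each reserved for the host.
pool = ResourcePool(ram_gb=64 - 10, storage_gb=2000 - 10)
pool.allocate("web", ram=8, storage=100)
pool.allocate("db", ram=16, storage=500)
pool.allocate("web", ram=4, storage=0)   # grow "web" without shutting it down
pool.release("db")                        # "db" is no longer needed
print(pool.free)
```

Whatever "db" was using goes straight back into the pool, where it's available to the next instance that asks.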

Now imagine all this happening on a much larger scale: Suppose you've got many thousands of servers running in a warehouse somewhere that are hosting workloads for thousands of customers.

Perhaps one customer suddenly requests another terabyte of storage space. Even if the disk that customer is currently using is maxed out, you can easily add another terabyte from some other disk, perhaps one plugged in a few hundred meters away on the other side of the warehouse.

The customer will never know the difference, but the change can be virtually instant.

Cattle vs Pets

Server virtualization has changed the way we look at computing and even at software development.

No longer is it so important to build configuration interfaces into your applications that'll allow you to tweak and fix things on the fly. It's often more effective for your developers and sysadmins to build a custom operating system image (nearly always Linux-based) with all the software pre-set. You can then launch new virtual instances based on your image whenever an update is needed.

If something goes wrong or you need to apply a change, you simply create a new image, shut down your instance, and then replace it with an instance running your new image.

Effectively, you're treating your virtual servers the way a dairy farmer treats cows: when the time comes (as it inevitably will), you take an old or sick cow out and kill it, and then bring in another (younger) one to replace it.

Anyone who's ever been involved with legacy server room administration would gasp at such a thought! Our old physical machines would be treated like beloved pets.

At the slightest sign of distress from one of them, we'd be standing, concerned, at its side, trying to diagnose what the problem was and how it could be fixed. If all else failed, we'd be forced to reboot the server, hoping against hope that it came back up again. If even that wasn't enough, we'd give in and replace the hardware.

But the modularity we get from virtualization gives us all kinds of new flexibility.

Now that hardware considerations have been largely abstracted out of the way, our main focus is on software (whether entire operating systems or individual applications).

And software, thanks to scripting languages, can be automated. So using orchestration tools like Ansible, Terraform, and Puppet, you can automate the creation, provisioning, and full life cycle management of application service instances.

Even error handling can be built into your orchestration, so your applications could be designed to magically fix their own problems.
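The "cattle" approach boils down to a simple loop: compare what's running with what you want running, and replace anything that doesn't match. Here's a toy version in Python. The `launch` and `reconcile` functions are hypothetical stand-ins I made up for this sketch. They don't represent how Ansible, Terraform, or Puppet actually work under the hood.

```python
import itertools

_ids = itertools.count(1)

def launch(image):
    """Stand-in for a real provisioning call; returns a new healthy instance."""
    return {"id": next(_ids), "image": image, "healthy": True}

def reconcile(instances, image, desired_count):
    """Keep only healthy instances running the current image, then top up."""
    keep = [i for i in instances if i["healthy"] and i["image"] == image]
    keep = keep[:desired_count]
    while len(keep) < desired_count:
        keep.append(launch(image))
    return keep

fleet = [launch("app-v1") for _ in range(3)]
fleet[1]["healthy"] = False              # one instance gets "sick"
fleet = reconcile(fleet, "app-v1", 3)    # it is replaced, not repaired
fleet = reconcile(fleet, "app-v2", 3)    # a new image replaces every instance
print([(i["id"], i["image"]) for i in fleet])
```

Notice that nothing in the loop ever tries to diagnose or repair an instance. Sick or out-of-date instances are simply dropped and new ones are launched from the image.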

Virtual Machines vs Containers

Virtual instances come in two flavors. Virtual machines (or VMs) are complete operating systems that run on top of - but to some degree independent of - the host machine.

This is the kind of virtualization that uses a hypervisor to administrate the access each VM gets to the underlying hardware resources, but such VMs are generally left to live whichever way they choose.

Examples of hypervisor environments include the open source Xen project, VMware ESXi, Oracle's VirtualBox, and Microsoft Hyper-V.

Containers, on the other hand, will share not only hardware, but also their host operating system's software kernel. This makes container instances much faster and more lightweight (since their images don't need to include a kernel).

Not only does this mean that containers can launch nearly instantly, but that their file systems can be transported between hosts and shared. Portability means that instance environments can be reliably reproduced anywhere, making collaboration and automated deployment not only possible, but easy.

Examples of container technologies include LXD and Docker. And enterprise container implementations include Google's open source Kubernetes orchestration system.

Public Clouds

Public cloud platforms have elevated the abstraction and dynamic allocation of compute resources into an art form. The big cloud providers leverage vast networks of hundreds of thousands of servers and unfathomable numbers of storage devices spread across data centers around the world.

Anyone, anywhere, can create a user account with a provider, request an instance using a custom-defined capacity, and have a fully-functioning and public-facing web server running within a couple of minutes. And since you only pay for what you use, your charges will closely reflect your real-world needs.

A web server I run on Amazon Web Services (AWS) to host two or three of my moderately busy websites costs me only $50 a year or so and has enough power left over to handle quite a bit more traffic.

The AWS resources used by the video streaming company Netflix will probably cost a bit more - undoubtedly in the millions of dollars per year. But they obviously think they're getting a good deal and prefer using AWS over hosting their infrastructure themselves.

Just who are all those public cloud providers, I'm sure you're asking?

Well that conversation must begin (and, often, end) with AWS. They're the elephant in every room.

The millions of workloads running within Amazon's enormous and ubiquitous data centers, along with their frantic pace of innovation, make them the player to beat in this race. And that's not even considering the billions of dollars in net profits they pocket each quarter.

At this point, the only serious competition to AWS comes from Microsoft's Azure, which is doing a pretty good job keeping up with service categories and, by all accounts, is making good money in the process, and Alibaba Cloud, which is mostly focused on the Asian market.

Google Cloud is in the game, but appears to be focusing on a narrower set of services where they can realistically compete.

As the barrier to entry in the market is formidable, there are only a few others who are getting noticed, including Oracle Cloud, IBM Cloud and, with a welcome change to the naming convention, DigitalOcean.

Private Clouds

Cloud goodness can also be had closer to home, if that's what you're after. There's nothing stopping you from building your own cloud environments on infrastructure located within your own data center.

In fact, there are plenty of mature software packages that'll handle the process for you. Prominent among those are the open source OpenStack (openstack.org) and VMware's vSphere (vmware.com/products/vsphere.html) environments.

Building and running a cloud is a very complicated process and not for the hobbyist or faint of heart. And I wouldn't try downloading and testing out OpenStack - even just to experiment - unless you've got a fast and powerful workstation to act as your cloud host and at least a couple of machines for nodes.

You can also have it both ways by maintaining certain operations close to home while outsourcing other operations to the cloud. This is called a hybrid cloud deployment.

Perhaps, as an example, regulatory restrictions require you to keep a backend database of sensitive customer health information within the four walls of your own operation, but you'd like your public-facing web servers to run in a public cloud. It's possible to connect resources from one domain (say, AWS) to another (your data center) to create just such an arrangement.

In fact, there are ways to closely integrate your local and cloud resources. The VMware Cloud on AWS service makes it (relatively) easy to use VMware infrastructure deployed locally to seamlessly manage AWS resources (aws.amazon.com/vmware).

The Value of Outsourcing Your Compute Operations

Why might you want to migrate workloads to the cloud? You might end up saving a lot of money. So there's that.

Of course, it's not going to work out that way for every deployment, but there do seem to be a lot of use cases where it does.

To help you make informed decisions, cloud platforms often provide sophisticated calculators for you to compare the costs of running an application locally as opposed to what it would cost in the cloud. The AWS version of that is here: aws.amazon.com/tco-calculator

Part of the pricing calculus is the way you pay.

The traditional on-premises model involved large up-front investments for expensive server hardware that you hoped would deliver enough value over the next five to ten years to justify the purchase. These investments are known as capital expenses ("Capex").

Cloud services, on the other hand, are billed incrementally (by the hour, or even minute) according to the number of service units you actually consume. This is normally classified as operating expenses ("Opex").

Using the Opex model, if you need to run a server workload only once every few days for five minutes at a time in response to an external triggering event, you can automate the use of a "serverless" workload (using a service like Amazon's Lambda) to run only when needed. Total costs: perhaps only a few pennies a month to cover those minutes the service is actually running.
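To see why that works out to pennies, here's a back-of-the-envelope comparison in Python. Every price in it is a hypothetical placeholder that I picked to keep the arithmetic simple. None of them is a real vendor rate, so plug in your own numbers before drawing any conclusions.

```python
# All prices below are hypothetical placeholders, not real vendor rates.
PRICE_PER_SECOND = 0.00002       # assumed pay-per-use charge, in dollars
SERVER_PURCHASE_PRICE = 5000.0   # assumed up-front hardware cost, in dollars
SERVER_LIFETIME_YEARS = 5

def monthly_opex(runs_per_month, minutes_per_run, price_per_second=PRICE_PER_SECOND):
    """Cost of paying only for the seconds a workload actually runs."""
    return runs_per_month * minutes_per_run * 60 * price_per_second

def monthly_capex(purchase_price=SERVER_PURCHASE_PRICE, years=SERVER_LIFETIME_YEARS):
    """Up-front hardware cost spread evenly over its expected lifetime."""
    return purchase_price / (years * 12)

# One five-minute run every three days is about ten runs a month.
print(f"Opex:  ${monthly_opex(10, 5):.2f} per month")
print(f"Capex: ${monthly_capex():.2f} per month")
```

With these made-up figures, the pay-per-use workload costs six cents a month, while the purchased server costs a little over $83 a month whether it's busy or idle.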

Besides cost considerations, there's a lot more going on in the cloud world that should attract your consideration.

You've already seen how the lag time between the decision to deploy a new server on-premises and its actual deployment (weeks or months) compares to a similar decision/deployment process in a public cloud (a few minutes).

But large cloud providers are also positioned to deliver environments that are significantly more secure and reliable.

As an example, you may remember our story about the DDoS attack from chapter 1 (Understanding Digital Security). That was the incident where the equivalent of 380,000 PDF books' worth of data was used to bombard an AWS-hosted web service each second... and the service survived. Are you confident you could do that yourself?

And how about reliability through redundancy? Would your on-premises infrastructure survive a catastrophic loss of your premises? Even if you did the right thing and maintained off-site backups, how long would it take you to apply them to rebuilt, network-connected, and functioning hardware?

The big cloud platforms run data centers across physically distant locations around the world. They make it easy (and in some cases unavoidable) to replicate your data and applications in multiple locations so that, even if one data center goes down, the others will be fine. Can you reproduce that?
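A little arithmetic shows why replication matters so much. The sketch below assumes each location is up 99% of the time and that locations fail independently of one another. Both are simplifying assumptions of mine rather than figures from any provider, and real-world outages are rarely perfectly independent.

```python
def combined_availability(single_site, sites):
    """Chance that at least one of `sites` independent locations is up."""
    return 1 - (1 - single_site) ** sites

# 0.99 is an assumed per-site availability, chosen for illustration.
for n in (1, 2, 3):
    print(n, f"{combined_availability(0.99, n):.6f}")
```

Under those assumptions, each additional location cuts the chance of a total outage by a factor of a hundred.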

Cloud providers also manage content distribution networks (CDNs) allowing you to expose cached copies of frequently-accessed data at edge locations near to wherever on earth your clients live. This greatly reduces latency, improving the user experience your customers will get. Is that something you can do on your own?

One more thought. Most of the big investments into new IT technologies these days are being plowed into cloud ecosystems.

That's partly because the big cloud providers are generating cash far faster than they can hope to spend it. But it's also because they're involved in a live-or-die race to capture new segments of the infrastructure market before the competition claims them.

The result is that the sheer rate of innovation in the cloud is staggering.

I earn a living keeping a close eye on AWS, and even I regularly miss new product announcements. One of the reasons I avoid including screenshots of the AWS management console in my books and video courses is that their console is updated so often, the images will often be out-of-date before the book hits the streets.

In some cases, this might mean that local deployments will run at a built-in disadvantage simply because they won't have access to the equivalent cutting edge technologies.

The Risks of Outsourcing Your Compute Operations

Having said all that, as with most things in life, choosing between cloud and local isn't always going to be as obvious as I may have made it sound.

There may still be, for instance, laws and rules forcing you to keep your data local. There will also be cases where the math just doesn't work out: sometimes it really is cheaper to do things in your own data center.

You should also worry about platform lock-in. The learning curve necessary before you'll be ready to launch complex, multi-tier cloud deployments isn't trivial. And you can be sure that the way it works on AWS probably won't be quite the same as what's happening on MS Azure.

The knowledge investment you'll need to make once you make your choice will probably be expensive.

But what happens to that investment if the provider's policies suddenly change in a way that forces you off the platform? Or if they actually go out of business (this could happen: Kodak, Blockbuster Video, and Palm were once big, too)?

And what about getting locked out of your account for some reason? How hard would it be for you to retool and reload everything somewhere else?

Just think ahead and make sure you're making a rational choice.

Chapter 4: Understanding Digital Connectivity

Telephones changed the way we all talked to each other and went about our work (well, the way our great-grandparents did, at any rate). Information could now be communicated instantly, rather than being sent over slow, overland routes.

But that's hardly news to anyone these days. The modern network - best known as the internet - similarly boosted communication, although this time it was the movement of data rather than voice that got a boost.

In the fifty years or so since the birth of the internet, it's been trusted with the movement, storage, and management of more and more of our data. These changes have brought tremendous opportunities, risks, and pressures.

Just getting connected is now a basic necessity.

Managing all of our many connected devices and leveraging the ways we authenticate to extend our identities also present challenges. We'll discuss all that in this chapter.

Connecting to the Internet

These days, after food and shelter, one of the most basic resources of all is internet connectivity.

If you can't access the internet, you'll find it difficult to do your banking, educate yourself, book travel arrangements, or even figure out exactly where you are.

It's not for nothing that widespread, reliable, and relatively fast internet access is critical for a region's general economic development.

Even though the internet was originally built as a decentralized, distributed network of resources, you still need to establish some kind of connection to access it.

The best connections are run by network carriers, known as tier 1 networks. These networks can reach all other networks through a peering arrangement that doesn't require payment for IP transit.

You can think of these networks as the backbone of the internet, and their network infrastructure is its structure.

Examples of companies managing tier 1 networks include AT&T and Verizon in the US, Tata Communications (India), and Deutsche Telekom (Germany). Those carriers will resell bandwidth to smaller internet service providers (ISPs) who, in turn, sell access to end users like you and me.

Broadband Options

Individuals looking for broadband access in their homes or small businesses can usually choose one of four access models:

- Cable. Since they're already in the business of providing data to millions of homes over existing physical connections, cable TV providers can easily transmit internet over the same wires.

- Digital subscriber line (DSL). A family of technologies that permit digital data across copper telephone lines, DSL can provide a roughly similar level of service as cable, but without the need for an underlying cable subscription. In fact, using a "dry copper" connection, you don't even need a telephone landline account.

- Fibre optics. Due to some arcane technical details (including the laws of physics), transmitting digital signals as infrared light can happen faster and require fewer repeaters than comparable electrical cables. A fibre optics internet connection could typically deliver transfer speeds of 10-40Gbit/s - a thousand times faster than currently standard rates using cable or DSL.

- Satellite. Running new cable through densely populated cities is expensive, but companies can quickly make their money back through the many access contracts they'll sign.

But sparsely populated rural regions are much more difficult to service. Partly to fill a rural connectivity gap, a number of companies are ambitiously working to launch constellations of thousands of orbiting satellites to provide universal internet coverage.

As of this writing, SpaceX is furthest along with its project, having already successfully launched more than 500 satellites as part of the Starlink system.

Besides those dominant technologies, there have been more than a few alternate connectivity solutions attempted. Some are experimental but promising, and others are a bit more speculative.

Google's Balloon Internet (known officially as Loon LLC) is an attempt to float fleets of high-altitude balloons providing a 1 Mbps signal to anyone within range on the ground.

Loon is designed to provide low-end broadband in hard-to-reach regions where reliable service has been difficult or even impossible. As of 2020, the project seems to be in a late experimental stage.

Broadband over power line (BPL) can take advantage of the electrical grid that connects power authorities with homes and businesses to provide internet data.

Ultimately, the technology delivers limited bandwidth because line noise causes significant data signal loss. Data carrying power lines can also cause interference with high frequency radio communications.

In the end, relatively low signal quality and strong competition from other technologies mean that BPL will probably never be widely adopted.

Networks using forms of wireless Internet service provider (WISP) can service homes and offices across larger geographic areas without the need to physically wire every building.

Wired connections are installed in an area's center and, where necessary, connected backhauls are installed in elevated areas to strengthen the wireless signals aimed at consumers. Existing radio towers or other tall structures can be used for the backhaul repeaters, making a WISP system relatively inexpensive to install.

Smaller-scaled wireless network co-ops can be shared locally using a neighborhood internet service provider (NISP) (using rooftop antennas) or a wireless mesh network (where network-connected devices act as peer nodes) to efficiently share a single physical connection.

Those systems are primarily designed to serve us where we live and work. But mobile data access is definitely also a thing.

I'm sure you're already familiar with data plans that mobile phone companies can provide alongside their calling and texting services.

Mobile Phone Data Access

Cell connectivity is distributed through geographic areas (known as "cells") from individual radio transmitters spread throughout the cell.

Since the transmitters within each cell will use different radio frequencies than the cells around it, modern wireless technologies permit a seamless, automated "handover" as a user moves between cells.

The technologies used for wireless telephony have changed since the 80s, when what's now known as 1G ("First Generation") cell phones were introduced. To describe the evolution of cell phones in very general terms, we could say that:

- 1G phones carried only voice communications and had a maximum transfer speed of 2.4 Kbps.

- 2G phones could carry Short Message Service (SMS) and Multimedia Messaging Service (MMS) messaging, which could include short videos and images.

- 3G phones had much higher transfer rates (as high as 2 Mbps) than any variant of 2G and were therefore dubbed "mobile broadband."

- 4G phones could reach speeds as high as 100 Mbps, which permitted HD mobile TV, online gaming, and video conferencing.

- 5G phones - when used on compatible networks - are expected to reach transfer speeds of up to 20 Gbps at a very low latency, permitting fully immersive virtual environments.
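To get a feel for what those numbers mean in practice, here's a quick Python calculation of how long a one-gigabyte download would take at each of the peak rates quoted in the list above. It's an idealized figure: it assumes the link runs at its maximum rate the whole time, which real networks rarely manage. I've left out 2G because the list doesn't give a speed for it.

```python
# Peak rates in bits per second, as quoted in the list above.
PEAK_BPS = {
    "1G": 2.4e3,
    "3G": 2e6,
    "4G": 100e6,
    "5G": 20e9,
}

def transfer_seconds(size_bytes, bits_per_second):
    """Idealized transfer time: payload size in bits divided by the link rate."""
    return size_bytes * 8 / bits_per_second

ONE_GB = 1_000_000_000  # decimal gigabyte, an assumption for round numbers
for generation, rate in PEAK_BPS.items():
    print(f"{generation}: {transfer_seconds(ONE_GB, rate):,.1f} seconds")
```

That's a drop from more than a month at 1G speeds to well under a second at the speeds hoped for from 5G.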

Should the 5G rollout be successful (and, at the time of writing, this isn't yet clear), the range and limits of new service categories that could be deployed are not yet known.

When it comes to planning a new venture, it's long been the accepted wisdom that there's no replacement for solid market research.

Without knowing who your customers will be, where they live, and what they like, how can you properly serve them?

Well, now you can add to that list "how reliable and robust is their internet connectivity," because without digital access, they may never find you or be able to consume your service.

Talking to the Internet of Things

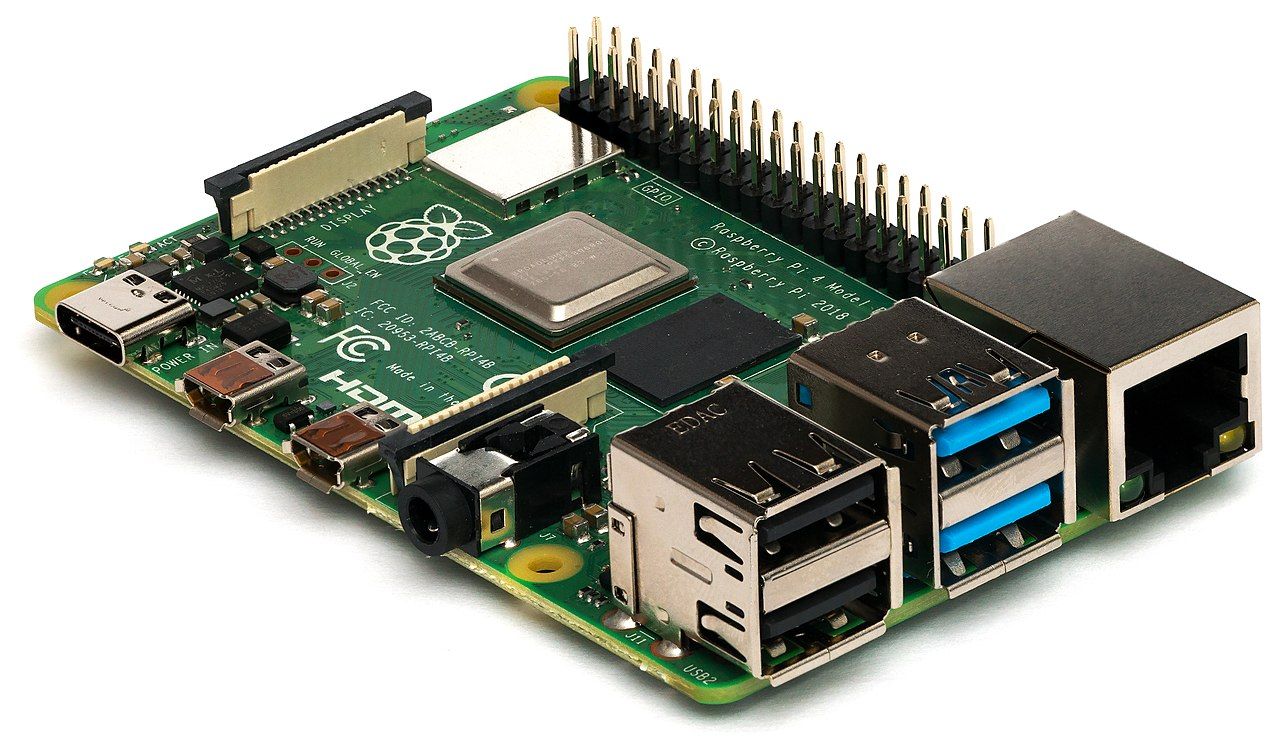

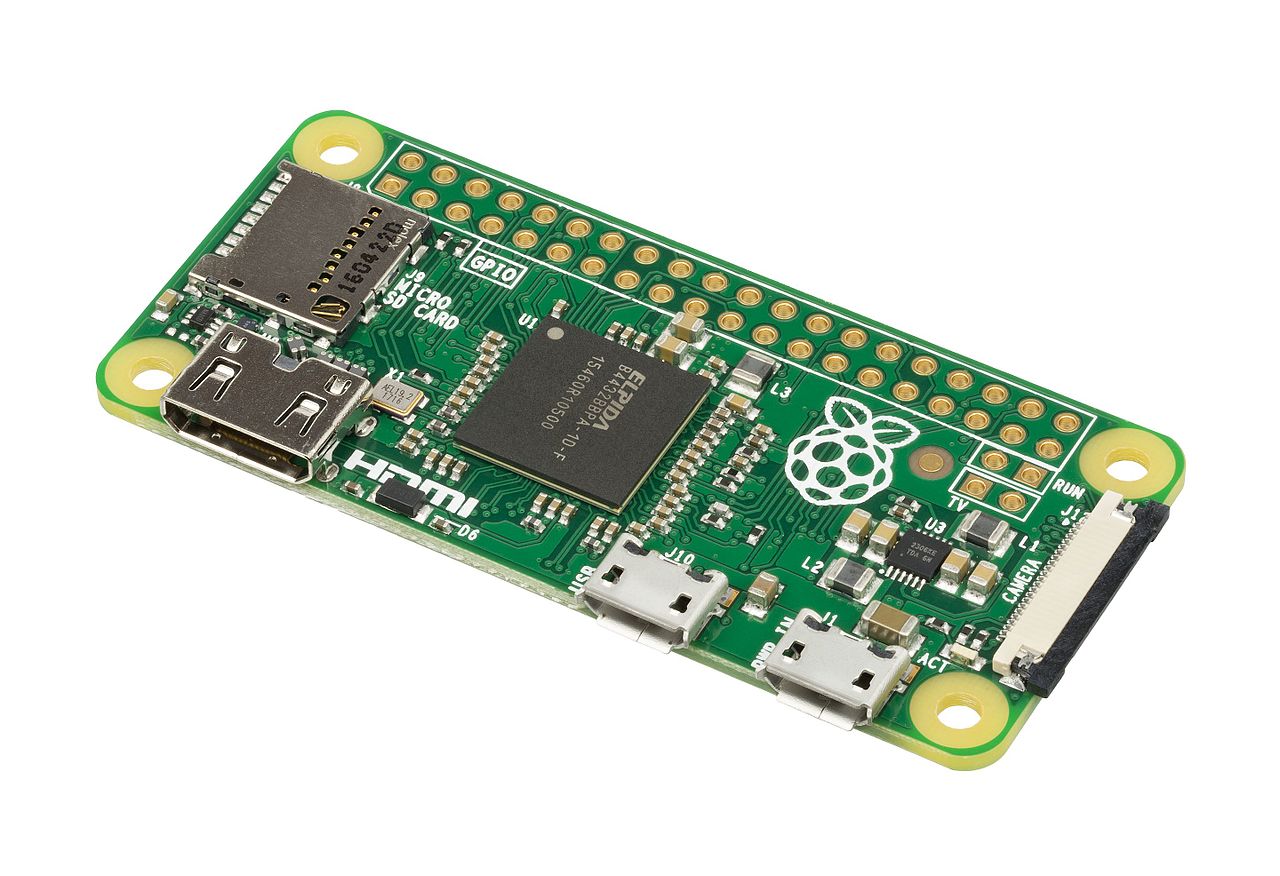

Two recent changes are, more than anything else, responsible for the internet of things (IoT) ecosystem: the availability of cheap, embedded, single-board computers (like the Raspberry Pi pictured in figure 4.1), and cheap and always-on internet connectivity.

Those tiny single-boards - often smaller than a credit card - are easy to incorporate into just about anything you're planning to manufacture. Such devices cost very little - sometimes just a few dollars apiece - and they're generally built to run fully-powered (and free) Linux distributions.

And network availability means that the vast streams of data generated by all those on-board cameras, sensors, and other peripherals, can be automatically sent back "home" for processing and analysis.
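As a rough illustration of what sending data "home" can look like, here is a minimal Python sketch of a device packaging one sensor reading as JSON. The device name, the field names, and the simulated temperature sensor are all hypothetical; a real device would read actual hardware and transmit the report over the network rather than print it.

```python
import json
import random
import time


def read_sensor() -> float:
    """Stand-in for a real sensor driver: a simulated temperature in Celsius."""
    return round(random.uniform(18.0, 25.0), 2)


def build_report(device_id: str) -> str:
    """Package one reading as a JSON string ready to send to a remote server."""
    report = {
        "device_id": device_id,
        "timestamp": int(time.time()),
        "temperature_c": read_sensor(),
    }
    return json.dumps(report)


# A real device would transmit this (for example, over HTTP or MQTT);
# printing keeps the sketch self-contained.
print(build_report("greenhouse-sensor-01"))
```

Multiply one small report like this by thousands of devices reporting every few seconds and you get the "vast streams of data" that need automated processing.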

The Dream of IoT

Here are some ways that IoT applications are already actively changing business and consumer practices:

- Inventory control. The very first IoT device was - arguably at least - a Coca-Cola vending machine at Carnegie Mellon University. Back in the early 80s, the machine was modified to digitally report its ongoing inventory.

The simple idea that physical devices can monitor themselves and their surroundings, providing accurate, up-to-the-minute status reports to remote servers lies at the heart of countless modern industrial solutions.

Modern retail, wholesale, logistics, and manufacturing operations now have constant access to their inventories, allowing them to understand trends and anticipate problems.

- Agriculture. Increasingly, modern farming incorporates robotic irrigation, fertilization, planting, and even harvesting technologies. All those robots running around your property are generating data and, from time to time, getting themselves into trouble.

Moving that data "back" to administration servers is critical for keeping track of what's going on, what might need fixing, and how your actual farm is performing.

You can, therefore, expect that each of those devices will be part of someone's IoT.

- Military. Communication is key for military operations. But if even weapons, vehicles, and other equipment can communicate autonomously, and if there are servers dedicated to interpreting and acting on that communication, then you're already way ahead of the game.

Sensors connected to each of hundreds of components for, say, a fighter jet, can contribute to giving planners an unprecedented view of what's really going on.

- Smart cities. When sensors embedded in buildings, roads, public lighting, smartphones, and electrical systems are combined with data coming from traffic cameras and public departments, the potential for data-driven insights is huge.

Properly understood data can help cities manage their resources, utilities, and even traffic more efficiently, and better maintain their physical infrastructure.

- Smart homes. On a far smaller scale than smart cities, home appliances can be connected, monitored, and controlled through smartphone apps or remote servers.

Smart home devices already include heating and cooling systems, light bulbs, robotic vacuum cleaners, garage doors, and security systems. These devices can be controlled through phone apps but, in many cases, also through voice-controlled devices like Amazon Echo (Alexa).

Conversations about IoT are always just one step away from buzzwordism - where words lose meaning and exaggeration becomes an acceptable lifestyle choice.

Not all IoT stuff is actually IoT. Or, to put it another way, not all IoT is worth talking about.

But here's one good way to categorize a particular technology: if, hour after hour, something generates more data than any human being could possibly absorb, then it's probably an IoT device.

Effectively dealing with all that data can be a problem. And that's not the only potential for trouble in IoT land.

The Nightmare of IoT

In the information technology world, as a general rule, the more active network connections you have in your infrastructure, the greater your risk of being successfully attacked.

That's because successful hacker intrusions usually come through badly configured or unpatched devices. The more public-facing devices you're exposing, the greater the chance one of them will have a serious vulnerability.

What kind of vulnerabilities are we talking about?

Well, the US government's Common Vulnerabilities and Exposures database contains nearly 140,000 individual entries, each one representing a unique software weakness that could allow unauthorized access to and destruction of an IT system.

There are threats impacting all operating systems (Windows, Linux, macOS), all formats (server, PC, smartphone), and all ages (there are active threats going back to the 1990s).

And many hundreds of new entries are added each month.

In that sense, IoT devices are no different than any other kind of computer. But there is one way that they're a whole lot worse.

Because you don't normally interact directly with IoT devices on an OS level, and because they're often commodity items that are purchased and deployed by the dozens, or even thousands, you don't instinctively treat them like computers.

Most of us, as an example, are aware that we should create complex and unique passwords for our laptops and WiFi routers.

But your fridge? Just plug it in and it'll be fine!

The problem is that many IoT devices - like "smart" fridges - have their own embedded operating systems and, usually, their own network interfaces.

There's a good chance that anyone driving down your quiet residential street can scan for available networks, and quickly identify the brand of IoT device you're using. They can then assume that you haven't changed the authentication credentials from their factory defaults, and log in to your private network.

What makes things much worse is that many device manufacturers are still shipping their products with authentication credentials using some variation of admin/admin.

That's a big problem.
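One simple defense is to audit your own devices for factory default logins before anyone else does. Here is a minimal Python sketch of that idea. The list of default credentials and the device inventory are both hypothetical examples, not data from any real product.

```python
# Hypothetical list of well-known factory default logins.
FACTORY_DEFAULTS = {
    ("admin", "admin"),
    ("admin", "password"),
    ("root", "root"),
}

# Hypothetical inventory of devices on a home or office network.
devices = [
    {"name": "smart-fridge", "username": "admin", "password": "admin"},
    {"name": "wifi-router", "username": "admin", "password": "T7#qLm92!vXe"},
    {"name": "garage-door", "username": "root", "password": "root"},
]


def uses_default_credentials(device: dict) -> bool:
    """Return True if the device still has a known factory default login."""
    return (device["username"], device["password"]) in FACTORY_DEFAULTS


# Collect the names of devices that need their credentials changed.
flagged = [d["name"] for d in devices if uses_default_credentials(d)]
print(flagged)
```

In this made-up inventory, the fridge and the garage door are flagged, while the router with its unique password is not.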

Leveraging Federated Identities

All this talk about the dangers presented by authentication and credentials should make you curious about how they can also be put to good use for connectivity.

In a single word, that'd be federation.

Identity federation is a technology for linking a single person's identity across multiple network services. Federation is what lets you log in to online gaming or web application sites using, say, your Google account credentials.

The upside of federation is that a single login can be all you'll need as you move between many of the online services you regularly use. That lets you reduce the risk of exposing your password through a vulnerable website.

Of course, it also increases the damage that can come from a serious data breach of the servers used by your federation provider.

Federation can be used to integrate with third-party single sign-on (SSO) authentication systems, like Kerberos, the Lightweight Directory Access Protocol (LDAP), and Microsoft's Active Directory (AD). When used with cloud services, SSO systems can securely permit automated as-needed access to private account resources for consumers or processes.
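The core idea behind federation, that a service trusts a signed statement from an identity provider instead of handling your password itself, can be sketched in a few lines of Python. This is a toy illustration only: the shared secret, the token format, and the function names are all assumptions made for the example, and real federation protocols (such as OpenID Connect or SAML) are considerably more involved and typically rely on public-key signatures.

```python
import base64
import hashlib
import hmac
import json

# Assumption: the identity provider and the service share this secret.
# Real federation protocols typically use public-key signatures instead.
SHARED_SECRET = b"demo-secret-do-not-reuse"


def issue_token(user_email: str) -> str:
    """Identity provider: sign a statement about who the user is."""
    payload = base64.urlsafe_b64encode(json.dumps({"email": user_email}).encode())
    signature = hmac.new(SHARED_SECRET, payload, hashlib.sha256).hexdigest()
    return f"{payload.decode()}.{signature}"


def verify_token(token: str):
    """Service: return the asserted email if the signature is valid, else None."""
    payload, _, signature = token.rpartition(".")
    expected = hmac.new(SHARED_SECRET, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        return None
    return json.loads(base64.urlsafe_b64decode(payload))["email"]


token = issue_token("pat@example.com")
print(verify_token(token))        # valid token: the service learns the email
print(verify_token(token + "0"))  # altered token: rejected
```

Notice that the service never sees a password. It only checks that the token really came from the provider it trusts, which is also why a breach at that provider is so damaging.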

Besides convenience, all this authentication goodness drives opportunities for secure remote collaboration on large, complex projects - itself a fast-growing trend.

Chapter 5: Understanding the Business of Technology Research

Getting a new technology out to consumers will usually require good people and boatloads of resources - including money. Generally, lots of money.

A lot of that money will be spent on research and, more often than not, the hard research needed to translate a great idea into a usable product will be performed by someone whose job title isn't "entrepreneur."

In fact, sometimes the research will be done by individuals who are barely aware that their innovations have any commercial value at all.

If you're here because you want to get the jump on cutting edge technologies, then you may want to keep an eye on the organizations that are known to produce practical research. Knowing who's big in research, who's funding it, and where the big bucks are being spent can give you useful insights into what might be coming next.

From there, you're just a step away from, say, spending time learning the tools that'll come with the new tech or positioning yourself to profit when it finally shows up.

Who Funds Commercial Science and Why?

Once upon a time, major breakthroughs in serious scientific research were the products of private patronage. The Italian Medici family, for instance, famously supported many individuals whose work would prove pivotal, including Leonardo da Vinci and Galileo.

However, the years leading up to the Second World War saw the scope and complexity of research projects growing far beyond the capacity of private support. The war's dependence on unprecedented technological complexity - exemplified by the work of the Manhattan Project building the atom bomb - pushed more and more research under government charge.

Government involvement in research has continued in the generations since the war. Still, it's been estimated that universities and governments are responsible for only 30% of research funding between them, with most of the rest provided by private industry (see en.wikipedia.org/wiki/Funding_of_science).

Let's see how that breaks down.

Taxpayers

Democratic governments, of course, don't spend their own money, of which they traditionally have none.

Their many programs and services are funded by revenues raised, one way or another, from their capital assets and from their populations. In modern nation states, "populations" would mean those individuals and corporations who pay taxes.

Public research and development can be performed within government agencies. According to the terms of some agency mandates, research results must immediately enter the public domain.

But even agencies that retain rights to their research will often point their work towards businesses and institutions that can use it productively.

The US National Science Foundation (NSF), for instance, uses its $8 billion annual budget to fund "approximately 25 percent of all federally supported basic research conducted by America's colleges and universities" (https://www.nsf.gov/about/).

Other American agencies do much or all of their research in-house. Here are some examples:

- The National Institute of Standards and Technology (NIST) has a mandate to "promote innovation and industrial competitiveness."

One very important part of that mission is maintaining the National Vulnerability Database (NVD), which plays a foundational role in the management of the vulnerability assessment and detection systems protecting our IT infrastructure.

- The US military's Defense Advanced Research Projects Agency (DARPA) collaborates with private and public sector partners to aid in the development of emerging technologies.

Work in recent years has included research into robotics and autonomous vehicles, but you might be more familiar with a DARPA innovation from a few decades ago: the internet.

- The National Institutes of Health (NIH) employs 6,000 research scientists across 27 research institutes and centers.

Their "mission is to seek fundamental knowledge about the nature and behavior of living systems and the application of that knowledge to enhance health, lengthen life, and reduce illness and disability."

The complete list of US government research agencies (available at en.wikipedia.org/wiki/List_of_United_States_research_and_development_agencies) makes for quite a read. Take a look for yourself.

Naturally, governments of other countries have their own research agencies. One example is Canada's National Research Council (NRC), which has evolved from its military technology origins through the two world wars, to its current focus on partnerships with private and public-sector technology companies.

The NRC now divides its work into four "business lines:"

- Strategic research and development

- Technical services

- Management of science and technology infrastructure

- NRC-Industrial Research Assistance Program (IRAP)

As we mentioned when discussing the NSF, a significant proportion of taxpayer funds directed towards research and development are granted to public and private colleges and universities.

But, from the college perspective, how much academic R&D funding comes from government sources?

A 2016 review of the 20 US colleges that spent the most on R&D found that they each spent between $837 million and $2.4 billion, and that approximately 47% to 87% of their total spending came from government sources of one sort or another (see bestcolleges.com/features/colleges-with-highest-research-and-development-expenditures/).

By contrast, businesses provided only between 2% and 22% of that funding.

Private Charitable Funding

While we're on the subject of academic research, we shouldn't ignore a third source of funding: private endowments.

Some - although not all - permanent endowments were targeted by their donors at research activities. Although the fund capital can't be spent each year, the income that capital generates can.

Harvard University famously - or perhaps infamously - has a total endowment greater than 40 billion dollars. Some of that undoubtedly finds its way to R&D.

Curiously, according to that 2016 study, Harvard's total R&D spending that year - including activities funded by governments (52.1%), businesses (4.7%), and endowments - was just over one billion dollars.