When you're starting your coding journey, one of the most exciting – and at times overwhelming – things about it is just how much there is to learn.

You'll discover different languages, frameworks, libraries, inventions and conventions.

One technology often requires knowledge of another one, and everything seems to be interconnected and intertwined.

With new technologies coming out so often in such a fast-paced and ever-changing industry, things can quickly get confusing for beginner coders.

When learning how to code, instead of just focusing on learning a specific technology, it can also help to learn the foundations – the building blocks – and to peel back the layers of abstraction to get to know the underlying principles that all these technologies have in common.

Understanding what coding is at a fundamental level will make solving problems easier and will give you a better understanding of how these technologies work under the hood.

In this article we will learn what coding is so you have a solid foundation on which to build.

How Do Computers Work?

Computers, when powered off, are just costly electronic machines. They're objects consisting of a bunch of metals, plastics, and other materials.

However, once you press the power button and they have gone through their power-up process, called booting up, they come to life.

Your computer turns into an extremely powerful machine: an electronic device that can carry out complicated tasks at mind-boggling speeds, tasks that would be difficult, if not impossible, for humans to do.

Their screens are vibrant and active and there's a variety of buttons and icons ready to be clicked.

Computers and Electricity

Computers are powered by electricity and rely on it to function.

Inside a computer, electricity is treated as having only two states: it is either on or off.

When electricity is turned on and flowing, that represents true, the 'on' state. When it's turned off and not flowing, that represents false, the 'off' state.

It can only ever be in one of these states at any point in time.

These two states are called binary states, the prefix bi- meaning two.

What is Binary Code?

This concept of electrical charges and the existence of only two possible states ties in nicely with the numerical system computers use in their hardware. They use it to complete every single task they are given. It's called binary code and is made up of sequences of 0s and 1s.

By design, binary code corresponds directly to specific machine instructions, commands, and locations in the computer's memory. The computer reads and interprets these instructions and then carries out the corresponding tasks.
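To make "sequences of 0s and 1s" a little more concrete, here is a minimal Python sketch (Python is used only for illustration) that writes an ordinary number in binary and reads it back. The helper names `to_binary` and `from_binary` are made up for this example; Python's built-in `bin()` and `int(..., 2)` do the same job.

```python
def to_binary(n: int) -> str:
    """Write a non-negative integer as a string of 0s and 1s."""
    if n < 0:
        raise ValueError("only non-negative integers are handled in this sketch")
    if n == 0:
        return "0"
    bits = []
    while n > 0:
        bits.append(str(n % 2))  # the remainder is the next bit, lowest first
        n //= 2
    return "".join(reversed(bits))


def from_binary(bits: str) -> int:
    """Read a string of 0s and 1s back as an integer."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift everything one place left, then add the new bit
    return value


print(to_binary(13))            # 1101
print(from_binary("1101"))      # 13
print(bin(13), int("1101", 2))  # 0b1101 13
```

Each position is worth twice the one to its right (1, 2, 4, 8, ...), so 1101 means 8 + 4 + 0 + 1 = 13.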

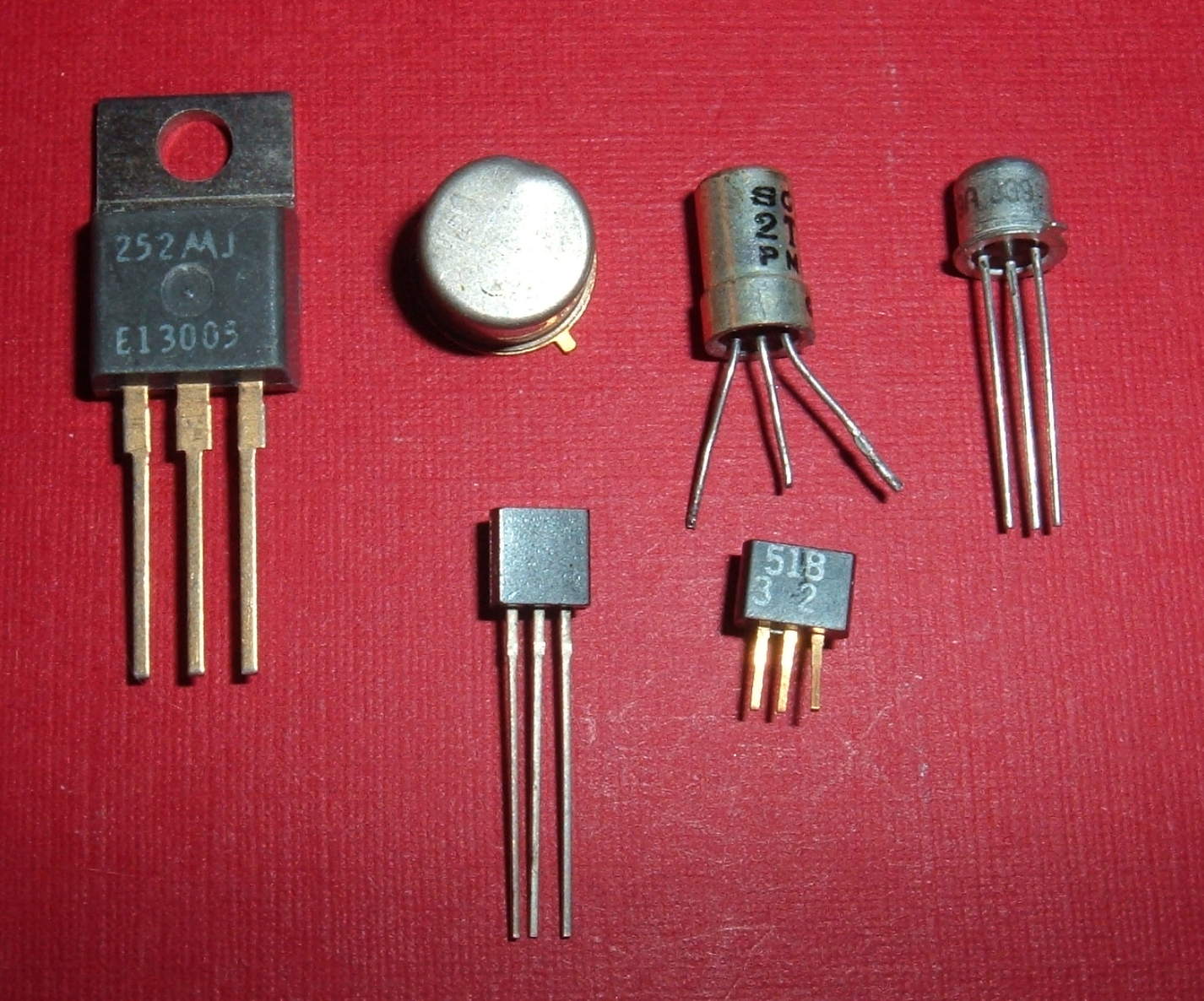

Computers contain an enormous number of tiny physical devices, called transistors, that act as electrical switches. A single modern processor can contain billions of them.

These little electrical hardware components, the transistors, enable or disable the flow of electricity.

Depending on their state, they either let electric current pass through or block it.

Each of these tiny switches can be either on or off.
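Because each switch has only two states, the number of distinct on/off patterns doubles with every switch you add. This short Python sketch uses the standard library's `itertools.product` to list every pattern for three switches; the count of three is an arbitrary choice for illustration.

```python
from itertools import product

# Each switch is either off (0) or on (1); list every combination of 3 switches.
patterns = list(product([0, 1], repeat=3))
for pattern in patterns:
    print(pattern)

print(len(patterns))  # 8, which is 2 ** 3
print(2 ** 8)         # 256 distinct patterns from just 8 switches
```

With n switches there are 2 ** n possible patterns, which is why even a modest number of bits can represent a large range of values.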

How Binary Works

You may have heard that ‘Computers work in 1s and 0s’, but what does that actually mean? We might not deal with binary code directly anymore, but it is the only thing the computer's CPU understands.

Are there literally 0s and 1s stored in our physical devices flowing and moving around? Not really.

However, our CPUs consist of many microscopic digital circuits that carry information.

The transistors come together to form these circuits, and the tiny electrical signals passing through them are switched either on (1) or off (0). In other words, a signal can only take one of two voltage levels, high or low. Different combinations of these high and low signals represent different values, which correspond to different instructions being carried out or calculations being performed.

When these electrical signals from the transistor circuits and other electrical components are linked and combined in particular ways, they make possible the wide range of tasks and operations a computer can perform.
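One classic way to see how combining simple on/off signals produces useful operations is the adder circuit. The Python sketch below models logic gates as functions on the bits 0 and 1 and wires them into a half adder, a full adder, and a simple ripple-carry adder. It is a simulation for illustration only, not a description of how any particular processor is built, and the function names are hypothetical.

```python
def and_gate(a: int, b: int) -> int:
    return a & b  # 1 only when both inputs are 1


def or_gate(a: int, b: int) -> int:
    return a | b  # 1 when at least one input is 1


def xor_gate(a: int, b: int) -> int:
    return a ^ b  # 1 when exactly one input is 1


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Add two single bits; return (sum_bit, carry_bit)."""
    return xor_gate(a, b), and_gate(a, b)


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Add two bits plus an incoming carry, using two half adders and an OR gate."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, or_gate(c1, c2)


def add_bits(x: list[int], y: list[int]) -> list[int]:
    """Add two equal-length bit lists (most significant bit first), ripple-carry style."""
    result = []
    carry = 0
    for a, b in zip(reversed(x), reversed(y)):  # start from the lowest bit
        s, carry = full_adder(a, b, carry)
        result.append(s)
    result.append(carry)  # a final carry becomes an extra top bit
    return list(reversed(result))


print(half_adder(1, 1))                      # (0, 1): 1 + 1 is binary 10
print(add_bits([0, 1, 1, 0], [0, 0, 1, 1]))  # [0, 1, 0, 0, 1]: 6 + 3 = 9
```

Nothing in the sketch "knows" arithmetic: addition emerges purely from how the gates are connected.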

Computers and Their Relationship with Humans

Binary machine code is the only language that computers can directly understand and make sense of.

Binary code can vary from one computer to another, depending on the kind of processor it has. At this level of programming there is no portability: a program written in one machine's binary code can't simply be transferred to a system with a different kind of processor and run there.

Limitations of Machine Languages

Machine languages vary depending on the processor they are written for, because each family of processors has its own instruction set.

This is, of course, very limiting.

Machine code, or binary code, may run very fast: it is the computer's native language, and it is extremely efficient because the instructions are executed directly by the CPU. But it's a dull, monotonous, and extremely error-prone way to use a computer.

Programming a computer by manually typing out every instruction in binary is a cumbersome process.

An error made while directly managing the computer's data storage and operations is also very hard to track down and fix.

Machine-level languages are hard for humans to read, write, learn and understand. So programmers and computer scientists found a better and arguably easier way to solve problems.

The things a computer can do by itself are on a very primitive level and are limited in scope.

They are good at performing arithmetic calculations like adding numbers or checking if a number is equal to zero.

The Human Element in Computing

Humans created these machines that have revolutionized our way of life, but when it comes down to it, computers are really not that smart and have limited capabilities by themselves.

They only do exactly what they are told to do. They don’t make assumptions or have any common sense like humans do.

Computers at their core are machines that perform mathematical operations. But they are also good at displaying some text on the screen or repeating a task over and over again.

Those operations are basic and don't go much further than that. The set of basic operations a particular processor supports is known as its instruction set.
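To illustrate how a handful of primitive operations can add up to something useful, here is a toy machine in Python. Its instructions (`LOAD`, `ADD`, `JUMP_IF_ZERO`, `JUMP`, `PRINT`, `HALT`) are invented for this sketch; real instruction sets such as x86 or ARM are far larger and are encoded in binary rather than as text.

```python
def run(program):
    """Execute (instruction, argument) pairs using a single register, 'acc'."""
    acc = 0      # accumulator: the machine's only working register
    pc = 0       # program counter: index of the next instruction to run
    output = []
    while True:
        op, arg = program[pc]
        pc += 1
        if op == "LOAD":            # put a number into the register
            acc = arg
        elif op == "ADD":           # arithmetic: add a number to the register
            acc += arg
        elif op == "JUMP_IF_ZERO":  # check whether the register equals zero
            if acc == 0:
                pc = arg
        elif op == "JUMP":          # continue from another instruction
            pc = arg
        elif op == "PRINT":         # "display" the register's current value
            output.append(acc)
        elif op == "HALT":
            return output
        else:
            raise ValueError(f"unknown instruction: {op}")


# Count down from 3, printing each value: a loop built only from the basics above.
countdown = [
    ("LOAD", 3),          # 0
    ("JUMP_IF_ZERO", 5),  # 1: stop once the register reaches zero
    ("PRINT", None),      # 2
    ("ADD", -1),          # 3
    ("JUMP", 1),          # 4: go back and check again
    ("HALT", None),       # 5
]
print(run(countdown))  # [3, 2, 1]
```

The machine can only add, check for zero, jump, and "display" a value, yet those pieces are enough to repeat a task over and over.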

Even though at their core computers can only do very basic tasks, they are able to perform extremely complex actions and follow and execute the instructions of the programs they are given. This is thanks to the many layers of abstraction built on top of those basic operations.

The true power, however, lies in the hands of humans. Whatever we want to achieve and whatever we imagine, we can use this machine as a tool to do complicated calculations, conduct research to find and extract a document among billions of other documents, or keep in touch with friends and family far away.

Whatever we can think of, we can now create it by coding a program.

Computers and programs can improve our lives collectively all around the world. But how do we make them do what we want?

What is computer programming?

Computer coding and computer programming are terms that are often used interchangeably. They do have some differences though.

Programming vs coding

Programming means telling a computer what to do and how to do that thing you told it to do.

It involves providing well thought-out, methodical instructions for your computer to read and execute.

You have to break down large tasks into smaller ones. And you keep repeating that process of breaking something down into smaller tasks until you reach a point where you don’t need to tell the computer what to do anymore – it already knows how to do that task.
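Here is a small Python sketch of that breaking-down process, using a hypothetical task: averaging a list of test scores. The big task is split into "total the scores" and "count the scores", and those are split further until only steps the computer already knows how to do (such as adding two numbers) remain. Python's built-in `sum()` and `len()` would normally handle this; they're spelled out here to show the decomposition.

```python
def total(scores):
    """Add up all the scores, one addition at a time."""
    result = 0
    for score in scores:
        result = result + score  # addition: something the computer already knows how to do
    return result


def count(scores):
    """Count how many scores there are."""
    n = 0
    for _ in scores:
        n = n + 1
    return n


def average(scores):
    """The big task, expressed in terms of two smaller ones."""
    return total(scores) / count(scores)


print(average([80, 90, 70]))  # 80.0
```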

The essence of programming is the process of problem solving, complex thinking, attention to detail, and reasoning – all using a computer.

Programming involves thinking through all the different steps a user could take and considering all the different things that could go wrong. Once you've thought through the potential problems a user may encounter, you have to find solutions for them before you code anything.
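As a minimal sketch of planning for what could go wrong, suppose a program asks a user for their age. The Python function `parse_age` is hypothetical, and its rules (whole numbers only, between 0 and an arbitrary upper limit of 150) are assumptions chosen for illustration.

```python
def parse_age(text: str) -> int:
    """Turn what a user typed into an age, rejecting input that doesn't make sense."""
    cleaned = text.strip()
    if not cleaned:
        raise ValueError("no age was entered")
    try:
        age = int(cleaned)
    except ValueError:
        raise ValueError(f"{cleaned!r} is not a whole number") from None
    if age < 0 or age > 150:  # 150 is an arbitrary upper limit for this sketch
        raise ValueError(f"{age} is not a realistic age")
    return age


for attempt in ["42", "  7 ", "", "forty", "-3", "200"]:
    try:
        print(repr(attempt), "->", parse_age(attempt))
    except ValueError as err:
        print(repr(attempt), "-> rejected:", err)
```

Each `if` and `except` corresponds to one problem thought of in advance: an empty answer, text instead of a number, and a number that can't be a real age.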

We can think of problem solving as taking an input (the information and details about our problem that we want to solve) and generating an output (the end goal or the solution to our problem).

The steps that turn an input into an output can be complicated, and a computer can carry out millions of them every second.

Problem Solving with Algorithms

When you're problem solving using a computer, you need to express the solution to that problem according to the instruction set of the computer.

For that purpose, we use algorithms – a systematic approach to solving problems.

An algorithm is an idea or method expressed as a concise and precise set of rules and step-by-step instructions. The computer needs to follow these instructions in order to solve the problem.

Algorithms don't only apply to computers, though. They are machine independent.

We humans follow algorithms too – sets of instructions for completing tasks in our daily lives.

Some examples could be:

- counting people in a room

- doing arithmetic calculations

- trying to figure out the correct route to take to reach a particular destination

- following a cooking recipe

In the last example, we can think of the recipe as the instructions that we use, and we're the computer that has to read and then execute them correctly.

An algorithm is a plan that presents the steps you need to follow in order to get a desired result.
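The route-finding example from the list above can be turned into a precise algorithm. This Python sketch uses breadth-first search, one standard algorithm for finding a route with the fewest stops; the map and place names are made up, and real navigation apps typically use more sophisticated methods that also account for distance and traffic.

```python
from collections import deque


def fewest_stops(graph, start, goal):
    """Breadth-first search: return a route from start to goal with the fewest stops, or None."""
    queue = deque([[start]])  # each entry is a route explored so far
    visited = {start}
    while queue:
        route = queue.popleft()
        place = route[-1]
        if place == goal:
            return route
        for neighbor in graph.get(place, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(route + [neighbor])
    return None  # no route exists


# A small, made-up map: which places connect directly to which.
city = {
    "home": ["bakery", "park"],
    "bakery": ["library"],
    "park": ["library", "school"],
    "library": ["school"],
    "school": [],
}
print(fewest_stops(city, "home", "school"))  # ['home', 'park', 'school']
```

Breadth-first search explores every place one stop away, then two stops away, and so on, which is why the first route it finds to the goal has the fewest stops.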

How Computers Use Algorithms

When it comes to computers, algorithms need to be precise as computers take everything literally. They don’t read between the lines or make any assumptions.

There is no room for ambiguity, so algorithms not only need to be precise but also organized, correct, free from errors, efficient, and well-designed. All this helps minimize the time and effort the computer needs to spend to complete a task.
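A quick way to see how literally a computer follows instructions is to ask Python to sort some words. This sketch shows that an instruction that seems clear to a human, like "sort these", has to be stated more precisely to get the result a person would expect; the word list is arbitrary.

```python
fruits = ["banana", "apple", "Cherry"]

# "Sort these" taken literally: strings are compared character by character
# by their code points, and every uppercase letter comes before every lowercase one.
print(sorted(fruits))                 # ['Cherry', 'apple', 'banana']

# Stating precisely what we mean: compare the words while ignoring case.
print(sorted(fruits, key=str.lower))  # ['apple', 'banana', 'Cherry']

# The same literalness applies to numbers versus text.
print(2 + 3)      # 5
print("2" + "3")  # 23 (joining two pieces of text, not adding numbers)
```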

Computers execute algorithms mechanically, without any understanding of what each step is for. So the algorithms we give them must work in exactly the way we intend.

A computer program is a collection of those instructions – or algorithms – in a text file which serves as an instruction manual.

It describes a very precise sequence of steps for the computer to follow. The computer's processor executes those instructions to perform a particular task, and you get the desired result in the end.

Aside from the thinking, research, design, and in-depth planning, programming also involves testing, debugging, deployment, and maintenance of the finished result.

When you're developing a program to solve a particular problem, you typically express the idea for the solution as an algorithm. Then you code the program by implementing that algorithm in a programming language: a language with a particular syntax that humans can read and write, and that can be translated into instructions the computer understands.
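As an illustration of going from an algorithm to code, here is a hypothetical task, finding the largest number in a list, with each step of the algorithm written as a comment and then implemented in Python. Python's built-in `max()` already does this; the sketch assumes the list is not empty.

```python
def largest(numbers):
    """Return the largest number in a non-empty list."""
    # Step 1: treat the first number as the largest seen so far.
    best = numbers[0]
    # Step 2: look at every remaining number, one at a time.
    for n in numbers[1:]:
        # Step 3: if this number is bigger, remember it instead.
        if n > best:
            best = n
    # Step 4: once every number has been checked, report the one remembered.
    return best


print(largest([3, 17, 8, 17, -2]))  # 17
```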

This is where the actual coding comes in.

What is Computer Coding? A Simple Definition.

Coding is the process of transforming ideas, solutions, and instructions into the language that the computer can understand, that is, binary machine code.
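You can peek at one layer of this translation in Python. CPython, the standard Python implementation, does not turn your code directly into the CPU's binary machine code; it first compiles it into bytecode, which its interpreter then executes. The sketch below uses the standard library's `dis` module to show that bytecode for a tiny, hypothetical `add` function. The exact instructions and byte values differ between Python versions, so no specific output is listed for them.

```python
import dis


def add(a, b):
    """A tiny function written in human-readable Python."""
    return a + b


# CPython compiles the function body into bytecode: raw bytes that its
# interpreter steps through.
raw = add.__code__.co_code
print(type(raw))  # <class 'bytes'>
print(list(raw))  # the bytecode as a list of small integers (version-dependent)

# dis prints the same bytecode as named instructions (also version-dependent).
dis.dis(add)
```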

Coding is how humans are able to talk with computers.

Coding involves communicating and giving instructions for different actions we want our computers to perform using a computer programming language.

Programming languages, like JavaScript, Java, C/C++, or Python, act as the translator between humans and machines.

These languages bridge the communication gap between computers and humans by representing, expressing, and putting algorithms into practice. They do this using a specific sequence of statements that machines understand and can follow.

Programming languages are similar to human languages in the sense that they are made up of basic syntactical elements like nouns, verbs, and phrases. And you group these elements together to form something that resembles a sentence to create meaning.

Many of these languages borrow keywords from English, so their code can look a lot like English. But they offer a shorter, more precise, and less verbose way to create instructions that the computer can understand.
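For example, this short Python snippet (the pantry and shopping list are made up) reads almost like an English sentence, yet every word has one exact meaning to the computer.

```python
pantry = {"eggs", "flour"}
shopping_list = ["eggs", "milk", "bread"]

to_buy = []
for item in shopping_list:   # "For each item on the shopping list,"
    if item not in pantry:   # "if the item is not in the pantry,"
        to_buy.append(item)  # "add it to the things to buy."

print(to_buy)  # ['milk', 'bread']
```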

A spoken/natural language like English, on the other hand, leaves a lot of room for ambiguity and different interpretations from different people.

Programming languages are a set of rules that define how you write computer code.

We use computer code to create all the web applications, websites, games, operating systems, and all the other software programs and technologies we use on a daily basis.

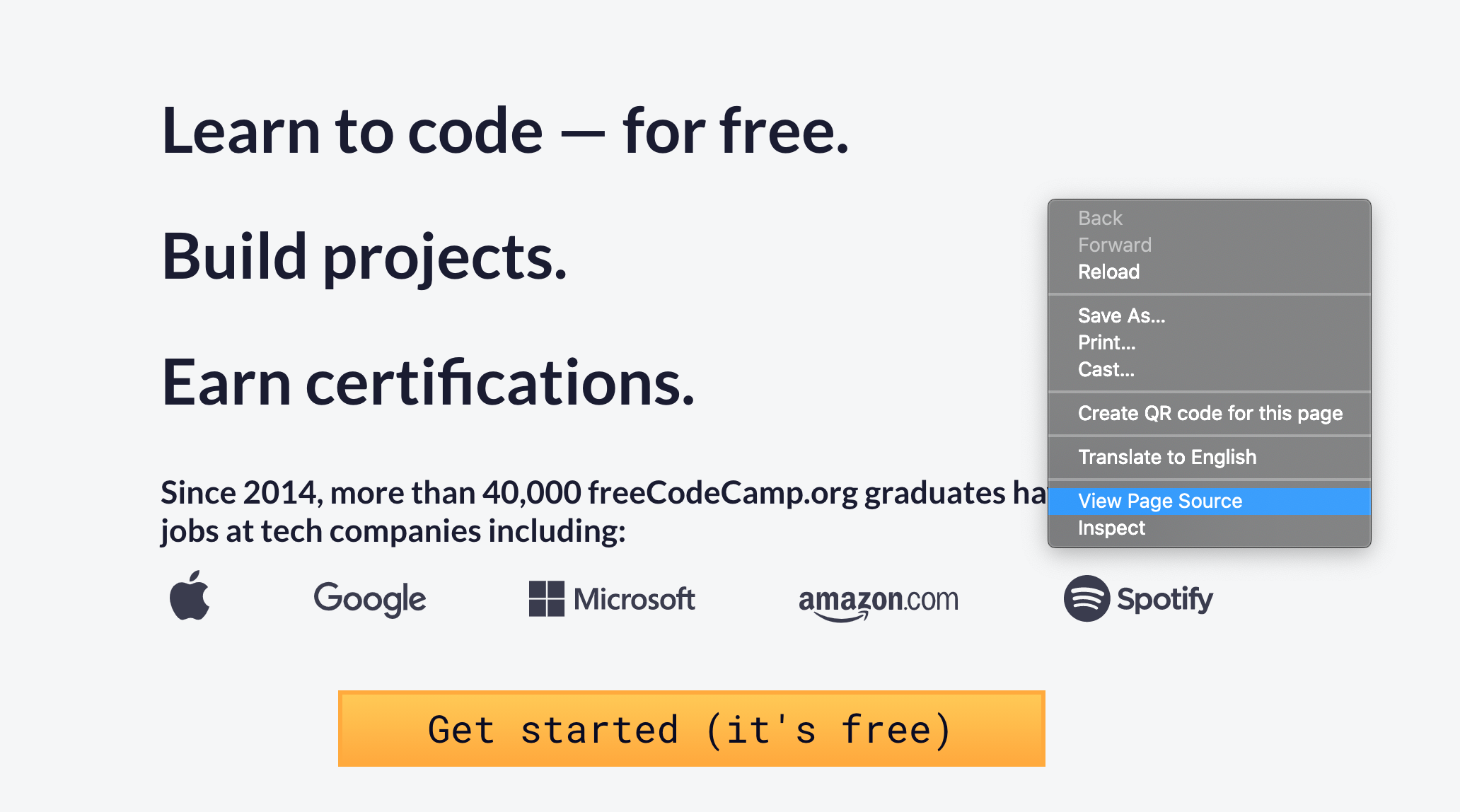

You can look at the code that makes up your favorite websites by right-clicking the page (or Control-clicking with your touchpad or mouse on a Mac) and selecting View Page Source (or Inspect) from the menu that pops up. On a Mac, you can also use the shortcut Option + Command + U:

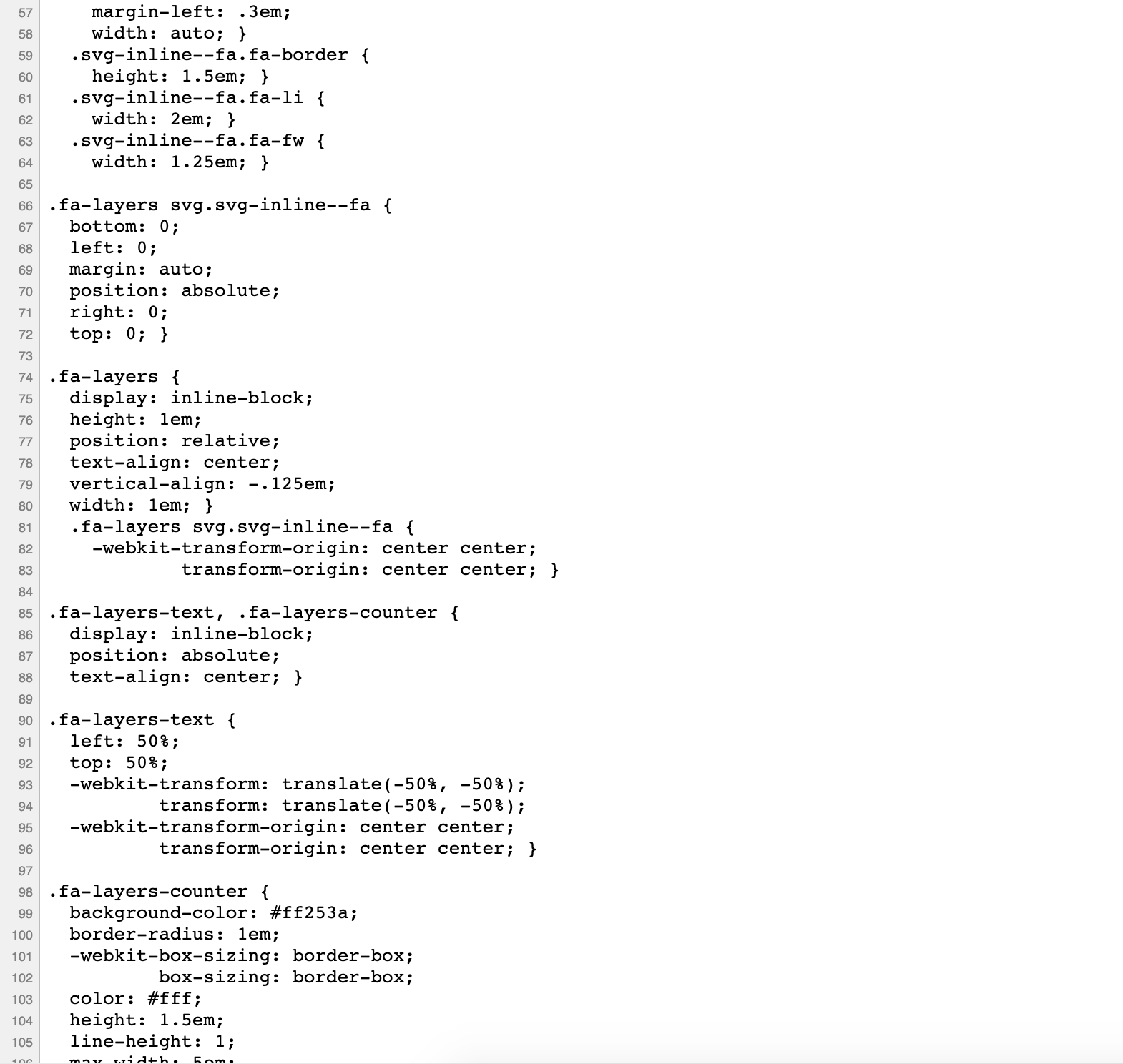

You'll then see the HTML, CSS, and JavaScript that make up the frontend code of the website you're using.

In a nutshell, coding is the act of translating solutions to problems, first expressed in a natural, human language, into a machine-readable language. Programming languages are what make that translation possible.

Coding requires that you understand the intricacies, the particular syntax, and the specific keywords that make up a programming language. Once you know these features of a language, you can start developing applications.

Conclusion

In this article we learned how computers work at a high level. We then defined what programming is, what coding is, and the differences between them.

Remember that coding is only the process of writing code to develop programs and applications.

Coding is a subset of programming, which entails the logical reasoning, analysis, and planning of a sequence of instructions for a computer program or application before any coding is done.

Programming is the bigger picture in the process. Coding is a part of that process, but should always come after the programming, or problem-solving and planning stage.

I hope this helped you understand programming and coding basics. Thanks for reading!